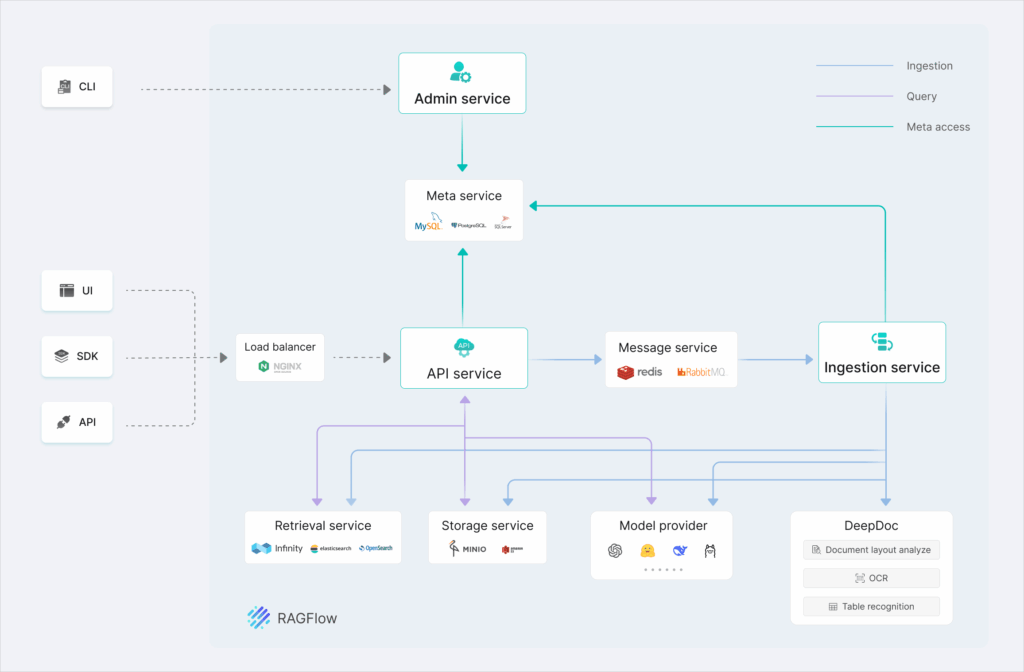

RAGFlow is a 100% open-source RAG (Retrieval-Augmented Generation) and agent engine you can run on-prem (no data exfiltration) to query, reason over, and cite information from PDF/DOCX/HTML/images/tables and structured sources. It deploys via Docker Compose, indexes in Elasticsearch (or Infinity), and orchestrates embeddings + re-ranking + LLM through a visual flow UI and APIs. For systems and application teams, this enables corporate search, support assistants, product copilots, or text-to-SQL—while keeping governance, cost, and latency under your control.

This guide focuses on operating RAGFlow: architecture, deployment, hardening, search tuning, local LLM integration, observability, runbooks, and real-world gotchas.

1) Operational architecture (the moving parts you’ll own)

- RAGFlow Server (API/UI, flow engine, and agents)

- Document engine: Elasticsearch by default (text + vectors), Infinity as an alternative

- Object storage (MinIO optional in the dev stack)

- Redis/MySQL (base services per compose)

- Embeddings: included in the full image; the slim image expects an external/local service

- LLM: configurable via any OpenAI-compatible API; point it at Ollama, LM Studio, or vLLM on your network

CPU/RAM: 4 vCPU/16 GB is a practical minimum; for large ingests and OCR/DeepDoc plan on 8–16 vCPU and 32 GB.

ARM64: no official images—build your own.
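A quick pre-flight check can compare a host against these rules of thumb before you install anything. The sketch below is plain Bash. The function names (`ragflow_sizing_tier`, `needs_custom_image`, `host_report`) and tier labels are hypothetical, and the thresholds are the ones quoted above, not official requirements.

```bash
#!/usr/bin/env bash
# Pre-flight sizing check for a RAGFlow host (hypothetical helper names).
# Thresholds mirror the rules of thumb above, not official requirements.

# ragflow_sizing_tier CPUS MEM_GB -> prints below-minimum | minimum | heavy-ingest
ragflow_sizing_tier() {
  local cpus=$1 mem_gb=$2
  if (( cpus < 4 || mem_gb < 16 )); then
    echo "below-minimum"
  elif (( cpus >= 8 && mem_gb >= 32 )); then
    echo "heavy-ingest"   # room for large ingests and OCR/DeepDoc
  else
    echo "minimum"
  fi
}

# needs_custom_image ARCH -> succeeds for ARM64, where no official image exists
needs_custom_image() {
  case "$1" in
    aarch64|arm64) return 0 ;;
    *) return 1 ;;
  esac
}

# host_report: inspects the current Linux host (nproc, /proc/meminfo, uname -m)
host_report() {
  local cpus mem_kb mem_gb arch
  cpus=$(nproc)
  mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  # Round to the nearest GiB: the kernel reserves some RAM, so a "16 GB" VM
  # usually reports slightly less than 16 GiB in MemTotal.
  mem_gb=$(( (mem_kb + 524288) / 1048576 ))
  arch=$(uname -m)
  echo "cpus=$cpus mem_gb=$mem_gb arch=$arch tier=$(ragflow_sizing_tier "$cpus" "$mem_gb")"
  if needs_custom_image "$arch"; then
    echo "ARM64 host: plan to build your own image"
  fi
}
```

Source the file and run `host_report` on the target VM; the pure functions take explicit numbers so they can also be used in provisioning scripts.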

2) Reproducible deployment (with guardrails)

Prereqs

```bash
# Kernel setting required by Elasticsearch/Infinity (apply now and persist across reboots)
sudo sysctl -w vm.max_map_count=262144
echo "vm.max_map_count=262144" | sudo tee -a /etc/sysctl.conf

# Requires Docker ≥ 24 and Docker Compose ≥ 2.26
```

Bring-up (CPU)

```bash
git clone https://github.com/infiniflow/ragflow.git
cd ragflow/docker

# Default: the slim edition (≈2 GB, no embedding models).
# For the full image, set RAGFLOW_IMAGE in .env (this directory) before starting.
docker compose -f docker-compose.yml up -d

# GPU (if you have CUDA): docker compose -f docker-compose-gpu.yml up -d

docker logs -f ragflow-server
```

Browse to http://SERVER_IP (port 80 by default) and create the first user.
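First boot can take a few minutes while the base services initialize, so scripted installs usually poll instead of sleeping for a fixed time. A minimal sketch, assuming `curl` is installed and the UI answers on port 80 of the host you pass in; `wait_until` and `ragflow_ui_up` are hypothetical helper names.

```bash
#!/usr/bin/env bash

# wait_until TIMEOUT_S INTERVAL_S CMD [ARGS...]
# Re-runs CMD every INTERVAL_S seconds until it succeeds or TIMEOUT_S elapses.
wait_until() {
  local timeout=$1 interval=$2
  shift 2
  local deadline=$(( SECONDS + timeout ))
  until "$@"; do
    if (( SECONDS >= deadline )); then
      echo "timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
}

# ragflow_ui_up HOST -> succeeds unless the request fails or returns HTTP 400+
ragflow_ui_up() {
  curl -fsS -o /dev/null --max-time 5 "http://$1/"
}
```

Typical use: `wait_until 600 10 ragflow_ui_up 192.0.2.10 && echo "RAGFlow UI is up"`.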

Pick your LLM and credentials

Edit `docker/service_conf.yaml.template` → set `user_default_llm` and its API key.

- Local LLM (Ollama through its built-in OpenAI-compatible `/v1` endpoint, LM Studio Server, or vLLM):

```yaml
BASE_URL: "http://ollama:11434/v1"
API_KEY: "local-key"
MODEL: "qwen2.5-7b-instruct"   # or whichever model you serve
```

- Embeddings: the full image (`infiniflow/ragflow:v0.21.0`) already includes them; on slim, configure your embeddings endpoint or switch images.

TLS: in production, place RAGFlow behind nginx/Traefik with Let’s Encrypt and enforce HTTPS.
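With nginx, the reverse proxy boils down to an HTTP-to-HTTPS redirect plus a TLS server block that forwards to RAGFlow. The sketch below renders such a config from Bash. It assumes certbot's default certificate paths under `/etc/letsencrypt/live/`, and that RAGFlow's published HTTP port has been moved to a loopback-only upstream such as `127.0.0.1:8080` (a hypothetical value; match your Compose port mapping) so the proxy owns 80/443. The upload size and timeout are placeholders.

```bash
#!/usr/bin/env bash

# render_nginx_tls DOMAIN UPSTREAM
# Prints an nginx config: port 80 redirects to HTTPS, port 443 terminates TLS
# with Let's Encrypt certificates, sends HSTS, and proxies to UPSTREAM (host:port).
render_nginx_tls() {
  local domain=$1 upstream=$2
  cat <<EOF
server {
    listen 80;
    server_name ${domain};
    return 301 https://\$host\$request_uri;
}

server {
    listen 443 ssl;
    server_name ${domain};

    ssl_certificate     /etc/letsencrypt/live/${domain}/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/${domain}/privkey.pem;
    add_header Strict-Transport-Security "max-age=31536000; includeSubDomains" always;

    client_max_body_size 512m;     # placeholder: allow large document uploads

    location / {
        proxy_pass http://${upstream};
        proxy_set_header Host \$host;
        proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto https;
        proxy_buffering off;       # pass streamed chat answers through immediately
        proxy_read_timeout 300s;   # placeholder: long LLM generations
    }
}
EOF
}
```

For example: `render_nginx_tls rag.example.com 127.0.0.1:8080 | sudo tee /etc/nginx/conf.d/ragflow.conf && sudo nginx -t && sudo systemctl reload nginx`.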

3) Governance & security (quick hardening)

- Exposed surface: only 80/443 (or your reverse-proxy port). Redis/MySQL/ES/Infinity stay on the Docker internal network.

- Auth: enforce strong password policy, disable open signup, and use 2FA at the reverse proxy if applicable.

- Isolation: run on a dedicated VM or Docker namespace with resource limits (`deploy.resources` in Compose).

- Egress: for air-gapped environments, use local LLM + embeddings and disable web search.

- Backups: schedule snapshots for ES/Infinity plus dumps for MySQL/Redis, and back up `service_conf.yaml.template`.

- Logs/retention: ship to syslog/ELK/Vector and set GDPR-compliant retention.
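To verify the "only 80/443" rule on a host, compare what is actually listening with an allow-list. A minimal sketch, assuming a Linux host with `ss` from iproute2; the helper names are hypothetical. Ports published by Docker normally appear here through `docker-proxy`; if you disabled the userland proxy, cross-check with `docker ps`. The stock Compose files may publish base-service ports on the host unless you remove those mappings, which is exactly what this check catches.

```bash
#!/usr/bin/env bash

# listening_ports -> unique TCP ports in LISTEN state on this host, one per line
listening_ports() {
  # -H: no header, -t: TCP, -l: listening, -n: numeric; column 4 is addr:port
  ss -Htln | awk '{ n = split($4, a, ":"); print a[n] }' | sort -un
}

# unexpected_ports "ALLOWED PORTS" < ports -> prints ports not on the allow-list
unexpected_ports() {
  local allowed=" $1 " port
  while read -r port; do
    [[ -z $port ]] && continue
    [[ $allowed == *" $port "* ]] || echo "$port"
  done
}
```

Usage: `listening_ports | unexpected_ports "22 80 443"` prints nothing when the host is compliant.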

4) Quality RAG pipeline (avoid demo-driven development)

RAGFlow lets you see and edit chunking, combine BM25 + vectors, and apply re-ranking. For a reliable system:

- Ingestion
  - Enable DeepDoc for complex or scanned PDFs (tables/images).
  - Define chunking templates (title + section; 400–800 tokens per chunk; 10–20% overlap).
  - Deduplicate by hash/ID and version documents.

- Retrieval
  - Use multi-recall: vectors (top-k 20–50) + BM25 (top-k 20), then fuse and re-rank.
  - Tune k to balance recall against latency; after re-ranking, keep the top 5–8 passages.

- Generation
  - Prompt with citation requirements and token limits; instruct the model to answer only from retrieved passages.

- Evaluation
  - Build a golden set of 30–50 real questions.
  - Measure Exact Match, groundedness (is the claim supported by the citation?), latency, and fallback behavior.
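Three of these steps are easy to prototype outside RAGFlow when you want to sanity-check settings. The Bash sketch below (hypothetical helper names) computes overlapping chunk windows for a given token count, flags byte-identical duplicate files by SHA-256, and fuses ranked lists from a vector and a BM25 retriever with Reciprocal Rank Fusion (RRF). RRF is one common fusion method, swapped in here for illustration; it is not necessarily what RAGFlow does internally. Token counts come from whatever tokenizer you use.

```bash
#!/usr/bin/env bash

# chunk_windows TOTAL_TOKENS CHUNK_SIZE OVERLAP_PCT
# Prints "start end" token offsets; consecutive windows share OVERLAP_PCT of CHUNK_SIZE.
chunk_windows() {
  local total=$1 size=$2 pct=$3
  local overlap=$(( size * pct / 100 ))
  local step=$(( size - overlap )) start=0 end
  (( total > 0 && step > 0 )) || return 0
  while :; do
    end=$(( start + size < total ? start + size : total ))
    echo "$start $end"
    (( end >= total )) && break
    start=$(( start + step ))
  done
}

# duplicate_files FILE... -> prints files whose content hash was already seen
duplicate_files() {
  sha256sum -- "$@" | awk 'seen[$1]++ { sub(/^[0-9a-f]+  /, ""); print }'
}

# rrf_fuse K RANKING_FILE...
# Each file lists document IDs (no whitespace) one per line, best first.
# Fused score = sum over lists of 1 / (K + rank); prints IDs by descending score.
rrf_fuse() {
  local k=$1
  shift
  awk -v k="$k" '
    FNR == 1 { rank = 0 }
    NF       { rank++; score[$1] += 1 / (k + rank) }
    END      { for (id in score) printf "%.10f %s\n", score[id], id }
  ' "$@" | LC_ALL=C sort -k1,1gr -k2,2 | awk '{ print $2 }'
}
```

For instance, `chunk_windows 2000 600 15` yields windows starting every 510 tokens, and `rrf_fuse 60 vector.txt bm25.txt | head -n 20` gives the candidate list you would hand to the re-ranker. K=60 is the value commonly used with RRF.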

Guardrail prompt (sketch):

```text
Answer ONLY using the retrieved passages and cite with [n].
If context is missing, reply “Not present in sources”.
```
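Part of the evaluation can be automated against this prompt contract: an answer is either the fallback phrase or carries [n] citations that point at passages that were actually retrieved. The Bash sketch below is a hypothetical grader for that format check only. It cannot judge groundedness (whether the cited passage really supports the claim), which still needs human or model review, and the exact-match normalization (lowercase, collapsed whitespace) is an assumption.

```bash
#!/usr/bin/env bash

# grade_answer ANSWER NUM_PASSAGES
# Prints: fallback | uncited | bad-citation | cited
grade_answer() {
  local answer=$1 n=$2 refs ref
  if [[ $answer == *"Not present in sources"* ]]; then
    echo "fallback"; return
  fi
  # Collect every [n] marker as a bare number, one per line
  refs=$(grep -oE '\[[0-9]+\]' <<<"$answer" | grep -oE '[0-9]+')
  if [[ -z $refs ]]; then
    echo "uncited"; return
  fi
  for ref in $refs; do
    # 10# forces base 10 so "[08]" is not read as octal
    if (( 10#$ref < 1 || 10#$ref > n )); then
      echo "bad-citation"; return
    fi
  done
  echo "cited"
}

# exact_match EXPECTED ACTUAL -> succeeds if equal after lowercasing and collapsing whitespace
exact_match() {
  local norm_a norm_b
  norm_a=$(tr '[:upper:]' '[:lower:]' <<<"$1" | tr -s '[:space:]' ' ' | sed 's/^ //; s/ $//')
  norm_b=$(tr '[:upper:]' '[:lower:]' <<<"$2" | tr -s '[:space:]' ' ' | sed 's/^ //; s/ $//')
  [[ $norm_a == "$norm_b" ]]
}
```

Running `grade_answer` over every golden-set answer gives a quick fallback rate and citation-validity rate to track between releases.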

5) Search & indexing tuning

Elasticsearch

- Heap: 50% of container RAM (cap at 31 GB)

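- Shards: 1–3 primaries depending on volume; 0 replicas on a single node (a replica is never allocated on the same node as its primary, so any replica leaves the cluster yellow), 1 once you add nodes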

- KNN: enable dense_vector/HNSW if supported by your build

- BM25: language-specific analyzers; synonyms if needed
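The heap rule and the single-node replica setting are simple enough to script. A minimal sketch with hypothetical helper names, assuming `curl`, an Elasticsearch endpoint reachable at the URL you pass (add `-u user:password` to the curl calls if security is enabled), and that you apply the resulting `ES_JAVA_OPTS` through your Compose environment. `_cluster/health` and `_all/_settings` are standard Elasticsearch APIs; on one node, replicas can never be allocated, so setting them to 0 is what keeps the cluster green.

```bash
#!/usr/bin/env bash

# es_heap_mb CONTAINER_MEM_MB -> half the container memory, capped at 31 GiB (in MiB)
es_heap_mb() {
  local half=$(( $1 / 2 )) cap=$(( 31 * 1024 ))
  echo $(( half < cap ? half : cap ))
}

# es_java_opts CONTAINER_MEM_MB -> matching -Xms/-Xmx (min and max kept equal)
es_java_opts() {
  local heap
  heap=$(es_heap_mb "$1")
  echo "-Xms${heap}m -Xmx${heap}m"
}

# parse_es_status < health.json -> green | yellow | red
parse_es_status() {
  sed -n 's/.*"status" *: *"\([a-z]*\)".*/\1/p'
}

# es_status URL -> prints the cluster status (empty if unreachable)
es_status() {
  curl -fsS --max-time 5 "$1/_cluster/health?filter_path=status" | parse_es_status
}

# es_single_node_replicas URL -> sets number_of_replicas=0 on existing (non-hidden) indices
es_single_node_replicas() {
  curl -fsS -X PUT "$1/_all/_settings" \
    -H 'Content-Type: application/json' \
    -d '{"index":{"number_of_replicas":0}}'
}
```

For a container limited to 16 GiB, `es_java_opts 16384` suggests an 8 GiB heap; `es_status http://localhost:9200` is a cheap probe for the yellow/red alert.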

Infinity

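- Set `DOC_ENGINE=infinity` in `docker/.env` and re-ingest: switching engines means stopping the stack with `docker compose down -v`, which deletes the existing volumes and data.

- Not officially supported on Linux/arm64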
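Because switching engines involves `docker compose down -v`, which wipes the volumes, it is worth scripting the change so nobody runs the destructive step by accident. A minimal sketch, run from the `ragflow/` checkout. `set_env_var` and `switch_doc_engine` are hypothetical helpers; values must not contain `|`, `&`, or backslashes because they are passed to `sed`.

```bash
#!/usr/bin/env bash

# set_env_var FILE KEY VALUE -> sets KEY=VALUE in a dotenv file, keeping FILE.bak
set_env_var() {
  local file=$1 key=$2 value=$3
  cp -- "$file" "$file.bak"
  if grep -q "^${key}=" "$file"; then
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# switch_doc_engine ENGINE -> WARNING: deletes the existing volumes and indexed data
switch_doc_engine() {
  local engine=$1 answer
  read -r -p "This wipes all RAGFlow volumes. Type 'wipe' to continue: " answer
  [[ $answer == wipe ]] || return 1
  docker compose -f docker/docker-compose.yml down -v
  set_env_var docker/.env DOC_ENGINE "$engine"
  docker compose -f docker/docker-compose.yml up -d
}
```

After `switch_doc_engine infinity`, re-ingest your collections; the `.bak` copy of `docker/.env` makes rolling back the setting trivial (the data, however, is gone).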

Re-ranking

- Turn it on: it typically improves accuracy by roughly 10–20% for a modest latency overhead; confirm the gain on your golden set.

6) Integrating with your applications

- APIs: RAGFlow exposes REST endpoints for collections, ingest, chat, and deep-search. Front them with your own API for audit, tenant-scoped authz, and rate limits.

- Text-to-SQL: connect a read-only warehouse for natural-language queries over documented tables.

- Events: log queries/responses/citations (for playback and targeted re-training).

- Multi-tenant: separate by collections/namespaces; for hard isolation, run another instance.
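A thin wrapper is often enough to start: every call goes through one function that adds the bearer token and writes an audit record. A minimal sketch, assuming RAGFlow's HTTP API accepts `Authorization: Bearer <API key>` and that `/api/v1/datasets` lists datasets, as in the project's HTTP API reference (check the reference for your version). `RAGFLOW_URL`, `AUDIT_LOG`, and the helper names are hypothetical, and the TSV audit format is an arbitrary choice.

```bash
#!/usr/bin/env bash

RAGFLOW_URL=${RAGFLOW_URL:-http://localhost}   # hypothetical default
AUDIT_LOG=${AUDIT_LOG:-ragflow-audit.tsv}      # hypothetical audit file

# audit_record USER METHOD PATH STATUS -> one TSV line with a UTC timestamp
audit_record() {
  printf '%s\t%s\t%s\t%s\t%s\n' "$(date -u +%Y-%m-%dT%H:%M:%SZ)" "$1" "$2" "$3" "$4"
}

# ragflow_api USER METHOD PATH [JSON_BODY]
# Calls the API with the token in RAGFLOW_API_KEY, prints the response body,
# appends an audit record, and succeeds only on a 2xx status.
ragflow_api() {
  local user=$1 method=$2 path=$3 body=${4:-} tmp status
  : "${RAGFLOW_API_KEY:?set RAGFLOW_API_KEY}"
  tmp=$(mktemp)
  local args=(-sS -o "$tmp" -w '%{http_code}' -X "$method"
              -H "Authorization: Bearer $RAGFLOW_API_KEY")
  if [[ -n $body ]]; then
    args+=(-H 'Content-Type: application/json' -d "$body")
  fi
  status=$(curl "${args[@]}" "${RAGFLOW_URL}${path}")   # "000" if unreachable
  audit_record "$user" "$method" "$path" "$status" >> "$AUDIT_LOG"
  cat "$tmp"
  rm -f "$tmp"
  [[ $status == 2* ]]
}
```

Example: `ragflow_api alice GET '/api/v1/datasets?page=1&page_size=10'`. In production, the same pattern belongs in your own API gateway, where tenant-scoped authorization and rate limits can be enforced before the call is forwarded.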

7) Local LLM without OpenAI

Common options:

- Ollama through its built-in OpenAI-compatible endpoint (`http://ollama:11434/v1`) → models like `qwen2.5-7b/14b`, `llama-3.1-8b`, `mistral-7b`

- LM Studio Server or vLLM serving an instruct model

- text-generation-webui with OpenAI compatibility

Configure BASE_URL and API_KEY in service_conf.yaml.template. The full image already includes embeddings, so your pipeline stays local.

Performance: if you have a GPU, use the GPU compose file (`docker-compose-gpu.yml`) to accelerate DeepDoc/OCR and embeddings; 2–5× lower latency is a reasonable expectation on medium workloads, but measure it on yours.
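Before pointing RAGFlow at a local server, it helps to confirm that the OpenAI-compatible endpoint is reachable and actually serves the model you configured. A minimal sketch, assuming `BASE_URL` already ends in `/v1` (as in the Ollama example) so the model list lives at `$BASE_URL/models`. The helper names are hypothetical, and the `grep`/`sed` parsing stands in for `jq` on hosts that lack it. Model IDs are whatever your server reports (Ollama, for instance, uses tags such as `qwen2.5:7b`).

```bash
#!/usr/bin/env bash

# model_ids < models.json -> one model id per line from an OpenAI-style /models response
model_ids() {
  grep -oE '"id" *: *"[^"]*"' | sed -E 's/.*"([^"]*)"$/\1/'
}

# llm_has_model BASE_URL API_KEY MODEL -> succeeds if the server lists MODEL
llm_has_model() {
  curl -fsS --max-time 10 -H "Authorization: Bearer $2" "$1/models" |
    model_ids | grep -qxF -- "$3"
}
```

Run it from a container on the same Docker network when `BASE_URL` uses a service name such as `ollama`, e.g. `llm_has_model http://ollama:11434/v1 local-key qwen2.5:7b && echo "model available"`; from the host, use the server's IP instead.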

8) Observability, SLAs, and runbooks

- Metrics: Prometheus + node_exporter; latency of /api/chat, ingest queues, OCR time, index size/health, ES heap.

- Alerts: ES yellow/red, wrong `vm.max_map_count`, LLM errors, Let’s Encrypt certificate ≤15 days from expiry.

- Backups:
  - ES/Infinity: daily snapshots (S3 or external FS) with 7/30/90-day retention
  - DB/Redis/config: daily encrypted dumps off-box

- Updates: change the image tag (`v0.21.0` → `v0.21.1`), run `docker compose pull && docker compose up -d`, and validate with the golden set.

- DR: fresh VM → restore snapshots → incremental re-index if needed. Target RTO < 60 min (panel/API).
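Several of these alert conditions can be checked from cron long before a full monitoring stack exists, and the same script can take the encrypted database dump. A minimal sketch with hypothetical helper names. It assumes OpenSSL 1.1.1 or newer (for `-pbkdf2`), a MySQL container whose environment defines `MYSQL_ROOT_PASSWORD` as the official image expects, a container name you pass in (check `docker ps`; nothing here relies on RAGFlow's naming), and an exported `BACKUP_PASSPHRASE`. Shipping files off-box and pruning to the 7/30/90 tiers are left to your backup tool.

```bash
#!/usr/bin/env bash

# cert_expires_within DAYS CERT_PEM -> succeeds (alert) if the cert expires within DAYS;
# a missing or unreadable file also triggers the alert
cert_expires_within() {
  ! openssl x509 -checkend $(( $1 * 86400 )) -noout -in "$2" >/dev/null 2>&1
}

# map_count_ok [VALUE] -> succeeds if vm.max_map_count >= 262144 (reads the kernel value by default)
map_count_ok() {
  local value=${1:-$(cat /proc/sys/vm/max_map_count)}
  (( value >= 262144 ))
}

# es_status_alert STATUS -> succeeds (alert) for yellow, red, or an empty/unknown status
es_status_alert() {
  [[ $1 != green ]]
}

# backup_mysql CONTAINER OUT_DIR -> encrypted, compressed dump of all databases
backup_mysql() {
  local container=$1 out="$2/mysql-$(date -u +%Y%m%dT%H%M%SZ).sql.gz.enc" rc
  : "${BACKUP_PASSPHRASE:?export BACKUP_PASSPHRASE}"
  docker exec "$container" sh -c \
      'exec mysqldump -uroot -p"$MYSQL_ROOT_PASSWORD" --all-databases --single-transaction' |
    gzip |
    openssl enc -aes-256-cbc -pbkdf2 -salt -pass env:BACKUP_PASSPHRASE -out "$out"
  rc=("${PIPESTATUS[@]}")
  if (( rc[0] != 0 || rc[1] != 0 || rc[2] != 0 )); then
    echo "backup failed (exit codes: ${rc[*]})" >&2
    rm -f -- "$out"
    return 1
  fi
  echo "$out"
}
```

To test a restore, reverse the pipeline: `openssl enc -d -aes-256-cbc -pbkdf2 -pass env:BACKUP_PASSPHRASE -in FILE | gunzip | docker exec -i CONTAINER sh -c 'exec mysql -uroot -p"$MYSQL_ROOT_PASSWORD"'`.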

9) Common gotchas (and fixes)

- “Network error” on first login → wait for the `ragflow-server` startup banner (`docker logs -f ragflow-server`); initial boot can take a few minutes.

- OCR/DeepDoc fails → insufficient RAM/GPU; switch to the GPU compose file or reduce ingest batch size.

- ES crashes → increase heap and check `vm.max_map_count`; keep ≥20% free disk.

- ARM64 → build your own image and recompile dependencies (no official image).

- LLM unreachable → `curl $BASE_URL/models -H "Authorization: Bearer $API_KEY"` (with `BASE_URL` already ending in `/v1`); check CORS/network.

- High latency → lower top-k, rely on re-ranking so fewer passages reach the LLM, cache answers, use smaller embeddings.
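For the disk rule, Elasticsearch's default disk watermarks (the low one sits at 85% used) start restricting shard allocation as the disk fills, so it pays to alert before that point. A minimal sketch with hypothetical helper names, assuming the Docker data root is `/var/lib/docker` (the default; check `docker info` if you moved it) and the POSIX `df -P` output format.

```bash
#!/usr/bin/env bash

# disk_free_pct PATH -> integer percentage of free space on PATH's filesystem
disk_free_pct() {
  # df -P: column 5 is "Capacity" (percent used), e.g. "42%"
  df -P -- "$1" | awk 'NR == 2 { gsub(/%/, "", $5); print 100 - $5 }'
}

# disk_free_ok PATH MIN_PCT -> succeeds if at least MIN_PCT percent is free
disk_free_ok() {
  local free
  free=$(disk_free_pct "$1") || return 1
  [[ -n $free ]] && (( free >= $2 ))
}
```

Usage: `disk_free_ok /var/lib/docker 20 || echo "less than 20% free on the Docker data root"`.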

10) Production checklist

- TLS enforced with HSTS behind a reverse proxy

- Local LLM/embeddings or vendors under DPA with scoped access

- Backups tested; restoration plan documented

- Observability (metrics/logs) and alerts in place

- Golden set and quality thresholds agreed with the business

- Data retention and classification (confidential/PII)

- RBAC per collection/tenant and rate-limit per token/IP

- Update plan (staging → prod) and maintenance windows

11) Why RAGFlow vs. “build it by hand”?

- Less glue: you get a flow UI, chunking templates, DeepDoc, and agents out of the box—no need to stitch Haystack/LangChain + retrievers + OCR + UI from scratch

- Operable: single compose stack; clear ES/inference tuning points

- Traceable citations: reduces friction with compliance and audit

- Local-first: can run air-gapped without losing key features

12) Real admin use-cases in 1–2 days

- NOC/Support: ingest runbooks, KB, and post-mortems; “Deep Search” agent with citations and actionable snippets

- App owners: text-to-SQL over the product warehouse; audit queries and table lineage

- Legal/Compliance: contract analysis (OCR) with assisted redlining and clause extraction

Conclusion

For systems and application administrators, RAGFlow delivers what we needed: a robust, local-first RAG/Agent stack that deploys and operates like any serious service (TLS, backups, metrics), with verifiable citations and true multimodality. It won’t replace your CMDB or SIEM, but it becomes the context layer your organization was missing to query corporate knowledge inside your perimeter—without opaque per-token costs.

If you already have Docker and a domain, you can have “your ChatGPT for documents” running on your network today. The rest—tuning, governance, and SLOs—is familiar ground for a good admin.

Source: Noticias inteligencia artificial