Compression in in-memory databases like Redis and Valkey can be the difference between a cluster that sails through traffic spikes and one that chokes on latency, CPU, or memory. Choosing the right codec—and where to apply it—isn’t trivial: compressing “hot” cache values is not the same as compressing persistence files, and compressing JSON/HTML differs from compressing already-compressed binaries (images, ZIP, video). This article summarizes which codecs matter today (LZ4, Zstd, Gzip, Brotli, etc.), where to apply them in Redis/Valkey, and when each option pays off.

Where to compress in Redis/Valkey (and where not)

Before codecs, understand the layers where compression usually lives:

- Values (application/client-side)

  - Most common in production: compress the payload (JSON, HTML, blobs) in the client before SET/HSET, and decompress after GET.

  - Pros: full control (codec & level), easy feature-flag, saves RAM and bandwidth.

  - Cons: CPU in the application and extra latency if you overdo the level.

- Persistence (RDB/AOF)

  - Redis/Valkey can compress snapshots (RDB) (historically via LZF) and you can compress AOF out of band.

  - Pros: smaller disk footprint and faster backups.

  - Cons: doesn’t help hot in-memory usage; mind CPU during save/load.

- Network traffic

  - Typically do not compress RESP on the wire in production (TLS already adds overhead; transport-level compression rarely pays off).

  - For WAN or inter-DC links, consider proxying with selective compression (large objects only).
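
For the persistence layer, RDB compression is controlled server-side by the `rdbcompression` directive, while "out of band" compression of an AOF or backup copy happens outside the server. The following is a minimal sketch of the latter, assuming you work on a copy of the file rather than the live one; the function name and the file paths in the usage note are hypothetical, and gzip is used only because it ships with Python.

```python
import gzip
import shutil
from pathlib import Path


def compress_file(src: Path, dst: Path, level: int = 6) -> float:
    """Stream-compress src into dst (gzip) and return the size ratio (in/out)."""
    with open(src, "rb") as fin, gzip.open(dst, "wb", compresslevel=level) as fout:
        # Copy in 1 MiB chunks so memory use stays flat for large files.
        shutil.copyfileobj(fin, fout, length=1024 * 1024)
    return src.stat().st_size / dst.stat().st_size
```

A call such as `compress_file(Path("backup/appendonly.aof.copy"), Path("backup/appendonly.aof.copy.gz"))` would run from a backup job, off the hot path, so the CPU cost does not compete with request handling.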

Rule of thumb: compress in the client if the value exceeds a size threshold (e.g., > 1–2 KiB) and is highly repetitive (JSON/HTML/CSV/Protobuf), with LZ4 when latency is paramount or Zstd when you want more savings at reasonable cost.
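
The rule of thumb can be sketched as a small gate that runs before any write. This is an illustration under stated assumptions: the constants are hypothetical starting points, and the compressibility probe uses zlib at level 1 from the standard library as a cheap stand-in for whichever codec (LZ4 or Zstd) you actually deploy.

```python
import zlib

MIN_SIZE = 2048         # bytes; assumed threshold, tune per workload
SAMPLE_SIZE = 4096      # probe only a prefix so the check stays cheap
MIN_SAMPLE_RATIO = 1.2  # hypothetical cutoff for "repetitive enough"


def should_compress(payload: bytes) -> bool:
    """Return True when the payload is large enough and looks compressible."""
    if len(payload) < MIN_SIZE:
        return False
    sample = payload[:SAMPLE_SIZE]
    probe = zlib.compress(sample, 1)  # fast level; stand-in for the real codec
    return len(sample) / len(probe) >= MIN_SAMPLE_RATIO
```

Probing a prefix trades accuracy for speed: a payload whose first 4 KiB is atypical can be misjudged, so the cutoff is worth validating against real samples.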

Codec comparison table

Ratios are indicative for text-like payloads (JSON/HTML/logs). With already-compressed binaries (JPEG, MP4, ZIP), ratios drop and you often want no compression.

| Method | Estimated ratio | Practical example | Speed | Recommended scenario |
|---|---|---|---|---|
| Identity | 1× (no compression) | Binary 10 MB → 10 MB | Max | Non-compressible data, ultra-low latency |
| LZ4 | 1.10× – 1.20× | Text 10 MB → 8.5–9 MB | Extremely high | High-QPS caches (WordPress, APIs) |
| Zstd | 1.40× – 1.50× | Text 10 MB → 6.5–7 MB | Very high | Pro cache, best “space vs speed” balance |
| Gzip | 1.35× – 1.45× | Text 10 MB → 7–7.5 MB | High | Universal compatibility |
| Brotli | 1.45× – 1.60× | Web assets 10 MB → 6–7 MB | Low–medium | Web/HTTP optimization (not typical for hot RAM) |
| Bzip2 | 1.50× – 1.60× | Text 10 MB → 6.2–6.6 MB | Very low | Backups / cold storage |
| LZF | 1.10× – 1.15× | Text 10 MB → 8.7–9 MB | Extremely high | Legacy/compatibility; modest gains |
| LZMA | 1.60× – 2.0× | Text 10 MB → 5–6.5 MB | Very low | Offline files, space above all else |
| Zlib | 1.35× – 1.45× | Text 10 MB → 7–7.5 MB | High | Classic/mixed platforms |
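
The ratio column is input size divided by output size, so converting a ratio into an expected footprint or a savings percentage is simple arithmetic. The helper names below are hypothetical; the figures are the Zstd row from the table.

```python
def compressed_size(original: float, ratio: float) -> float:
    """Expected size after compression, in the same unit as `original`."""
    return original / ratio


def savings_pct(ratio: float) -> float:
    """Share of the original size that is saved, as a percentage."""
    return (1 - 1 / ratio) * 100


# Zstd row: 1.40x to 1.50x on a 10 MB text payload
print(f"{compressed_size(10, 1.40):.2f} MB")  # 7.14 MB
print(f"{compressed_size(10, 1.50):.2f} MB")  # 6.67 MB
print(f"{savings_pct(1.40):.1f} %")           # 28.6 %
print(f"{savings_pct(1.50):.1f} %")           # 33.3 %
```

Note that savings grow more slowly than the ratio: going from 1.5× to 2.0× adds about 17 percentage points, which is why chasing the last fraction of ratio at high CPU cost often does not pay off.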

Quick takeaways:

- LZ4 = “latency first”.

- Zstd = “modern default balance”.

- Gzip/Zlib = “universal compatibility”.

- Brotli/LZMA/Bzip2 = “max ratio, not for real-time”.

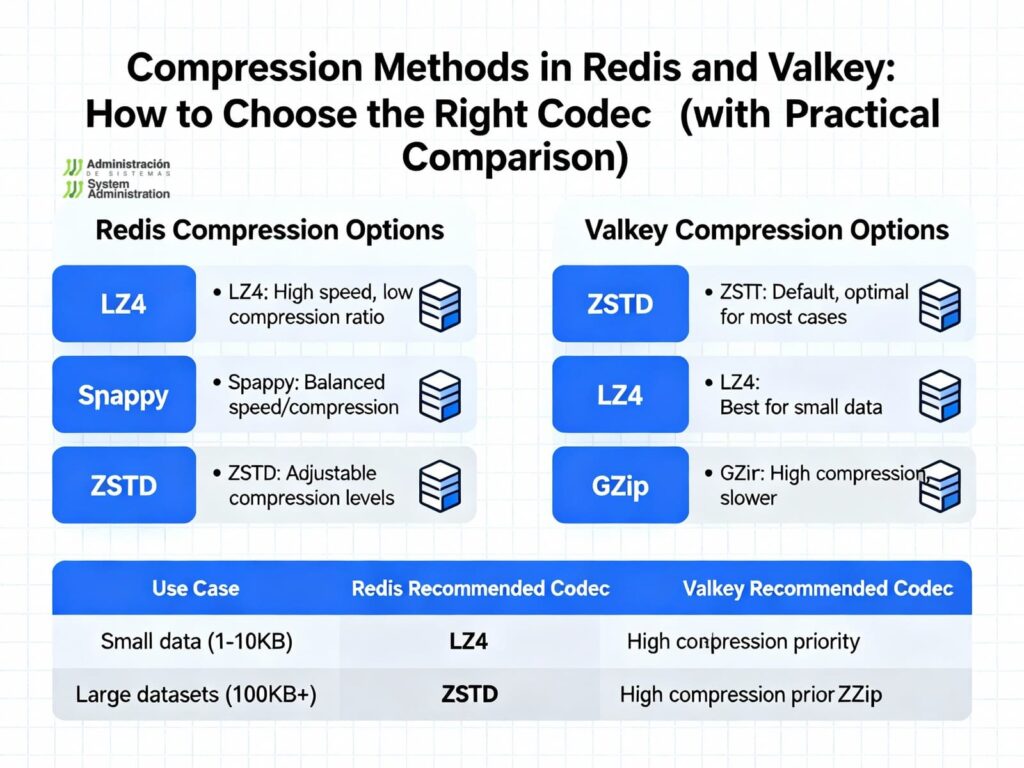

Redis vs Valkey: what really changes?

- In production, value compression is typically done client-side (middleware/SDK/proxy).

- Redis and Valkey share the ecosystem and codec availability via clients, middleware, and modules. The most common cache choices are lz4 and zstd; lzf persists for compatibility or very tight CPU; none suits ultra-low latency or already-compressed data.

- Valkey often exposes a broader set of compressors via integrations (e.g., django-valkey), while Redis clients show the same usual suspects. In practice, LZ4/Zstd behave equivalently on both.

Operational decision rules

- Pick a size threshold

  - e.g., don’t compress values < 1 KiB; compress ≥ 2–4 KiB.

  - Use Identity if the content is already compressed (detect via headers/magic bytes).

- Choose codec by objective

  - Low p99/p999 → LZ4 (default level): excellent throughput and latency.

  - RAM/egress savings without killing CPU → Zstd (levels 1–6; avoid 15+ except batch/offline).

  - Compatibility → Gzip/Zlib.

  - Cold storage/backup → Brotli/LZMA/Bzip2 (off the hot path).

- Avoid double compression

  - Detect Content-Encoding / magic bytes (GZIP header, ZIP EOCD, JPEG SOI).

- Observability is mandatory

  - Track ratio, (de)compression time, Redis RTT p95/p99, and CPU.

  - If p99 rises, lower Zstd level or switch to LZ4.
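
The "avoid double compression" rule can be approximated with a prefix check on well-known magic bytes. This is a sketch, not an exhaustive detector: the function name is hypothetical, and for ZIP it checks the local file header at the start of the payload rather than scanning for the EOCD record at the end, which is cheaper but misses empty archives.

```python
from typing import Optional

# Leading bytes of common already-compressed formats.
MAGIC_PREFIXES = {
    b"\x1f\x8b": "gzip",
    b"\x28\xb5\x2f\xfd": "zstd frame",
    b"\x04\x22\x4d\x18": "lz4 frame",
    b"PK\x03\x04": "zip",
    b"\xff\xd8\xff": "jpeg",
    b"\x89PNG\r\n\x1a\n": "png",
}


def detect_compressed(payload: bytes) -> Optional[str]:
    """Return the detected format name, or None if no known prefix matches."""
    for prefix, name in MAGIC_PREFIXES.items():
        if payload.startswith(prefix):
            return name
    return None
```

When `detect_compressed` returns a name, the value would be stored as-is (Identity); when it returns `None`, the size threshold and codec choice above still apply.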

Integration examples

Python (Redis/Valkey) with Zstandard & threshold

```python
import redis
import zstandard as zstd

r = redis.Redis(host="localhost", port=6379)

ZSTD_LEVEL = 3
THRESHOLD = 2048  # 2 KiB
MAGIC = b"\x28ZSTD\x29"  # tiny header marker: the ASCII bytes "(ZSTD)"

cctx = zstd.ZstdCompressor(level=ZSTD_LEVEL)
dctx = zstd.ZstdDecompressor()


def kv_set(key: str, data: bytes) -> None:
    # Compress only payloads at or above the threshold; small values are stored raw.
    if len(data) >= THRESHOLD:
        r.set(key, MAGIC + cctx.compress(data))
    else:
        r.set(key, data)


def kv_get(key: str) -> bytes | None:
    val = r.get(key)
    if val is None:  # missing key; an empty value (b"") is still a valid hit
        return None
    if val.startswith(MAGIC):
        return dctx.decompress(val[len(MAGIC):])
    return val
```

Node.js with LZ4 (latency-critical)

```javascript
const Redis = require("ioredis");
const lz4 = require("lz4");

const r = new Redis();
const THRESHOLD = 2048;
const MAGIC = Buffer.from([0x28, 0x4c, 0x5a, 0x34, 0x29]); // "(LZ4)"

function setLZ4(key, buf) {
  if (buf.length < THRESHOLD) return r.setBuffer(key, buf);
  // Use the LZ4 frame format: raw blocks do not record the original size,
  // so they cannot be decoded without storing it separately.
  const framed = lz4.encode(buf);
  return r.setBuffer(key, Buffer.concat([MAGIC, framed]));
}

async function getLZ4(key) {
  const val = await r.getBuffer(key);
  if (!val) return null; // missing key
  if (val.subarray(0, MAGIC.length).equals(MAGIC)) {
    return lz4.decode(val.subarray(MAGIC.length));
  }
  return val; // stored uncompressed (below threshold)
}
```

Django-Valkey (configuring a compressor)

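```python
# Key names below are this article's illustration; check the django-valkey
# documentation for the exact settings supported by your version.
VALKEY = {
    "BACKENDS": {
        "default": {
            "HOST": "127.0.0.1",
            "PORT": 6379,
            "DB": 0,
            "COMPRESSION": {
                "ALGORITHM": "zstd",  # "lz4", "zstd", "gzip", "none", ...
                "LEVEL": 3,
                "THRESHOLD": 2048,  # bytes
                "HEADER": True,  # mark format/version
            },
        }
    }
}
```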

Micro-bench: how to evaluate in your environment

- Build representative datasets:

  - JSON (1–50 KiB), HTML, logs, non-compressible binary blobs, and a real traffic mix.

- Measure per codec and level:

  - Ratio (bytes_in/bytes_out), t_comp / t_decomp, Redis RTT p50/p95/p99, CPU (app/host).

- Try THRESHOLD = {1 KiB, 2 KiB, 4 KiB} and QPS bursts (read-heavy 80–95% typical for caches).

- Compare LZ4 (default) and Zstd (levels 1–6).

  - If p99 rises >10–15% vs Identity, lower level or switch to LZ4.

  - If RAM is the bottleneck, raise Zstd to 3–5 and validate CPU.
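
A harness for the codec-side measurements (ratio, t_comp, t_decomp) might look like the sketch below. It uses zlib from the standard library so it runs anywhere; LZ4 and Zstd would be plugged in through their own packages by passing the corresponding compress/decompress callables. It measures codec time only, so Redis RTT and host CPU still need to be captured against a live server. The `bench` function and the synthetic payloads are assumptions for illustration.

```python
import statistics
import time
import zlib


def bench(compress, decompress, payloads, rounds=20):
    """Return ratio and codec-time percentiles (microseconds) for one codec."""
    blobs = [compress(p) for p in payloads]
    ratio = sum(map(len, payloads)) / sum(map(len, blobs))
    t_comp, t_decomp = [], []
    for _ in range(rounds):
        for raw, blob in zip(payloads, blobs):
            t0 = time.perf_counter()
            compress(raw)
            t1 = time.perf_counter()
            decompress(blob)
            t2 = time.perf_counter()
            t_comp.append((t1 - t0) * 1e6)
            t_decomp.append((t2 - t1) * 1e6)

    def pct(samples, q):
        # quantiles(n=100) yields 99 cut points; index q-1 is the q-th percentile
        return statistics.quantiles(samples, n=100)[q - 1]

    return {
        "ratio": round(ratio, 2),
        "comp_p50_us": pct(t_comp, 50),
        "comp_p99_us": pct(t_comp, 99),
        "decomp_p50_us": pct(t_decomp, 50),
        "decomp_p99_us": pct(t_decomp, 99),
    }


# Synthetic JSON-like payloads; replace with samples captured from real traffic.
payloads = [
    (b'{"id": %d, "status": "active", "tags": ["a", "b"]}' % i) * 40
    for i in range(50)
]

for level in (1, 6):
    result = bench(lambda b, lv=level: zlib.compress(b, lv), zlib.decompress, payloads)
    print(f"zlib-{level}", result)
```

Synthetic payloads like these are far more repetitive than production data, so the absolute ratios will look better than the table above; the harness is meant for comparing codecs and levels against each other on your own samples.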

Common scenarios (what to pick)

- WordPress/HTML/API caches with high QPS → LZ4 (or Zstd-1 if p99 allows).

- JSON/Protobuf catalogs with long TTLs → Zstd-3/4 (save RAM and egress).

- Events/logs in lists/streams → LZ4 (mass ingest, low latency).

- Backups/snapshots (RDB/AOF out of the hot path) → Brotli/LZMA/Bzip2.

- Already-compressed binaries (images, ZIP) → Identity (don’t compress).

Anti-patterns & gotchas

- Over-compression: high Zstd/LZMA levels ↑CPU and ↑p99 with tiny byte gains.

- Small-key overhead: for < 1 KiB values, header + CPU usually loses.

- Double compression: detect and skip.

- Fragmentation: very large objects (> 512 KiB) can pressure the allocator; consider chunking.

- Pipelines: compress at the edge (API) but not in internal jobs if the payload is already compressed.
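
For the fragmentation point, chunking can be sketched as splitting one large value into fixed-size parts plus a small metadata entry. The key layout (`key:meta`, `key:0`, `key:1`, ...) and the 256 KiB chunk size are hypothetical choices for illustration, not a convention defined by Redis or Valkey.

```python
from typing import Dict

CHUNK_SIZE = 256 * 1024  # assumed; keeps each stored value well under 512 KiB


def split_chunks(key: str, data: bytes, chunk_size: int = CHUNK_SIZE) -> Dict[str, bytes]:
    """Map one large value to {key:meta, key:0, key:1, ...}."""
    parts = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    mapping = {f"{key}:{i}": part for i, part in enumerate(parts)}
    mapping[f"{key}:meta"] = str(len(parts)).encode()  # number of chunks
    return mapping


def join_chunks(key: str, mapping: Dict[str, bytes]) -> bytes:
    """Reassemble the original value from the chunk mapping."""
    count = int(mapping[f"{key}:meta"])
    return b"".join(mapping[f"{key}:{i}"] for i in range(count))
```

The mapping can be written in one round trip with a multi-key write or a pipeline. In cluster mode the chunk keys would need a shared hash tag so they land in the same slot, and compressing before splitting keeps the codec working on the whole payload.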

Final recommendations

- Modern default: Zstd-3 with 2–4 KiB threshold and a feature flag to fall back to LZ4 if p99 rises.

- Extreme latency: LZ4 frames, avoid exotic levels.

- Compatibility: Gzip/Zlib where the ecosystem requires it.

- Persistence: compress RDB/backups; keep AOF simple for minimal replay overhead.
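
The feature-flag fallback in the first recommendation only works if readers can decode values written under either codec. One way to get that is a one-byte codec tag in front of every value, sketched below. To keep the example runnable with the standard library, zlib at two levels stands in for the real codecs (level 6 for Zstd-3, level 1 for LZ4); the tag values, names, and threshold are assumptions.

```python
import zlib

RAW, BALANCED, FAST = b"\x00", b"\x01", b"\x02"  # hypothetical one-byte codec tags
THRESHOLD = 2048  # bytes

ENCODERS = {
    BALANCED: lambda b: zlib.compress(b, 6),  # stand-in for Zstd-3
    FAST: lambda b: zlib.compress(b, 1),      # stand-in for LZ4
}
DECODERS = {
    BALANCED: zlib.decompress,
    FAST: zlib.decompress,
}


def encode(payload: bytes, use_fast: bool = False) -> bytes:
    """Tag and (optionally) compress a value; `use_fast` is the feature flag."""
    if len(payload) < THRESHOLD:
        return RAW + payload
    tag = FAST if use_fast else BALANCED
    return tag + ENCODERS[tag](payload)


def decode(stored: bytes) -> bytes:
    """Decode by tag, regardless of which codec the writer's flag selected."""
    tag, body = stored[:1], stored[1:]
    if tag == RAW:
        return body
    return DECODERS[tag](body)
```

Because the tag travels with the value, flipping the flag affects only new writes; entries written earlier stay readable until they expire.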
