Docker has officially announced the General Availability (GA) of Docker Model Runner (DMR), its tool for managing, running, and distributing AI models locally with the same commands, workflows, and policies teams already use with Docker. The goal is straightforward: pull, run, and serve models packaged as OCI artifacts, expose them via OpenAI-compatible APIs, and wire everything into Docker Desktop, Docker Engine, Compose, and Testcontainers without adding new tools or security exceptions.

What is DMR and what problem does it solve?

Docker Model Runner turns models into first-class artifacts inside the Docker ecosystem. It lets you:

- Pull models from Docker Hub (as OCI artifacts) or from Hugging Face if they are in GGUF format (in which case the Hugging Face backend packages them on the fly as OCI artifacts).

- Serve models through an OpenAI-style API, making it easy to integrate existing applications without rewriting clients.

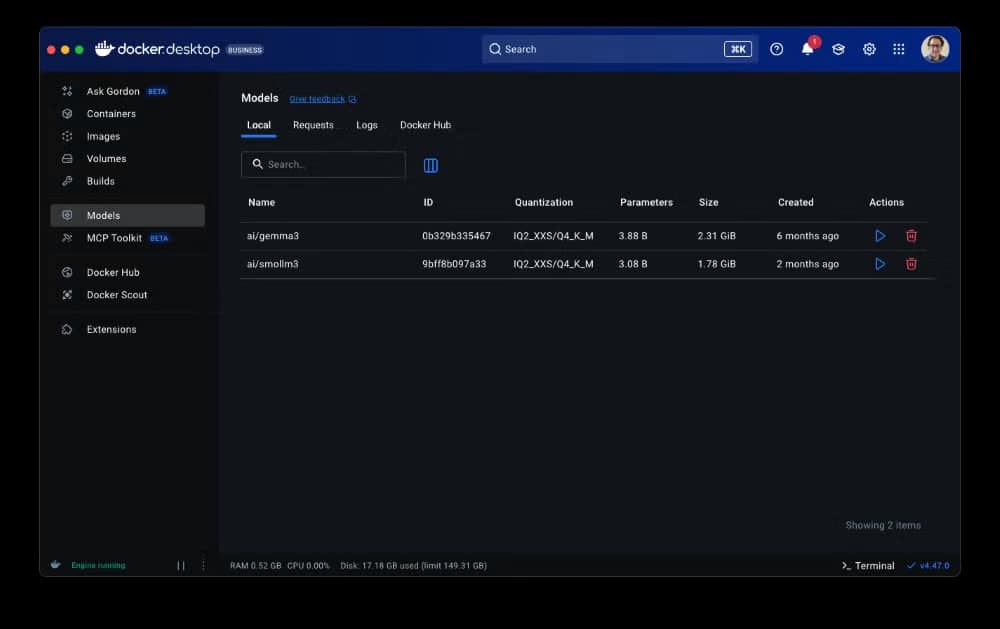

- Work from CLI and/or UI: either with Docker Desktop (guided onboarding and automatic resource handling) or Docker CE on Linux for automation and CI/CD.

This approach reduces friction in the inner development loop (trying prompts, tools, and agents on your laptop/GPU), speeds up testing in pipelines, and lets you decide at your own pace when to move to hybrid deployments (local + cloud).
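To make the OpenAI-style API concrete, here is a minimal sketch of a chat completion request using only the Python standard library. It assumes host-side TCP access is enabled and that the endpoint is reachable at `http://localhost:12434/engines/v1`; the port, the path, and the example model `ai/smollm2` are assumptions to check against your own setup and the official docs.

```python
import json
import urllib.request

# Assumption: host-side TCP access is enabled on port 12434.
# Verify the base URL against your own Model Runner settings.
BASE_URL = "http://localhost:12434/engines/v1"
# Assumption: an example model reference; use one you have already pulled.
MODEL = "ai/smollm2"


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, timeout=60):
    """Send the request and return the text of the first choice."""
    request = build_chat_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a model pulled and the endpoint enabled, `chat("Say hello in one short sentence.")` should return the model's reply. Because the request shape follows the OpenAI chat completions format, an existing OpenAI client pointed at the same base URL should work in a similar way.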

Eleven capabilities that have resonated with the community

- Built on llama.cpp: solid baseline today, with flexibility to add more engines in the future (e.g., MLX or vLLM).

- Cross-platform GPU acceleration: Apple Silicon on macOS, NVIDIA on Windows, and ARM/Qualcomm acceleration managed by Docker Desktop.

- Native Linux support: run with Docker CE, ideal for automation, CI/CD, and production workflows.

- Dual CLI/UI experience: from the CLI or the Models tab in Docker Desktop, with guided onboarding and automatic RAM/GPU allocation.

- Flexible distribution: pull/push OCI-packaged models via Docker Hub, or pull directly from Hugging Face (GGUF).

- Open source and free: lowers the barrier to entry and eases enterprise adoption.

- Isolation and control: runs in a sandboxed environment, with security options (enable/disable, host-side TCP, CORS) to fit corporate guardrails.

- Configurable inference: context length and llama.cpp flags, with more knobs coming.

- Built-in debugging: request/response tracing, token inspection, and library behavior visibility to optimize apps.

- Integrated with the Docker ecosystem: works with Docker Compose, Testcontainers, and Docker Offload (cloud offload).

- Curated model catalog: a selection on Docker Hub, ready for development, pipelines, staging, or even production.

Why it fits in the enterprise

DMR doesn’t require new security or compliance exceptions: it reuses private registries, policy-based access controls (e.g., Registry Access Management on Docker Hub), auditing, and traceability already deployed by platform teams. Packaging models as OCI artifacts also enables deterministic versioning, environment promotion, and air-gapped workflows when needed.

For teams already standardized on Compose and Testcontainers, DMR adds the inference link without breaking the “Docker way”: what you test locally becomes infrastructure as code and runs the same in CI/CD.
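As an illustration of how an application might pick up a model declared in Compose, the sketch below reads the endpoint and model name from environment variables. It assumes a service bound to a model named `llm`, for which Compose injects `LLM_URL` and `LLM_MODEL`; those variable names and the fallback values are assumptions, so confirm them against the Compose documentation for your version.

```python
import os

# Assumptions: fallback values for running outside Compose.
DEFAULT_URL = "http://localhost:12434/engines/v1"
DEFAULT_MODEL = "ai/smollm2"


def load_model_config(env=None):
    """Return the model endpoint and name from the environment.

    LLM_URL and LLM_MODEL are assumed variable names for a Compose
    model binding called "llm"; adjust them to match your setup.
    """
    env = os.environ if env is None else env
    base_url = env.get("LLM_URL", DEFAULT_URL).rstrip("/")
    model = env.get("LLM_MODEL", DEFAULT_MODEL)
    return {"base_url": base_url, "model": model}
```

Keeping the endpoint and model name out of the code means the same application can run on a laptop and in CI, with only the Compose file deciding which model it talks to.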

Where it shines: representative use cases

- Inner loop with local GPU: test prompts and tool calling with hardware acceleration on your laptop (Apple Silicon/NVIDIA) without wrestling with Python envs.

- Reproducible CI/CD: treat models as OCI artifacts, pin versions/hashes, and run deterministic tests with Testcontainers.

- Privacy and sensitive data: run inference inside the perimeter during design/testing; decide when to offload to the cloud later.

- Agentic apps: build agents that use DMR as the model backend and deploy them with Compose, integrating other Docker stack pieces easily.
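For the reproducible CI/CD case, pinning can be enforced with a small guard that rejects unpinned model references before a pipeline runs. The sketch below is a simplified parser for OCI-style references (`name[:tag][@digest]`), not a full implementation of the reference grammar; the helper names and the example references are hypothetical.

```python
def split_reference(reference):
    """Split an OCI-style reference into (repository, tag, digest).

    Simplified: a colon counts as a tag separator only when it appears
    after the last slash, so registry ports such as localhost:5000
    are not mistaken for tags.
    """
    name, _, digest = reference.partition("@")
    last_slash = name.rfind("/")
    last_colon = name.rfind(":")
    if last_colon > last_slash:
        return name[:last_colon], name[last_colon + 1:], digest
    return name, "", digest


def is_pinned(reference):
    """True if the reference has a digest or an explicit non-latest tag."""
    _, tag, digest = split_reference(reference)
    if digest:
        return True
    return bool(tag) and tag != "latest"


def require_pinned(references):
    """Raise ValueError listing any references that are not pinned."""
    unpinned = [ref for ref in references if not is_pinned(ref)]
    if unpinned:
        raise ValueError("Unpinned model references: " + ", ".join(unpinned))
```

Tags can be moved to point at different content, so a digest is the stricter pin; the tag check here is a pragmatic middle ground for teams that rely on versioned tags.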

What’s coming next

- Smoother UX: richer chat-like rendering in Desktop/CLI, multimodal in the UI (already available via API), improved debugging, and more configuration options.

- More inference engines: support for popular libraries/engines (e.g., MLX, vLLM) and advanced per-engine/model config.

- Independent deployment: ability to run Model Runner without Docker Engine for production-grade scenarios.

- Frictionless onboarding: step-by-step guides and sample apps with real use cases and best practices.

- Up-to-date catalog: new models landing on Docker Hub and becoming runnable via DMR as soon as they’re publicly released.

Limitations and trade-offs to keep in mind

- OpenAI API compatibility: already covers most cases; coverage will expand iteratively.

- Acceleration and drivers: review platform requirements (NVIDIA drivers on Windows, Apple Silicon on macOS, Linux setup) before standardizing.

- Ergonomics vs. isolation: local GPU usage and host bindings may require security adjustments that Docker plans to harden with deeper sandboxing.

How to get started (without reinventing your stack)

- Install/update Docker Desktop (or use Docker CE on Linux).

- Enable DMR from settings or the CLI.

- Pull a model (Docker Hub/OCI or Hugging Face/GGUF) and serve it via the OpenAI-compatible API.

- Declare it in Compose and bring it to CI/CD with Testcontainers for reproducibility.
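The pull step can also be scripted, which is handy in automation. The following sketch wraps the `docker model pull` command from Python; it assumes the `docker model` CLI plugin is installed and Model Runner is enabled. The two references in the usage note are examples only, and the `hf.co/` prefix for Hugging Face models is an assumption to verify against the official docs.

```python
import subprocess


def build_pull_command(reference):
    """Return the argv list for pulling a model, without running it."""
    if not reference or any(ch.isspace() for ch in reference):
        raise ValueError("model reference must be non-empty with no whitespace")
    return ["docker", "model", "pull", reference]


def pull_model(reference):
    """Run the pull and raise CalledProcessError if the CLI reports failure."""
    subprocess.run(build_pull_command(reference), check=True)
```

For example, `pull_model("ai/smollm2")` would pull an OCI-packaged model from Docker Hub, while `pull_model("hf.co/bartowski/Llama-3.2-1B-Instruct-GGUF")` would request a GGUF model from Hugging Face. Passing the command as a list rather than a shell string avoids quoting problems when references come from configuration.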

Frequently Asked Questions

Is DMR compatible with the OpenAI API?

Yes. It exposes OpenAI-compatible endpoints, allowing you to plug in existing apps without changing clients or SDKs.

Which formats and registries are supported for distributing models?

OCI artifacts (in Docker Hub or any OCI-compatible registry) and GGUF from Hugging Face, transparently repackaged as OCI.

Which platforms and GPUs does it work with?

macOS (Apple Silicon), Windows (NVIDIA GPU), and Linux (CPU/GPU with Docker CE). Check drivers and compatibility per OS.

What’s on the roadmap post-GA?

Better UX (including multimodal in the UI), richer debugging, more engines (MLX, vLLM), advanced configuration, and the option to deploy independently from Docker Engine.

Sources

- Docker — Docker Model Runner: Now Generally Available (GA): https://www.docker.com/blog/announcing-docker-model-runner-ga/

- Official docs — Docker Model Runner: https://docs.docker.com/ai/model-runner/

- Docker — Behind the scenes: How we designed Docker Model Runner and what’s next: https://www.docker.com/blog/how-we-designed-model-runner-and-whats-next/