Dropbase is drawing attention among developers with a clear promise: build real web applications quickly, with help from AI, without giving up control of the codebase or data. The open project positions itself as a practical way to ship internal tools, admin panels, billing dashboards and other line-of-business apps that connect to databases and third-party services. The pitch is straightforward: pair a drag-and-drop builder with an AI that generates code the team can review and edit, so the result is maintainable software rather than a throwaway prototype.

The target audience is explicit. Instead of chasing the broad low-code/no-code market, Dropbase speaks to engineers who like speed but insist on code quality and portability. Existing visual platforms can be productive in the early days, yet they often lock logic inside black boxes that are hard to migrate or extend later. Dropbase takes the opposite route. It uses AI to scaffold production-grade apps while keeping everything in plain sight: a built-in web framework, pre-built UI components and a runtime that lives inside the user’s repository.

An AI developer that doesn’t hide the code

The core of the product is an “AI developer” experience tuned for internal apps. Instead of generating isolated snippets, Dropbase emits full pieces of application code that developers can verify, modify and commit. This means teams can enforce their usual practices—code review, branching strategies, linters, CI pipelines—while enjoying faster time-to-value. If the business changes, the code can change with it. That balance between acceleration and governance is the theme that runs through the entire approach.

Local-first and self-hosted by default

A decisive difference is the self-hosted nature of the stack. Teams are expected to run Dropbase locally or inside their private infrastructure, not as a third-party managed service. That choice resonates with organizations that handle sensitive data or operate under strict compliance regimes: credentials, datasets and audit trails remain on infrastructure the company controls. It also avoids the common trade-off where speed today becomes vendor lock-in tomorrow.

There is a practical cost: setup is in the hands of the engineering team. The recommended path is Docker (Docker Desktop is explicitly encouraged on Apple Silicon machines). After cloning the repository, developers make the startup script executable and launch the server. From there, a local web console exposes the app builder so a first project can be created in a browser.
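Because setup is left to the engineering team, a small preflight script can catch the two issues mentioned above before anyone runs the startup script. The sketch below is a hypothetical helper, not something Dropbase ships. It only checks whether a docker executable is on the PATH and whether the machine is an Apple Silicon Mac, where Docker Desktop is the recommended option.

```python
import platform
import shutil
from typing import Callable, Optional


def preflight(
    system: Optional[str] = None,
    machine: Optional[str] = None,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> list[str]:
    """Return notes about the local Docker setup (hypothetical helper).

    system and machine default to the values reported by the platform module;
    pass them explicitly (and a fake `which`) to simulate another machine.
    """
    system = system if system is not None else platform.system()
    machine = machine if machine is not None else platform.machine()

    notes = []
    if which("docker") is None:
        notes.append("docker was not found on PATH: install Docker first")
    if system == "Darwin" and machine == "arm64":
        notes.append("Apple Silicon detected: Docker Desktop is recommended")
    return notes


if __name__ == "__main__":
    for note in preflight() or ["Docker found; no issues detected"]:
        print(note)
```

On a mixed fleet, the same script can run on laptops and servers alike; only the Apple Silicon note depends on the host.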

What it can build today

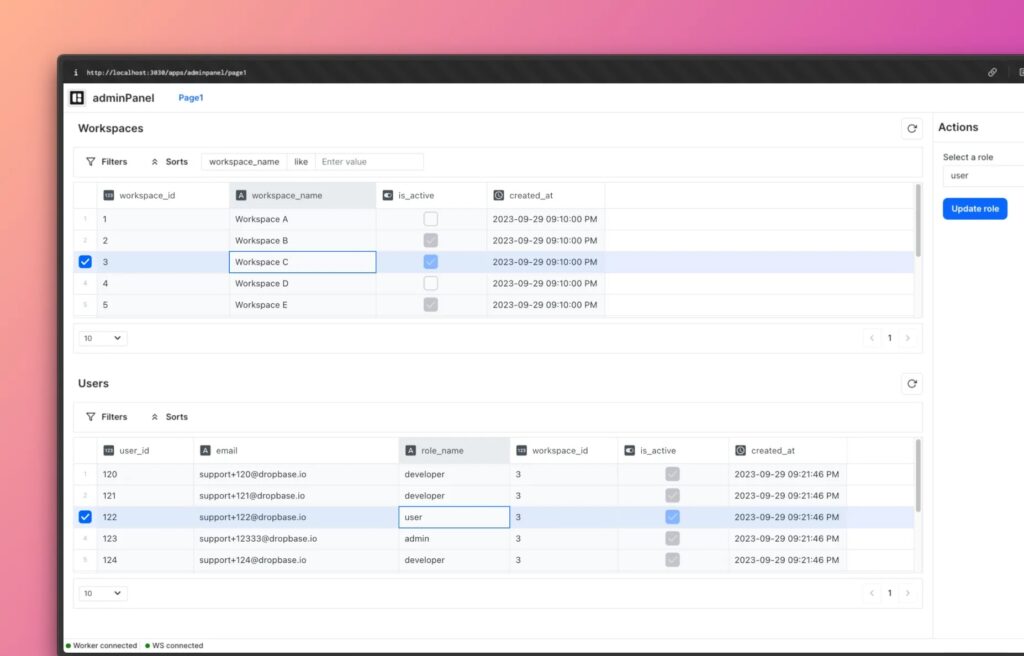

Dropbase showcases a set of concrete demos that mirror everyday needs in operations and back-office contexts:

- An Order Dashboard with integrations to Mailgun and Slack to retrieve customer orders and send updates via email or chat.

- A Salesforce Leads Editor with a spreadsheet-like interface to review and edit lead data.

- A HubSpot Contacts Editor for similar spreadsheet-style management of contacts.

- An Orders app with charts, emphasizing how to turn queries into visual dashboards for monitoring and reporting.

These examples illustrate the product’s sweet spot: reliable internal tools sitting on top of familiar data sources, where speed matters, yet traceability, permissions and maintainability matter too.

Built-in framework, pre-built components and Python extensibility

At runtime, Dropbase provides a web framework and a library of UI components so teams don’t have to assemble a front-end stack from scratch. Developers write or generate business logic with code and can import any needed libraries. The platform is grounded in Python, which acts as a lingua franca for extensions. Because the project embraces the Python ecosystem, teams can bring in packages from PyPI when adding data processing steps, API integrations or domain-specific functionality.
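As an illustration of the kind of plain Python that can sit behind a chart, the following sketch aggregates order totals with the standard library's sqlite3 module. The orders table, its columns and the function name are hypothetical, and no Dropbase-specific API is assumed; in a real app the same step could just as well use a PyPI package such as pandas.

```python
import sqlite3


def revenue_by_status(conn: sqlite3.Connection) -> dict[str, float]:
    """Sum order amounts per status, e.g. as the data series for a bar chart.

    Assumes a hypothetical table orders(id, status, amount).
    """
    rows = conn.execute(
        "SELECT status, SUM(amount) FROM orders GROUP BY status ORDER BY status"
    ).fetchall()
    return dict(rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders (status, amount) VALUES (?, ?)",
        [("paid", 40.0), ("paid", 60.0), ("refunded", 15.5)],
    )
    print(revenue_by_status(conn))  # {'paid': 100.0, 'refunded': 15.5}
    conn.close()
```

Keeping the query in an ordinary function means it can be unit-tested and reviewed like any other code, whether a developer or the AI wrote it.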

A notable design detail is that Dropbase resides in the user’s codebase. App folders are portable: they can be zipped and shared with other Dropbase users. Custom scripts and libraries can be imported naturally. This portability is important for long-term ownership: if the team ever decides to stop using the tool, the underlying code does not disappear behind a service wall.

How to get started

The initial setup follows a sequence that is easy to follow for any developer comfortable with Docker and Git:

- Prerequisites: install Docker. On Apple M-series chips, Docker Desktop is strongly recommended.

- Clone the repository:

    git clone https://github.com/DropbaseHQ/dropbase.git

- Start the server. Give execution permission to the startup script and launch it:

    chmod +x start.sh
    ./start.sh

- Create the first app. Open the builder at http://localhost:3030/apps in a browser and click Create app.

From that point, the developer can assemble UI elements, connect data sources and let the AI propose code that can be accepted as-is or adapted to the team’s standards.
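A script that opens the builder automatically may want to wait until the server actually responds. The helper below is hypothetical, not part of Dropbase: it polls the builder URL given in the setup steps using only the standard library, treats any HTTP response as "up", and bypasses environment proxy settings because the builder runs locally.

```python
import time
import urllib.error
import urllib.request


def wait_for_builder(url: str = "http://localhost:3030/apps",
                     timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll url until the server answers; return False if timeout expires."""
    # An empty ProxyHandler disables proxies taken from the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    deadline = time.monotonic() + timeout
    while True:
        try:
            with opener.open(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # Any HTTP status (even 404 or 500) means the server is listening.
            return True
        except OSError:
            # URLError, refused connections and socket timeouts derive from OSError.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Typical use is to call wait_for_builder() right after ./start.sh and only open the browser once it returns True.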

Enabling the AI features

AI assistance is optional and controlled by configuration. To turn it on, the team adds an OpenAI or Anthropic API key to the server.toml file and chooses a model (for example, gpt-4o). One operational note matters here: if the server also reads environment variables from the same file, those entries should appear before the LLM configuration block. The LLM settings are defined as a TOML table, and in TOML every key that follows a table header belongs to that table, so a variable placed after the block would be parsed as an LLM setting rather than as a top-level value. With the keys in place, the builder exposes AI-driven features that help scaffold code and components more quickly.

Worker configuration and secure integrations

Dropbase separates worker configuration from the web console by means of a worker.toml file. This is where teams define environment variables for the worker process (API keys and access tokens for services like Stripe or Mailgun) and where they register database sources. The naming convention for databases is descriptive: database.<type>.<nickname>. For instance, a PostgreSQL source nicknamed my_source would include host, database, username, password and port.

The worker file also supports simple key/value entries for tokens such as:

stripe_key="rk_test_123"

mailgun_api_key="abc123"

This structure helps the AI infer which credentials are available without exposing them beyond the self-hosted environment. It also keeps secrets in a plain configuration file managed alongside the rest of the infrastructure setup, so changes to which keys exist can go through the team's usual review process.

There are two additional operational details developers should note:

- When upgrading to server versions above 0.6.0, worker.toml must live in the workspace directory.

- The built-in demo expects a SQLite entry named database.sqlite.demo to be present in worker.toml. Without it, demo features may not load correctly.

Why local-first matters for many teams

The local-first, self-hosted posture is more than an architectural preference. It speaks to three recurring concerns in modern software operations:

- Privacy and compliance. Sensitive datasets—customer orders, financial records, medical metadata—often cannot leave the organization’s boundary. Running the builder and worker locally ensures that both data and credentials stay inside company-controlled networks.

- Operational transparency. Because the code is visible and lives in the repository, teams can audit what the AI generated, add tests, enforce coding standards and align the app with existing SRE practices.

- Portability and future-proofing. App folders are portable; logic isn’t trapped behind a vendor-defined backend. If priorities shift, the code can be refactored, moved or even rewritten with less friction than would be expected in a conventional no-code platform.

What it feels like to use for day-to-day internal tools

The workflows Dropbase highlights are the kind that consume countless engineering hours in most companies: build a table-like editor for operational teams, wire up a form with validation rules, expose a filtered view of orders with a chart, or push notifications into a Slack channel when a status changes. The platform’s promise is that all of this can be assembled with pre-built UI, Python hooks and declarative data sources, then accelerated by AI where it makes sense.

In practice, that yields a familiar pattern: engineers define data connections and permissions, the AI accelerates mundane scaffolding, and the team takes back control to finalize the edge cases that matter to the business. Over time, the payoff is not just raw speed; it is the maintainability that comes from owning the code that runs the operation.
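For the Slack case, one common mechanism is a Slack incoming webhook, which accepts a JSON body with a text field. The sketch below uses that mechanism as an assumption, since how Dropbase's demo talks to Slack is not described here; the function names and order snapshots are hypothetical, and the webhook URL would be stored like any other worker secret.

```python
import json
import urllib.request


def status_changes(old: dict[str, str], new: dict[str, str]) -> list[tuple[str, str, str]]:
    """Compare two {order_id: status} snapshots and list (id, before, after)."""
    return [
        (order_id, old[order_id], status)
        for order_id, status in sorted(new.items())
        if order_id in old and old[order_id] != status
    ]


def slack_payload(changes: list[tuple[str, str, str]]) -> dict:
    """Build an incoming-webhook body with one line per changed order."""
    lines = [f"Order {oid}: {before} -> {after}" for oid, before, after in changes]
    return {"text": "\n".join(lines)}


def post_to_slack(webhook_url: str, payload: dict) -> None:
    """POST the JSON payload to a Slack incoming webhook URL."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        response.read()
```

Splitting change detection from delivery keeps the interesting logic testable without network access; only post_to_slack touches the outside world.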

Practical considerations for adoption

As with any self-hosted tool, success depends on a few basics:

- Reliable Docker environment. Ensuring consistent local or server-based Docker setups avoids false starts, especially on mixed fleets (e.g., Apple Silicon laptops and x86 servers).

- Credential hygiene. Keeping server.toml and worker.toml tidy, with secrets correctly scoped and documented, reduces operational surprises.

- Version control discipline. Treating the generated code like any other application (branches, pull requests, tests) keeps quality high while still reaping AI's speed advantages.

- Documentation for hand-off. Because Dropbase is designed for team ownership, documenting the structure of each app folder, the registered data sources and the purpose of worker variables smooths onboarding for new engineers.

None of this is exotic for professional teams, and that is the point: Dropbase aims to fit the way developers already work instead of inventing a separate universe of opaque configuration screens.

The bottom line

Dropbase positions itself where speed and stewardship meet. It offers an AI-assisted path to shipping internal tools quickly while preserving the fundamentals that keep systems healthy over years: readable code, version control, auditable configuration and data that never has to leave the company’s environment. For teams wary of traditional no-code lock-in, its combination of AI + Python + self-hosting is a compelling proposition. If the job at hand is to deliver useful internal software fast—without surrendering long-term control—this is a platform that deserves a serious look.

Frequently Asked Questions

How do you enable AI features in a self-hosted Dropbase setup?

Add an OpenAI or Anthropic API key and the preferred model to server.toml (for example, gpt-4o). Ensure any environment variables in the same file appear before the LLM block: the block is a TOML table, and keys placed after its header would be parsed as part of it. Once configured, the builder exposes AI-assisted code generation.

What are the minimum steps to get a local instance running and create a first app?

Install Docker (Docker Desktop is recommended on Apple M-series machines), clone the repository, make start.sh executable and run it. Then open http://localhost:3030/apps and click Create app to start building.

How are databases and third-party APIs connected without exposing secrets?

Use worker.toml to define tokens (for example, stripe_key, mailgun_api_key) and register data sources following the database.<type>.<nickname> pattern with host, database, username, password and port. Keep the file in the workspace directory on versions above 0.6.0. For the built-in demo, include a database.sqlite.demo entry.

What kinds of internal apps see the most benefit from Dropbase today?

Spreadsheet-style data editors for operations teams, dashboards with charts fed by SQL queries, and admin panels that combine validation, filtering and role-based permissions. Typical use cases include customer orders, CRM leads, contact databases and back-office workflows that need speed, traceability and maintainable code.