Every website on the Internet receives bot traffic. While some bots, such as those from Google or Bing, are essential for search engine visibility, others can become a real headache. So-called “bad bots,” or malicious bots, can slow down your site, consume excessive bandwidth, and probe it for security vulnerabilities to exploit.

The situation has become more complicated in recent years with the rise of artificial intelligence, which has brought a new wave of scraping bots that collect content en masse to train machine learning models.

Identifying and Distinguishing Good Bots from Bad Bots

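The first crucial step in protecting your website is to identify which traffic comes from bots. Server access logs, analytics tools such as Google Analytics, and specialized monitoring services can help you spot suspicious patterns: repeated access from the same IP, unusually high request rates, or behavior that doesn’t match real users. Keep in mind that Google Analytics only records visitors that run its JavaScript tag, so many bots never show up there; your server logs are the more complete record.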
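As a rough illustration of the “repeated access from the same IP” check, the Python sketch below counts requests per client IP in access-log lines written in the Common or Combined Log Format (both of which Apache and Nginx can produce) and reports IPs above a threshold. The function name `suspicious_ips` and the default threshold are assumptions for illustration, not a standard. A high count alone doesn’t prove a visitor is a bot, so treat the output as a list of candidates to investigate.

```python
import re
from collections import Counter

# Matches the start of a Common/Combined Log Format line:
# client IP, identity, user, [timestamp], "request line", status, response size.
LOG_LINE = re.compile(r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} \S+')


def suspicious_ips(lines, max_requests=100):
    """Return (ip, count) pairs for IPs with more than max_requests requests, busiest first.

    max_requests is a hypothetical example value applied to the whole log you pass in.
    """
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match:  # silently skip lines in other formats
            counts[match.group("ip")] += 1
    return [(ip, n) for ip, n in counts.most_common() if n > max_requests]


sample = [
    '203.0.113.7 - - [10/Oct/2024:13:55:36 +0000] "GET /page HTTP/1.1" 200 512 "-" "Mozilla/5.0"',
] * 5 + [
    '198.51.100.2 - - [10/Oct/2024:13:55:40 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0"',
]
print(suspicious_ips(sample, max_requests=3))  # [('203.0.113.7', 5)]
```

Because the function accepts any iterable of lines, you can pass it an open log file directly; the threshold should be tuned to the time span and typical traffic of your own logs.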

What Methods Exist to Block Bots?

1. robots.txt: A First Filter, but Not Enough

The robots.txt file is the first line of defense, though its effectiveness is limited. It tells crawlers which areas of your site they may or may not crawl, but compliance is entirely voluntary. Well-behaved crawlers such as Googlebot and Bingbot respect it, while malicious bots usually ignore it, so it cannot prevent attacks or scraping on its own.
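To see why robots.txt is only advisory, consider how a well-behaved crawler uses it. Python’s standard `urllib.robotparser` module parses the rules and answers whether a given user agent may fetch a URL. The sketch below uses an illustrative robots.txt that shuts out GPTBot (the user-agent token OpenAI publishes for its crawler) and keeps every other bot away from a hypothetical `/admin/` path. A compliant bot runs this check before each request; a malicious one simply skips it, and nothing on the server enforces the answer.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: block GPTBot entirely and keep all other
# crawlers out of a hypothetical /admin/ area.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A polite crawler asks before fetching; nothing forces a bad bot to ask.
print(parser.can_fetch("GPTBot", "https://example.com/blog/post"))     # False
print(parser.can_fetch("Googlebot", "https://example.com/blog/post"))  # True
print(parser.can_fetch("Googlebot", "https://example.com/admin/"))     # False
```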

2. .htaccess: Direct Blocking with Limitations

On Apache servers, the .htaccess file allows you to block access from specific IPs or suspicious user agents. This method works well for specific cases, but it’s impractical against bots that constantly change their IP or spoof their user agent, for example by posing as a regular browser. It also requires ongoing maintenance and some technical knowledge.
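The matching logic behind IP and user-agent blocking can be sketched in Python as follows. The network ranges are reserved documentation blocks (RFC 5737) and `badbot`/`evilscraper` are placeholder names, so the entire deny list is hypothetical; on Apache the equivalent rules would live in .htaccess rather than in application code.

```python
import ipaddress
import re

# Hypothetical deny lists: documentation-only networks and placeholder bot names.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]
BLOCKED_AGENTS = re.compile(r"badbot|evilscraper", re.IGNORECASE)


def is_blocked(ip: str, user_agent: str) -> bool:
    """Return True if the client IP or its user agent is on a deny list."""
    address = ipaddress.ip_address(ip)
    # An IPv6 address is never "in" an IPv4 network, so mixed versions are safe.
    if any(address in network for network in BLOCKED_NETWORKS):
        return True
    return bool(BLOCKED_AGENTS.search(user_agent))


print(is_blocked("203.0.113.50", "Mozilla/5.0"))      # True: listed network
print(is_blocked("192.0.2.10", "BadBot/2.1"))         # True: listed agent
print(is_blocked("192.0.2.10", "Mozilla/5.0 (X11)"))  # False: new IP, browser-like agent
```

The last call shows the limitation described above: once the same bot moves to an unlisted IP and sends a browser-like user agent, a static list lets it straight through.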

3. Firewalls and WAF: Advanced Protection

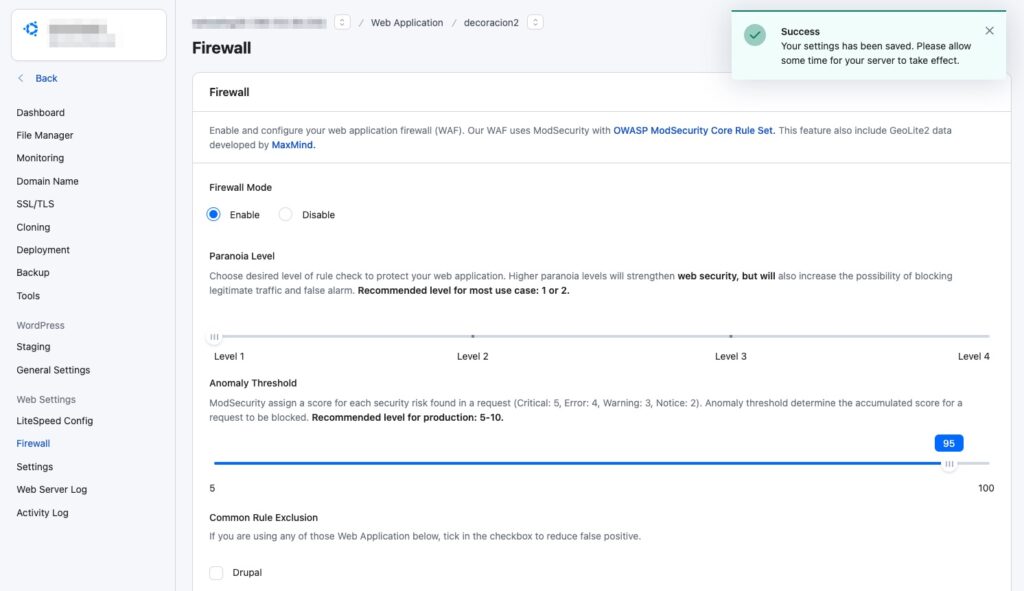

The most robust option is to implement a Web Application Firewall (WAF). Solutions like ModSecurity (included with RunCloud) let you filter traffic with customizable rules, detect abnormal behavior, and block malicious requests before they reach your application. Cloud platforms like Cloudflare go a step further with network-level firewalls that can stop bots before they even reach your hosting.

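With RunCloud, you simply open the control panel, select the “Firewall” section, and adjust parameters such as Paranoia Level and Anomaly Threshold. A higher Paranoia Level enables stricter rules at the cost of more false positives, while a lower Anomaly Threshold blocks suspicious requests sooner, so it’s best to tighten these settings gradually and watch for legitimate traffic being blocked. You can also create specific rules by IP, country, cookie, or user agent.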
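To make Paranoia Level and Anomaly Threshold more concrete, the sketch below models the anomaly-scoring approach of the OWASP Core Rule Set (CRS), a widely used ModSecurity rule set that defines both concepts. Only rules at or below the configured paranoia level are evaluated, each matching rule adds points to the request’s score, and the request is blocked once the score reaches the threshold. The rules, scores, and function names are invented for illustration and do not reflect RunCloud’s or CRS’s actual rules.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical request representation: header names mapped to values.
Request = Dict[str, str]


@dataclass
class Rule:
    name: str
    paranoia_level: int               # rule only runs at this level or higher
    score: int                        # points added to the anomaly score on a match
    matches: Callable[[Request], bool]


# Illustrative rules only; real rule sets are far larger and more precise.
RULES: List[Rule] = [
    Rule("missing user agent", 1, 2, lambda r: not r.get("User-Agent")),
    Rule("scripted client", 1, 3, lambda r: "python-requests" in r.get("User-Agent", "").lower()),
    Rule("no Accept header", 2, 2, lambda r: "Accept" not in r),
]


def anomaly_score(request: Request, paranoia_level: int) -> int:
    """Sum the scores of every enabled rule that matches the request."""
    return sum(
        rule.score
        for rule in RULES
        if rule.paranoia_level <= paranoia_level and rule.matches(request)
    )


def should_block(request: Request, paranoia_level: int = 1, threshold: int = 5) -> bool:
    """Block once the accumulated score reaches the anomaly threshold."""
    return anomaly_score(request, paranoia_level) >= threshold


scripted = {"User-Agent": "python-requests/2.31"}
print(anomaly_score(scripted, paranoia_level=1))  # 3
print(should_block(scripted, paranoia_level=1))   # False
print(should_block(scripted, paranoia_level=2))   # True: the level-2 rule adds 2 points
```

Raising the paranoia level from 1 to 2 enables one extra rule and pushes the scripted client’s score from 3 to 5, which is enough to block it. The same extra rule could also catch an unusual but legitimate client, which is why each step up is worth testing against real traffic.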

4. Other Recommended Measures

- Regularly reviewing logs to detect new threats and attack patterns.

- Using blacklists and automated rules to block known bots.

- Regularly updating firewall rules, as bots evolve and adopt new evasion strategies.
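As one example of an automated rule, the sketch below flags any client that sends more than a set number of requests within a sliding time window and adds it to a blocklist. The class name, the limits, and the choice of a permanent in-memory blocklist are all assumptions for illustration; a production setup would usually expire entries and keep this state in the firewall or another shared store.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Hypothetical helper: block clients exceeding max_requests per window_seconds.

    Timestamps are passed in explicitly to keep the logic testable; a live
    server would supply time.monotonic() instead.
    """

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.hits = defaultdict(deque)  # client IP -> timestamps of recent requests
        self.blocked = set()            # IPs caught by the rule

    def allow(self, ip: str, now: float) -> bool:
        if ip in self.blocked:
            return False
        window = self.hits[ip]
        # Discard timestamps that are window_seconds old or older.
        while window and now - window[0] >= self.window_seconds:
            window.popleft()
        window.append(now)
        if len(window) > self.max_requests:
            self.blocked.add(ip)  # stays blocked; real setups would expire this
            return False
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=10.0)
print([limiter.allow("203.0.113.7", t) for t in (0, 1, 2, 3)])  # [True, True, True, False]
```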

What Not to Block

It’s essential not to block the main search engine bots (Googlebot, Bingbot, etc.), as this would severely impact your site’s visibility in search engines. The challenge is to find the right balance between protection and accessibility.
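Because bad bots often claim to be Googlebot or Bingbot in their user agent, the user agent alone can’t tell you which requests to let through. Google and Bing both document a DNS-based check instead: reverse-resolve the client IP, confirm the hostname belongs to the search engine’s domain, then forward-resolve that hostname and confirm it maps back to the same IP. The sketch below follows that procedure. The domain list reflects the search engines’ published guidance as best understood here and should be checked against their current documentation, and the lookup functions are injectable so the logic can be tested without network access.

```python
import socket
from typing import Callable, List, Tuple

Lookup = Callable[[str], Tuple[str, List[str], List[str]]]

# Crawler domains per the search engines' verification guidance
# (Google: googlebot.com, google.com; Bing: search.msn.com). Verify before relying on it.
TRUSTED_CRAWLER_DOMAINS: Tuple[str, ...] = ("googlebot.com", "google.com", "search.msn.com")


def host_in_domains(host: str, domains: Tuple[str, ...]) -> bool:
    """True if host equals a domain or is a subdomain of it (dot-boundary match)."""
    host = host.rstrip(".").lower()
    return any(host == d or host.endswith("." + d) for d in domains)


def is_verified_crawler(
    ip: str,
    reverse_lookup: Lookup = socket.gethostbyaddr,
    forward_lookup: Lookup = socket.gethostbyname_ex,
) -> bool:
    """Reverse-resolve the IP, check its domain, then confirm the forward lookup matches."""
    try:
        host, _, _ = reverse_lookup(ip)
        if not host_in_domains(host, TRUSTED_CRAWLER_DOMAINS):
            return False
        _, _, addresses = forward_lookup(host)
    except OSError:  # socket.herror and socket.gaierror are OSError subclasses
        return False
    # The forward check defeats forged reverse DNS records.
    return ip in addresses
```

Note that `socket.gethostbyname_ex` only returns IPv4 addresses, so this sketch will not verify crawlers connecting over IPv6; handling them would require `socket.getaddrinfo`. DNS lookups are also slow, so in practice you would cache the result per IP.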

Conclusion

Malicious bot traffic is a reality that can affect any website, regardless of its size. Combining an advanced firewall with regular activity reviews and defenses that adapt to changing threats is the best way to protect your resources and keep your site available.

As artificial intelligence advances, it’s essential to stay up to date and take a proactive approach to web security. That is how you keep unwanted bots in check without compromising user experience or search visibility.