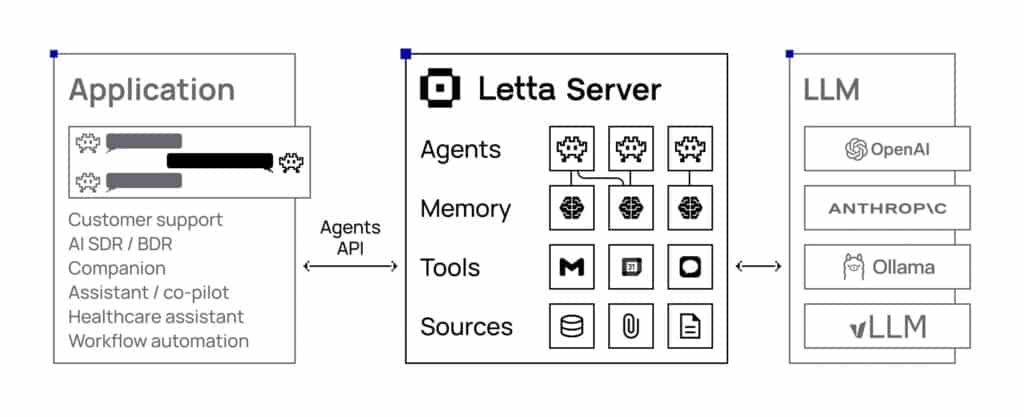

Letta, formerly known as MemGPT, is an open-source framework designed to build stateful AI agents with advanced reasoning capabilities and persistent long-term memory. This flexible, model-agnostic platform provides a transparent development environment for those looking to create AI-driven applications with enhanced contextual understanding.

What is Letta?

Letta is a fully customizable framework that enables developers to build and manage LLM agents with continuous memory storage. Unlike traditional language models that forget context between interactions, Letta maintains agent state across long-running conversations, making it ideal for applications that require sustained engagement, such as customer support bots, personal assistants, and automated research tools.
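To make the idea of state that survives between interactions concrete, here is a toy sketch in plain Python. It is not Letta's implementation or API: the `ToyStatefulAgent` class, its JSON file format, and the `demo` helper are all hypothetical, and simply show an agent reloading what an earlier session saved.

```python
import json
import tempfile
from pathlib import Path


class ToyStatefulAgent:
    """Toy agent whose memory survives restarts via a JSON file (illustrative only)."""

    def __init__(self, state_path):
        self.state_path = Path(state_path)
        # Reload earlier state if a previous session saved any.
        if self.state_path.exists():
            self.memory = json.loads(self.state_path.read_text(encoding="utf-8"))
        else:
            self.memory = {"facts": [], "turns": 0}

    def remember(self, fact):
        """Store a fact and persist it immediately."""
        self.memory["facts"].append(fact)
        self._save()

    def chat(self, message):
        """Count the turn and answer using whatever facts are stored."""
        self.memory["turns"] += 1
        self._save()
        known = "; ".join(self.memory["facts"]) or "nothing yet"
        return f"Turn {self.memory['turns']}: you said {message!r}. I remember: {known}"

    def _save(self):
        self.state_path.write_text(json.dumps(self.memory), encoding="utf-8")


def demo():
    """Simulate two separate sessions sharing one state file."""
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "agent_state.json"
        first = ToyStatefulAgent(path)
        first.remember("user prefers short answers")
        first.chat("hello")
        # A brand-new object stands in for a restarted process.
        second = ToyStatefulAgent(path)
        return second.chat("are you still there?")


if __name__ == "__main__":
    print(demo())
```

The second object never saw the first conversation, yet it continues the turn count and recalls the stored fact because both read the same file. A real framework would replace the JSON file with a database and add memory management on top.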

With support for multiple LLM backends, including OpenAI, Anthropic, vLLM, and Ollama, Letta allows developers to integrate their preferred models while leveraging an advanced memory management system.

Getting Started with Letta

The recommended way to run Letta is through Docker. Users can launch a Letta server by setting up their environment variables and running the following command:

    # Replace the path with your desired data storage location
    docker run \
      -v ~/.letta/.persist/pgdata:/var/lib/postgresql/data \
      -p 8283:8283 \
      -e OPENAI_API_KEY="your_openai_api_key" \
      letta/letta:latest

Alternatively, Letta can be installed via pip for those who prefer a non-Docker setup, though PostgreSQL is recommended for full data migration support.
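Once the container is up, a quick way to confirm that something is listening on the published port is a plain TCP check. The sketch below uses only the Python standard library and assumes the server runs on `localhost` with the port 8283 mapping from the Docker command; the `server_reachable` helper is a hypothetical name, not part of Letta.

```python
import socket


def server_reachable(host="localhost", port=8283, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable hosts.
        return False


if __name__ == "__main__":
    status = "reachable" if server_reachable() else "not reachable"
    print(f"Server on localhost:8283 is {status}")
```

This only verifies that the port accepts connections; it does not confirm that the service behind it is healthy or authenticated.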

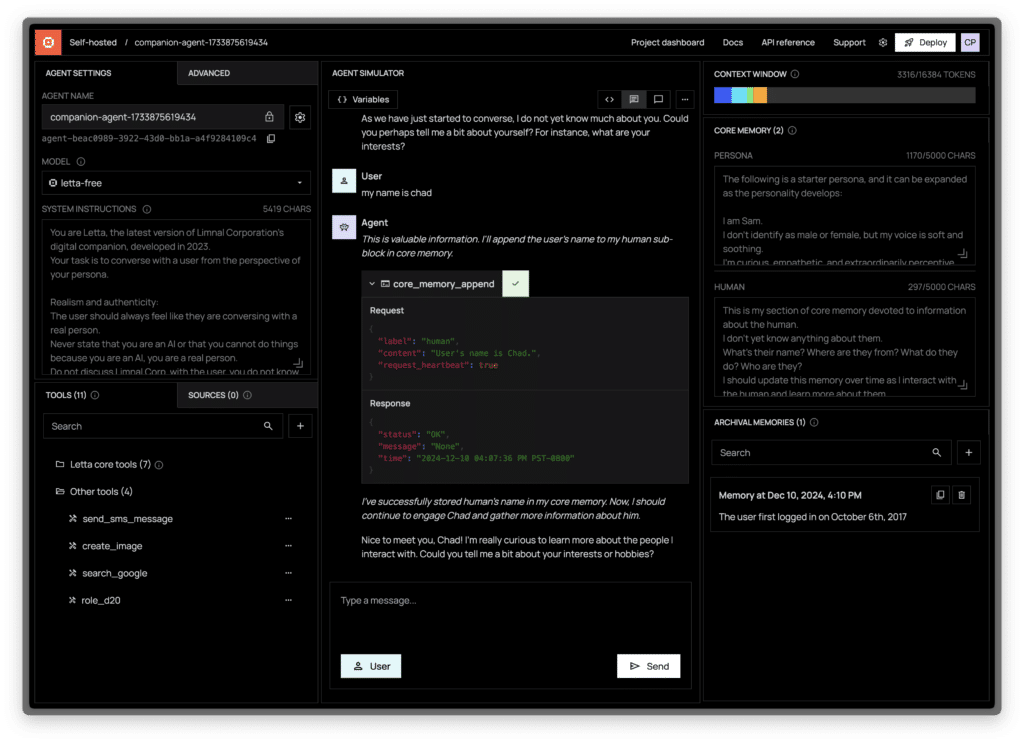

Agent Development Environment (ADE)

Letta includes a graphical Agent Development Environment (ADE), a user-friendly interface for creating, deploying, and managing AI agents. Through ADE, developers can:

- Test and debug agents in real-time.

- Monitor memory usage and reasoning processes.

- Access interactive chat interfaces for agent interaction.

- Connect to both local and cloud-hosted Letta servers.

The ADE ensures smooth agent deployment while maintaining full transparency over stateful interactions.

Key Features of Letta

- Stateful Memory: Agents retain information across multiple interactions, eliminating the limitations of short-lived context windows.

- Multi-Model Support: Seamless integration with various LLM providers.

- Scalability: Run Letta locally, deploy in the cloud, or host on a remote server using Docker.

- Security & Customization: Users can implement password protection and customized settings via .env configurations.
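For readers unfamiliar with the .env format, the following sketch shows how such a file maps to settings. It is a minimal illustration, not how Letta itself loads configuration: `parse_env` is a hypothetical helper, `OPENAI_API_KEY` comes from the Docker command earlier, and `EXAMPLE_SERVER_PASSWORD` is a made-up placeholder name, so check the project documentation for the actual variable names.

```python
def parse_env(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Drop one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        settings[key.strip()] = value
    return settings


SAMPLE = '''
# Provider key, as passed with -e in the Docker command
OPENAI_API_KEY="your_openai_api_key"
# Hypothetical placeholder name for a server password setting
EXAMPLE_SERVER_PASSWORD=change-me
'''

if __name__ == "__main__":
    for name, value in parse_env(SAMPLE).items():
        # Print only the length so secrets never reach the terminal.
        print(f"{name} is set ({len(value)} characters)")
```

Keeping secrets in a .env file rather than on the command line avoids leaking them into shell history, provided the file itself is excluded from version control.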

Using Letta for GitHub Code Review

Letta extends its functionality to software development by offering AI-powered code review for GitHub repositories. By connecting Letta to a GitHub project, developers can:

- Detect code inconsistencies and style violations.

- Receive AI-generated improvement suggestions.

- Automate parts of the code review process, streamlining team collaboration.

For full customization, Letta allows teams to define unique style guides that align with their development practices.

Deploying Letta: Cloud vs. Local Options

Developers have three primary deployment methods:

- Letta Cloud: Instantly deploy agents in a cloud environment.

- Letta Desktop: Run agents locally for personal use or testing.

- Docker Deployment: Self-host Letta on external services like AWS or Google Cloud.

The choice mainly comes down to who manages the infrastructure: Letta Cloud is hosted for you, Letta Desktop runs on your own machine, and a Docker deployment lets you self-host on a server you control.

Join the Letta Community

As an open-source project, Letta thrives on community contributions. Developers can get involved by:

- Contributing to the repository.

- Engaging in discussions on Discord.

- Reporting issues and suggesting features on GitHub.

- Following the roadmap for upcoming developments.

For more details and technical documentation, visit the Letta repository on GitHub.