As the generative AI video space heats up, a new contender has emerged from China, and it’s open source. MAGI-1, developed by Sand AI, introduces an autoregressive architecture that is already competitive with top-tier closed models such as OpenAI’s Sora, Google’s VideoPoet, and Kuaishou’s Kling.

With 24 billion parameters, streaming inference, and scene-level control through chunk-wise prompting, MAGI-1 is more than a research demo: it is a framework built for scalable, real-time video generation. Even more compelling, the code and pretrained weights are open source and already available on GitHub.

What Makes MAGI-1 Different?

- Autoregressive chunk-based generation for improved temporal consistency

- 24-frame block denoising for better scalability and parallelism

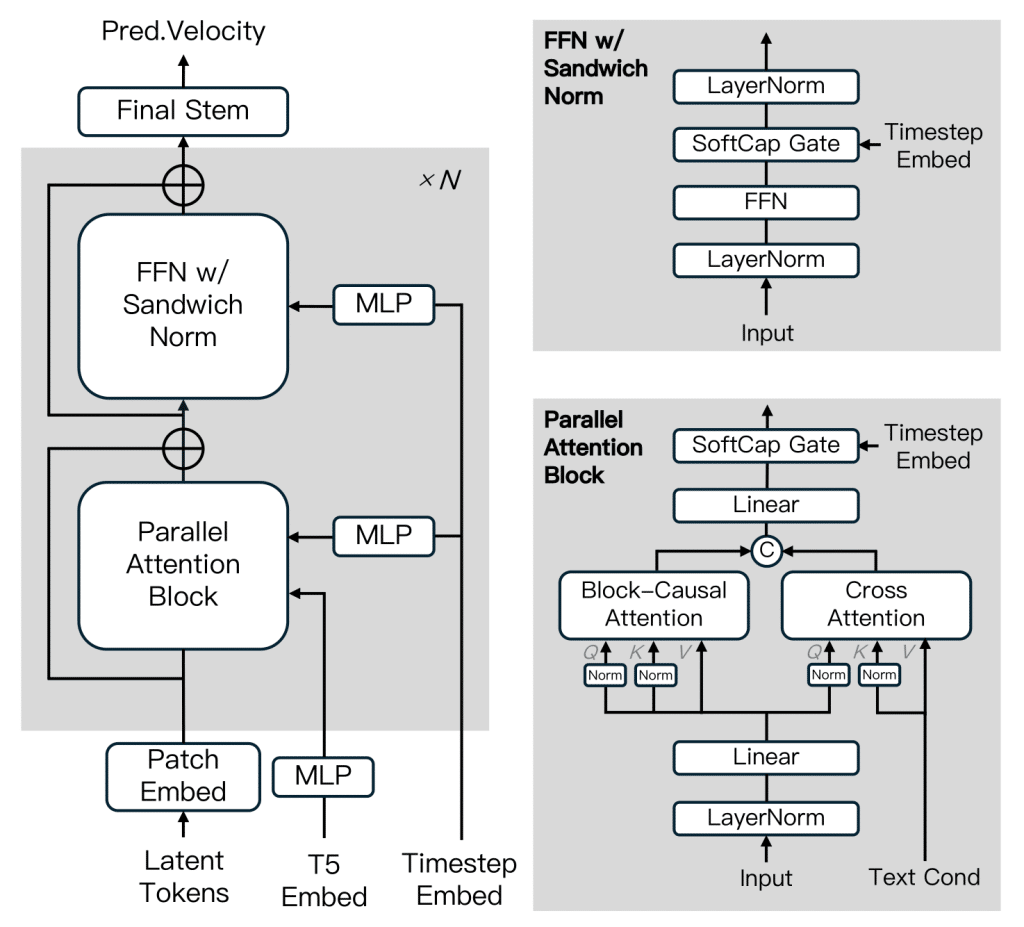

- Diffusion Transformer architecture optimized for speed, stability, and detail

- Chunk-wise prompts to control storytelling and scene transitions

- Streaming-ready pipeline for real-time inference applications

- Open-source code + pretrained models under Apache 2.0 license
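To make the chunk-wise idea concrete, here is a minimal planning sketch in Python. It is not MAGI-1's API: `Chunk`, `plan_chunks`, and the rule of reusing the last prompt are hypothetical names and choices made for illustration. It only shows how a clip could be split into 24-frame chunks, each with its own prompt and each conditioned on what came before; the actual denoising is left out.

```python
from dataclasses import dataclass
from typing import List, Optional

CHUNK_FRAMES = 24  # chunk length taken from the feature list above


@dataclass
class Chunk:
    index: int
    prompt: str
    start_frame: int
    end_frame: int  # exclusive
    previous: Optional[int]  # index of the chunk it follows (None for the first)


def plan_chunks(total_frames: int, prompts: List[str]) -> List[Chunk]:
    """Split a clip into fixed-size chunks and assign one prompt per chunk.

    Assumption for this sketch: if there are fewer prompts than chunks,
    the last prompt is reused for the remaining chunks.
    """
    if total_frames <= 0 or not prompts:
        raise ValueError("need a positive frame count and at least one prompt")
    n_chunks = -(-total_frames // CHUNK_FRAMES)  # ceiling division
    chunks = []
    for i in range(n_chunks):
        start = i * CHUNK_FRAMES
        end = min(start + CHUNK_FRAMES, total_frames)
        prompt = prompts[min(i, len(prompts) - 1)]
        chunks.append(Chunk(i, prompt, start, end, i - 1 if i > 0 else None))
    return chunks


plan = plan_chunks(
    60,
    ["a robot enters the alley", "the robot looks up at the neon signs"],
)
for chunk in plan:
    print(chunk)
```

A real autoregressive pipeline would generate each chunk in order and feed earlier chunks back in as context, which is what allows frames to be streamed out before the whole clip is finished.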

Technical Comparison: MAGI-1 vs Sora vs Kling vs VideoPoet vs Wan-2.1

| Model | Open Source | Architecture | Scene Control | Physics Accuracy (Physics-IQ) | Motion Quality | Streaming Inference |
|---|---|---|---|---|---|---|
| MAGI-1 | ✅ Yes | Autoregressive Diffusion Transformer | ✅ Chunk-wise prompts | 🟢 High (30.23 I2V / 56.02 V2V) | 🟢 Excellent | ✅ Supported |
| Sora | ❌ No | Diffusion + Transformer | ❌ Minimal | 🔴 Low (10.00) | 🟡 Good | ❌ Not supported |
| Kling | ❌ No | Transformer-based | 🟡 Moderate | 🟡 Mid (23.64) | 🟢 Good | 🟡 Partially supported |
| VideoPoet | ❌ No | Mixed (Transformer + Autoregressive) | 🟡 Limited | 🟠 Below Average (20.30) | 🟡 Variable | 🟡 Partially supported |
| Wan-2.1 | ✅ Yes | Diffusion-based | ❌ None | 🟠 Below Average (20.89) | 🟡 Basic | ❌ Not supported |

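Note: Physics-IQ scores (higher is better) measure how accurately a model continues a video in time and space, which matters for realistic physics-based animation and scene dynamics. MAGI-1 is scored twice, once with image-to-video (I2V) conditioning and once with video-to-video (V2V) conditioning.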
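The gaps are easier to read as numbers. The short sketch below ranks the Physics-IQ scores from the table and prints each model's margin over the best non-MAGI entry. The scores are copied from the table; the table does not say which conditioning mode the other models were scored in, so the V2V comparison in particular may not be like-for-like.

```python
# Physics-IQ scores as listed in the comparison table.
scores = {
    "MAGI-1 (V2V)": 56.02,
    "MAGI-1 (I2V)": 30.23,
    "Kling": 23.64,
    "Wan-2.1": 20.89,
    "VideoPoet": 20.30,
    "Sora": 10.00,
}

# Sort from highest to lowest score.
ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Best score among models that are not MAGI-1.
best_other = max(v for name, v in scores.items() if not name.startswith("MAGI-1"))

for name, score in ranked:
    print(f"{name:<14} {score:6.2f}  ({score - best_other:+.2f} vs best non-MAGI)")
```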

Quick-Start Guide: Running MAGI-1 Locally or via Docker

✅ Recommended Setup: Docker (Fastest)

    docker pull sandai/magi:latest

    docker run -it --gpus all --privileged --shm-size=32g \
      --name magi --net=host --ipc=host \
      --ulimit memlock=-1 --ulimit stack=67108864 \
      sandai/magi:latest /bin/bash

🛠️ From Source (with Conda)

    # 1. Create environment
    conda create -n magi python=3.10.12
    conda activate magi

    # 2. Install PyTorch
    conda install pytorch=2.4.0 torchvision=0.19.0 torchaudio=2.4.0 pytorch-cuda=12.4 -c pytorch -c nvidia

    # 3. Install dependencies (run from the root of the MAGI-1 repository)
    pip install -r requirements.txt

    # 4. Install FFmpeg
    conda install -c conda-forge ffmpeg=4.4

    # 5. Install custom attention layer
    git clone https://github.com/SandAI-org/MagiAttention.git
    cd MagiAttention
    git submodule update --init --recursive
    pip install --no-build-isolation .

🎬 Run Inference (Text → Video / Image → Video)
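Before launching a run, it may be worth confirming that the source install left a usable environment. The following is a small, hypothetical helper (`check_environment` is not part of MAGI-1) that only checks whether the PyTorch packages and the `ffmpeg` binary from the steps above can be found, and whether PyTorch sees a CUDA device.

```python
import importlib.util
import shutil
from typing import Dict

# Packages installed in step 2 of the source setup.
PACKAGES = ("torch", "torchvision", "torchaudio")


def check_environment() -> Dict[str, bool]:
    """Report which prerequisites are visible from the current interpreter."""
    status = {name: importlib.util.find_spec(name) is not None for name in PACKAGES}
    status["ffmpeg"] = shutil.which("ffmpeg") is not None

    cuda = False
    if status["torch"]:
        import torch  # imported lazily so the check still runs without PyTorch

        cuda = torch.cuda.is_available()
    status["cuda"] = cuda
    return status


status = check_environment()
for name, ok in status.items():
    print(f"{name:<12} {'found' if ok else 'MISSING'}")
```

Run it inside the activated `magi` environment; any `MISSING` line points back to the corresponding install step.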

Image-to-Video (i2v):

    bash example/4.5B/run.sh \
      --mode i2v \
      --prompt "A futuristic robot walking through a neon-lit alley" \
      --image_path example/assets/image.jpeg \
      --output_path results/robot_neon.mp4

Text-to-Video (t2v):

    bash example/4.5B/run.sh \
      --mode t2v \
      --prompt "A majestic eagle flying over snowy mountains at sunset" \
      --output_path results/eagle_sunset.mp4

Deployment Notes
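For scripted or batch deployments, the two commands above can be assembled programmatically instead of edited by hand. The sketch below assumes `run.sh` accepts the flags exactly as shown in this article; `build_command` is a hypothetical helper, not something shipped with MAGI-1, and it only builds the argument list without executing it.

```python
import shlex
from typing import List, Optional

RUN_SCRIPT = "example/4.5B/run.sh"  # script path as used in the commands above


def build_command(
    mode: str,
    prompt: str,
    output_path: str,
    image_path: Optional[str] = None,
) -> List[str]:
    """Build the argument list for one inference run (flags as in the article)."""
    if mode not in ("t2v", "i2v"):
        raise ValueError(f"unsupported mode: {mode!r}")
    if mode == "i2v" and image_path is None:
        raise ValueError("i2v mode needs an image_path")

    cmd = ["bash", RUN_SCRIPT, "--mode", mode, "--prompt", prompt]
    if mode == "i2v":
        cmd += ["--image_path", image_path]
    cmd += ["--output_path", output_path]
    return cmd


cmd = build_command(
    "t2v",
    "A majestic eagle flying over snowy mountains at sunset",
    "results/eagle_sunset.mp4",
)
print(shlex.join(cmd))  # shell-quoted form, safe to paste into a terminal
```

Passing the list to `subprocess.run(cmd, check=True)` would execute it; keeping the arguments as a list avoids shell-quoting problems with prompts that contain quotes or spaces.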

| Model Version | Suggested Hardware |
|---|---|
| MAGI-1-24B | 8× NVIDIA H100 / RTX 4090 |
| MAGI-1-24B-distill | 4× H100 or 8× RTX 4090 |
| MAGI-1-4.5B | 1× RTX 4090 or A100 |
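A rough weights-only estimate helps explain these recommendations. The sketch below assumes bf16 storage (2 bytes per parameter), with fp8 (1 byte) as a comparison; both precisions are assumptions rather than figures from the article, and activations, the text encoder, the VAE, and any caches are not counted, so real requirements are higher.

```python
# Bytes needed to store one parameter at each precision (assumed, see lead-in).
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}

# Memory per card in GB for two of the GPUs named in the table.
GPU_MEMORY_GB = {"RTX 4090": 24, "H100": 80}


def weight_memory_gb(params_billion: float, dtype: str = "bf16") -> float:
    """Weights-only footprint in GB: 1e9 params * bytes, divided by 1e9 bytes per GB."""
    return params_billion * BYTES_PER_PARAM[dtype]


for name, params in (("MAGI-1-24B", 24.0), ("MAGI-1-4.5B", 4.5)):
    need = weight_memory_gb(params)
    fits = [gpu for gpu, mem in GPU_MEMORY_GB.items() if need < mem]
    print(f"{name}: ~{need:.0f} GB of bf16 weights; fits on a single: {fits or 'none listed'}")
```

Under these assumptions the 24B weights alone exceed a single 24 GB card, which is consistent with the multi-GPU rows, while the 4.5B model leaves headroom on one RTX 4090.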

Final Thoughts

MAGI-1 marks a turning point for open-source generative video, combining a modern architecture with reproducibility, scalability, and fine-grained control. Its autoregressive chunking and leading Physics-IQ scores make it a strong candidate for real-time applications, video research, and product integration.

Whether you’re building streaming pipelines, animation tools, or generative video services, MAGI-1 is open where competing models stay closed. With inference available through both Docker and a source install, it is practical to run outside the lab as well.

Verdict: A top-tier open-source generative video model with serious engineering behind it, and a real contender to reshape the AI video landscape.

Source: Noticias inteligencia artificial