Microsoft has just made a strong move in a key area for the next wave of AI applications: voice. The company has released VibeVoice-Realtime-0.5B, an open-source text-to-speech model that streams audio in real time, with roughly 300 ms until the first audio is produced. It is designed to plug into terminals, backends, and interactive apps.

Unlike many closed TTS services exposed only through cloud APIs, VibeVoice launches with a clear focus: giving technical teams a complete framework for building low-latency, multi-turn conversational voice experiences, with the option to self-host and keep full control of the infrastructure.

What does VibeVoice bring to technical teams?

For developers and system administrators, VibeVoice is interesting for several reasons:

- Real-time model: VibeVoice-Realtime-0.5B is optimized for streaming, generating the first chunk of audio in roughly 300 ms and supporting incremental text input—ideal for agents, assistants, and support bots.

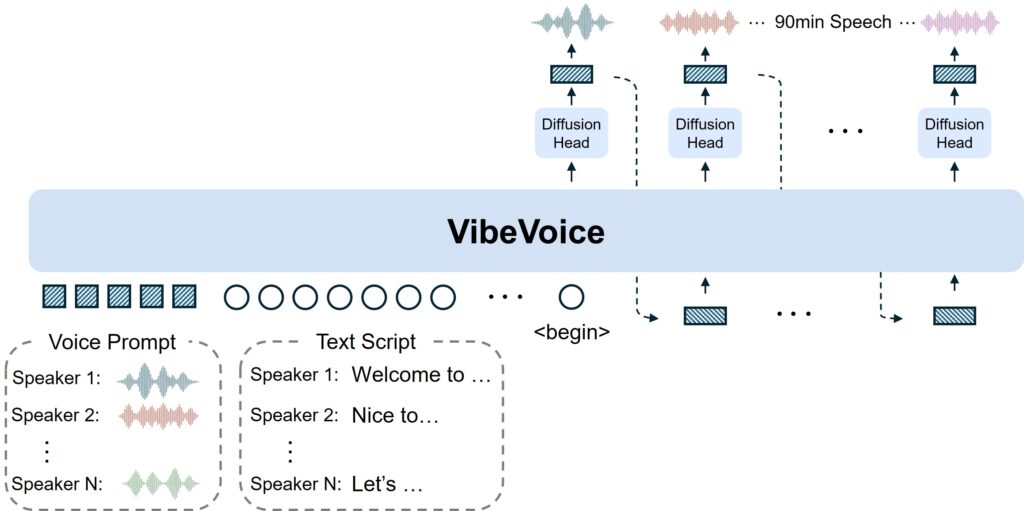

- Built for long sequences: The original VibeVoice framework, in its long-form variant, is designed for conversations of up to about 90 minutes with up to four speakers, something rare in traditional TTS systems.

- Modern, modular stack: The system uses continuous audio tokenizers (acoustic and semantic) that operate at just 7.5 Hz, preserving audio quality while dramatically cutting the compute cost of long sequences. On top of them, an LLM (Qwen2.5-1.5B in the original VibeVoice-1.5B model) handles context and dialogue flow, and a diffusion head adds the fine-grained acoustic detail.

- Open source and reproducible: Code and models are available on GitHub and Hugging Face under open licenses for research and development—key for teams that don’t want to depend entirely on closed cloud services.
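To see why the 7.5 Hz frame rate matters for long sequences, here is a back-of-the-envelope calculation. The 90-minute and 7.5 Hz figures come from the text above; the 50 Hz comparison rate and the 24 kHz output sample rate are illustrative assumptions, not official VibeVoice specifications.

```python
# Back-of-the-envelope sequence lengths for long-form speech generation.
# 7.5 Hz and 90 minutes come from the article; the 50 Hz baseline and the
# 24 kHz sample rate are illustrative assumptions.

TOKENIZER_RATE_HZ = 7.5      # VibeVoice tokenizer frame rate (from the text)
BASELINE_RATE_HZ = 50.0      # hypothetical higher-rate audio codec, for comparison
SAMPLE_RATE_HZ = 24_000      # assumed output audio sample rate


def frames_for(duration_s: float, frame_rate_hz: float) -> int:
    """Number of tokenizer frames needed to represent `duration_s` seconds of audio."""
    return round(duration_s * frame_rate_hz)


session_s = 90 * 60  # a 90-minute long-form conversation
vibevoice_frames = frames_for(session_s, TOKENIZER_RATE_HZ)
baseline_frames = frames_for(session_s, BASELINE_RATE_HZ)
samples_per_frame = SAMPLE_RATE_HZ / TOKENIZER_RATE_HZ

print(f"{vibevoice_frames:,} frames at 7.5 Hz")                   # 40,500 frames at 7.5 Hz
print(f"{baseline_frames:,} frames at 50 Hz")                     # 270,000 frames at 50 Hz
print(f"{baseline_frames / vibevoice_frames:.1f}x fewer frames")  # 6.7x fewer frames
print(f"{samples_per_frame:.0f} audio samples per frame")         # 3200 audio samples per frame
```

At 7.5 Hz, a 90-minute session fits in about 40,500 frames, roughly 6.7 times fewer than at the assumed 50 Hz. Because self-attention cost in a standard transformer grows roughly quadratically with sequence length, the saving in attention compute is larger still (on the order of 6.7² ≈ 44 times), although a real system has other costs on top.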

Experimental multilingual voices, including Spanish

One of the most eye-catching announcements is the addition of experimental speakers in nine languages: Spanish, German, French, Italian, Japanese, Korean, Dutch, Polish, and Portuguese. The goal is to provide ready-to-use speakers for multilingual scenarios and make it easier to test across markets.

One point matters for technical readers: the current release is trained primarily on English (earlier VibeVoice models also covered Chinese), and support for other languages is still experimental. Prompts in these languages can therefore occasionally produce odd or less natural output.

For prototyping, internal demos, or support tools where “good enough” is acceptable, these voices are often sufficient. For user-facing, production-grade products, teams should test thoroughly, set realistic expectations, and combine VibeVoice with other TTS systems where necessary.

Architecture aimed at agents, backends, and the terminal

VibeVoice fits neatly into the kind of applications development and operations teams are starting to deploy: AI agents, internal assistants, voice-enabled dashboards, monitoring systems with spoken alerts, and more.

Key integration points from an engineering perspective:

- Streaming via WebSocket: The repository includes examples for spinning up a WebSocket server to serve audio in real time, which makes it easy to connect to web UIs, mobile apps, or even terminal frontends.

- Clean separation of concerns: The model focuses on speech—no music, background ambience, or complex audio mixing. That keeps orchestration simple when combining it with game engines, audio mixers, or telephony stacks.

- Designed for your own infra: Because it doesn’t depend on a commercial API, VibeVoice can be deployed on-prem, in private clouds, or even in air-gapped environments, as long as you have sufficient GPU resources. For many sysadmins in regulated sectors (healthcare, finance, public sector), that’s a decisive difference.
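The repository's WebSocket demo is the reference for the actual protocol. The sketch below only illustrates the client-side pattern in plain Python: consume audio chunks as they arrive, record the time to the first chunk (the metric behind the ~300 ms figure), and assemble the result. The wire format (raw 16-bit mono PCM at 24 kHz, one binary message per chunk) and the `fake_audio_stream` generator are assumptions standing in for a real connection.

```python
import asyncio
import io
import time
import wave
from typing import AsyncIterator, Optional

# Assumed wire format: one binary message per chunk of raw 16-bit mono PCM.
# Check the repository's WebSocket demo for the real format and sample rate.
SAMPLE_RATE_HZ = 24_000
SAMPLE_WIDTH_BYTES = 2


async def fake_audio_stream(n_chunks: int = 5, chunk_ms: int = 100,
                            first_delay_s: float = 0.05) -> AsyncIterator[bytes]:
    """Hypothetical stand-in for a WebSocket connection yielding PCM chunks."""
    samples_per_chunk = SAMPLE_RATE_HZ * chunk_ms // 1000
    await asyncio.sleep(first_delay_s)  # simulated delay before the first audio
    for _ in range(n_chunks):
        yield b"\x00" * (samples_per_chunk * SAMPLE_WIDTH_BYTES)  # silence as placeholder
        await asyncio.sleep(0)  # hand control back to the event loop, like a network read


async def consume(stream: AsyncIterator[bytes]) -> tuple[float, bytes]:
    """Collect streamed PCM; return (time to first chunk in seconds, WAV bytes)."""
    start = time.perf_counter()
    first_chunk_s: Optional[float] = None
    pcm = bytearray()
    async for chunk in stream:
        if first_chunk_s is None:
            first_chunk_s = time.perf_counter() - start
        pcm.extend(chunk)  # a real player would start playback here instead of buffering
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(SAMPLE_WIDTH_BYTES)
        wav.setframerate(SAMPLE_RATE_HZ)
        wav.writeframes(bytes(pcm))
    return (first_chunk_s if first_chunk_s is not None else 0.0), buf.getvalue()


if __name__ == "__main__":
    ttfc_s, wav_bytes = asyncio.run(consume(fake_audio_stream()))
    print(f"time to first chunk: {ttfc_s * 1000:.0f} ms, WAV size: {len(wav_bytes)} bytes")
```

In a real client, `fake_audio_stream` would be replaced by iteration over an open WebSocket connection (for example through a third-party client library), and chunks would go to an audio device as they arrive rather than being buffered until the end.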

Performance, costs, and where it fits in your stack

Using low-rate tokenization at 7.5 Hz and a ~0.5B-parameter model for the realtime variant, VibeVoice seeks a balance between latency and compute cost. It’s not a toy, but it’s also not an enormous model that only hyperscalers can realistically run.

In practice:

- On a mid-range GPU, you can expect interactive usage for a handful of concurrent users.

- On servers with multiple GPUs, you can scale toward virtual call centers, support assistants, or internal voice tools.

- In CPU-only environments, real-time performance will be significantly constrained—this is not the right choice if you’re limited strictly to CPU.
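One way to turn "a handful of concurrent users" into a number for your own hardware is to measure the real-time factor (RTF: compute seconds per second of generated audio) for a single stream and derive a rough per-GPU ceiling. This sketch assumes throughput scales linearly with concurrency, which batching, memory limits, and scheduling overhead will all distort. Treat it as a starting point for load tests, not a sizing guarantee; no RTF figures for VibeVoice are implied.

```python
import math

# Rough GPU sizing from a measured real-time factor (RTF).
# RTF = compute seconds per second of generated audio; < 1 means faster than real time.
# Linear scaling across concurrent streams is a simplifying assumption.


def max_realtime_streams(rtf_single: float, headroom: float = 0.75) -> int:
    """Upper-bound estimate of concurrent real-time streams on one GPU.

    headroom: fraction of GPU time you are willing to commit, leaving slack
    for latency spikes and other workloads.
    """
    if not 0 < rtf_single < 1:
        raise ValueError("rtf_single must be in (0, 1) for real-time playback")
    if not 0 < headroom <= 1:
        raise ValueError("headroom must be in (0, 1]")
    # Small epsilon guards against float artifacts such as 0.7 / 0.1 == 6.999...
    return math.floor(headroom / rtf_single + 1e-9)


def gpus_needed(concurrent_users: int, rtf_single: float, headroom: float = 0.75) -> int:
    """GPUs required if each user holds one real-time stream at peak."""
    per_gpu = max_realtime_streams(rtf_single, headroom)
    if per_gpu == 0:
        raise ValueError("one GPU cannot sustain even a single stream at this headroom")
    return math.ceil(concurrent_users / per_gpu)


print(max_realtime_streams(0.25))  # 3
print(gpus_needed(20, 0.25))       # 7
```

For example, a measured RTF of 0.25 with 75% headroom gives 3 streams per GPU, so 20 concurrent users would need 7 GPUs under these assumptions.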

For ops teams, the upside is that you can treat it like any other internal service: Docker images, Kubernetes orchestration, resource limits, latency metrics, autoscaling policies, and so on.
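For autoscaling, the Kubernetes Horizontal Pod Autoscaler computes `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)` and skips scaling when that ratio is within a tolerance (0.1 by default). The Python sketch below reproduces only that core rule so you can sanity-check targets for a TTS deployment. The metric (average active audio streams per pod) and the replica bounds are hypothetical choices, and the real controller adds stabilization windows and other behavior not modeled here.

```python
import math

# Core scaling rule of the Kubernetes Horizontal Pod Autoscaler:
#   desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)
# The metric here (average active audio streams per pod) is a hypothetical custom metric.
# Stabilization windows, scaling policies, and missing-metric handling are not modeled.


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     tolerance: float = 0.1, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave replicas unchanged
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # clamp to configured bounds


print(desired_replicas(2, current_metric=6, target_metric=4))  # 3
print(desired_replicas(3, current_metric=2, target_metric=4))  # 2
```

With 2 replicas averaging 6 active streams against a target of 4, the ratio is 1.5 and the rule asks for 3 replicas; at 2 streams per pod across 3 replicas it scales down to 2.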

Security, deepfakes, and usage control

Microsoft puts significant emphasis on risks and limitations. The VibeVoice repo was even temporarily disabled in 2025 due to misuse and later reopened with more guardrails.

Some highlights that matter for technical governance:

- Deepfake mitigation: Voice prompts are provided in an embedded format rather than letting users upload arbitrary voice samples, which reduces the risk of one-to-one cloning of real voices. For custom voices, you need to work directly with Microsoft.

- Intended for R&D first: The documentation explicitly advises against dropping VibeVoice straight into commercial environments without further testing, due both to model bias/accuracy issues and the potential for abuse.

- Regulatory compliance: Teams are expected to label AI-generated audio and comply with local laws on data protection, IP, and voice fraud.

For sysadmins, this translates into clear access policies, per-project isolation, robust request logging and, where appropriate, rate limiting and usage monitoring.
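Per-project isolation and rate limiting can be prototyped with one token bucket per project in front of the TTS service. This is a minimal, single-process sketch: `ProjectGate` and the logged fields are hypothetical, and a multi-replica deployment would need shared state (for example in a cache or database) instead of an in-memory dict. It logs the request size rather than the text itself, which keeps audit logs useful without storing user content.

```python
import logging
import time
from typing import Callable, Dict

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("tts-gateway")  # hypothetical gateway in front of the TTS service


class TokenBucket:
    """Token bucket: allows bursts up to `capacity`, then `rate_per_s` requests per second."""

    def __init__(self, capacity: float, rate_per_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate_per_s = rate_per_s
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate_per_s)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class ProjectGate:
    """Per-project isolation: one bucket per project id, every decision logged."""

    def __init__(self, capacity: float, rate_per_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate_per_s = rate_per_s
        self.clock = clock
        self._buckets: Dict[str, TokenBucket] = {}

    def check(self, project_id: str, text: str) -> bool:
        if project_id not in self._buckets:
            self._buckets[project_id] = TokenBucket(self.capacity, self.rate_per_s, self.clock)
        allowed = self._buckets[project_id].allow()
        # Log metadata only (project, size, decision), not the text itself.
        log.info("tts_request project=%s chars=%d allowed=%s", project_id, len(text), allowed)
        return allowed


if __name__ == "__main__":
    gate = ProjectGate(capacity=2, rate_per_s=0.5)
    for i in range(3):
        gate.check("support-bot", f"Hello, caller number {i}.")
```

Injecting `clock` keeps the limiter deterministic in tests, and keying buckets by project means one noisy tenant cannot exhaust another's quota.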

Why VibeVoice matters for the ecosystem

Beyond the hype, VibeVoice fits into a clear trend: AI agents are moving from text-only interfaces to real-time voice interfaces that hook into corporate systems, developer tools, and internal workflows.

A major vendor like Microsoft open-sourcing a framework of this calibre, with a focus on low latency and long-form dialogue, sends a strong signal to the open ecosystem:

- it reduces exclusive dependence on SaaS TTS APIs,

- it lets platform teams build their own voice layer tailored to their requirements,

- and, crucially, it makes it possible to experiment safely with callbots, internal assistants, spoken admin consoles, and DevOps tools driven by natural conversation.

For developers and system administrators, the message is simple: voice is no longer just a flashy demo extra—it’s becoming another core component of the AI infrastructure stack. VibeVoice offers a powerful, auditable, and extensible starting point to build on top of it… as long as it’s deployed with solid security practices, realistic performance expectations, and clear metrics around cost and reliability.