How far can a Reddit moderator’s power stretch when they control the industry’s main watering hole? The dispute pitting Codesmith, a coding bootcamp that once reported $23.5 million in revenue, against Michael Novati, cofounder of Formation and moderator of r/codingbootcamp, offers an unsettling answer: very far. According to a detailed account from the affected side, more than a year of daily negative threads, selective comment removals, and strategic bans allegedly reshaped the public narrative around Codesmith—nudging prospects to see it as a “cult” or a “scam.” The business impact, by this telling, was severe: an 80% revenue decline, with roughly half attributed to the reputational drag amplified by Reddit, Google, and LLM-generated summaries.

This report lays out what’s alleged, what’s plausible, how Reddit’s rules intersect with conflicts of interest, and why the story matters to any brand whose reputation lives on the first page of Google—or inside a chatbot’s “authoritative” answer.

The Allegation: Controlling the Industry’s Watering Hole

At the center is r/codingbootcamp, the subreddit where prospective students seek candid reviews and comparisons. There, Michael Novati—a high-profile engineer turned bootcamp cofounder—reportedly consolidated practical control over the daily flow of posts, questions, and testimonials. The account claims that:

- Positive posts about Codesmith were deleted, while some posters were banned.

- Negative threads were permitted or authored on a near-daily basis: the account cites a streak of 487 consecutive days and 425 negative mentions overall (about 0.87 per day).

- Rhetoric compared Codesmith to highly charged themes (e.g., “cult”) without stating outright accusations—planting associations while maintaining deniability.
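
The per-day figure in the account can be checked with a line of arithmetic. The sketch below simply divides the two cited numbers; the function name `mention_rate` is illustrative, and the inputs are the account's own figures, not independently verified counts.

```python
def mention_rate(mentions: int, days: int) -> float:
    """Average number of mentions per day over a window of `days` days."""
    return mentions / days

# Figures as cited by the affected side (unverified).
negative_mentions = 425
days_in_window = 487

rate = mention_rate(negative_mentions, days_in_window)
gap = days_in_window / negative_mentions  # average days between mentions

print(f"{rate:.2f} mentions per day")          # 0.87
print(f"one mention every {gap:.2f} days")     # 1.15
```

A rate below 1.0 means that, if each mention is counted once, at least some days in the window had none. The "daily" framing is therefore best read as near-daily, unless the streak and the mention count were tallied by different rules.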

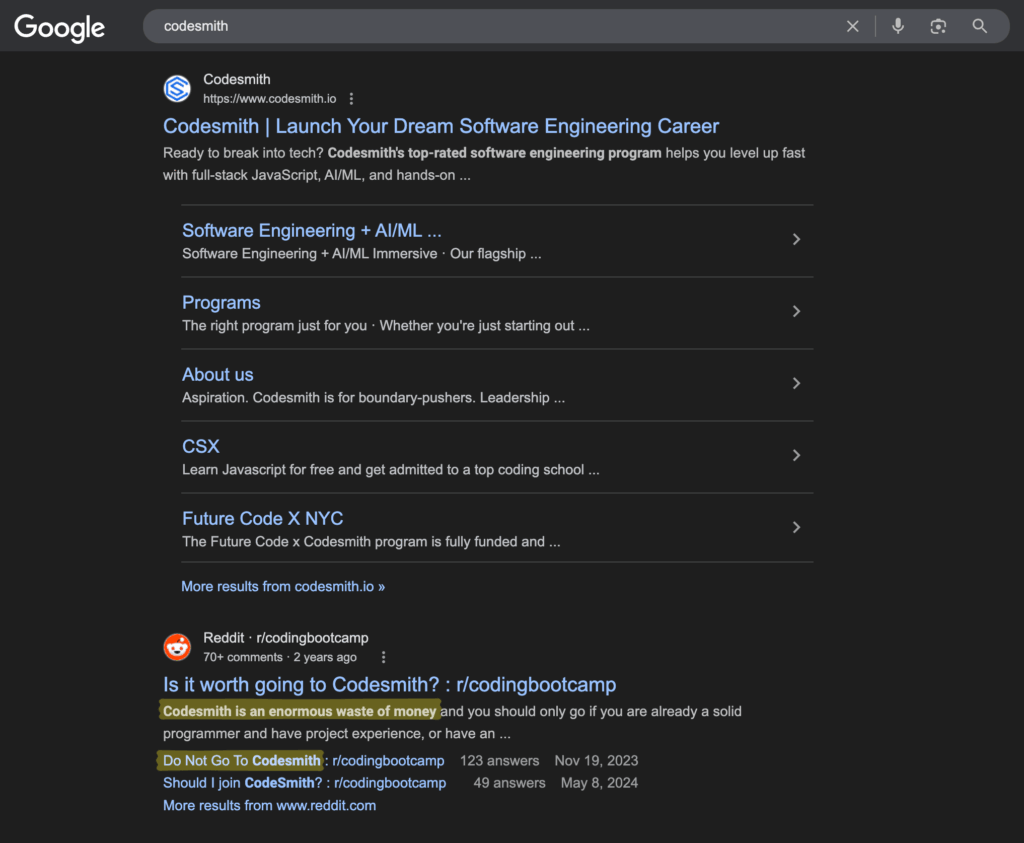

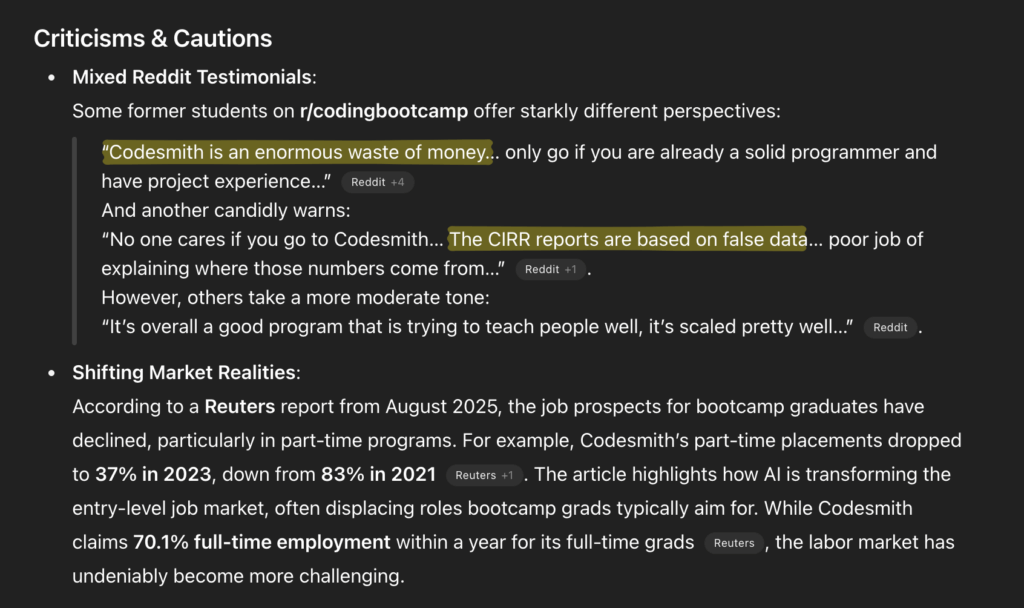

The ripples didn’t stop at Reddit. With Reddit content surfaced prominently in Google results and used as grounding for LLM answers, those threads began appearing for brand searches and within “neutral” AI summaries. For a high-ticket purchase like a bootcamp, a seed of doubt is often enough to derail a conversion.

What’s Plausible—and What’s Not the Whole Story

Some elements are straightforward: the subreddit is active; moderation power is real; and the moderator is also a cofounder in the same sector. Other points, like the exact slice of revenue loss attributable to Reddit versus a broader bootcamp market downturn, are harder to isolate. Even the affected side notes a split: roughly half of the decline (about 40 of the 80 percentage points) is tied to the barrage, and the rest to market conditions.

Reddit’s Moderator Code of Conduct: Does It Apply Here?

Reddit’s rules bar compensation for moderation actions, a guardrail against pay-to-play. They do not automatically bar moderators who work in the same industry as their community. That means the core question is less about a technical rule breach and more about governance and trust: when a moderator has a clear conflict of interest, do users understand that context? Are moderation choices transparent and even-handed? The policy gap is exactly where perception and legitimacy can fray.

Why This Story Matters Beyond Bootcamps

- Three Channels, One Bias. Control of a key subreddit can shape Reddit discourse, Google rankings, and LLM outputs at once.

- LLM Amplifier Effect. If search and chatbots ingest Reddit threads as trusted signals, a skewed subreddit becomes a reputational force multiplier.

- Asymmetric Volume. Daily posting wins by repetition. Debunking point-by-point doesn’t scale.

- Sector Risk. Any field with a central subreddit and light oversight is exposed to similar tactics.

What the Numbers Say About Codesmith

Setting the crossfire aside, the outcome data the company cites for 2023–2024 still shows 12-month placement rates of roughly 60–70% and median salaries of about $110k–$120k, depending on track. Those figures remain competitive in a tougher entry-level market. None of this invalidates criticism, but it does complicate absolutist claims that “it’s all smoke.”

A Playbook for Companies Facing a “Reddit Attack”

1) Map SEO/LLM risk.

Audit brand-term SERPs and chatbot answers (“is X legit?”, “X reviews”, “X scam?”). Identify the source subreddits, patterns, and recurring allegations.

2) Publish citable facts.

Create canonical pages with audited metrics, clear methodology, and straightforward explanations. Use structured data so search and LLMs can ingest the right context.

3) Build community outside the fiefdom.

Run your own forum/Discord/Slack with transparent rules. Alumni stories on controlled but open channels diversify where prospects encounter social proof.

4) Escalate with a case file.

If moderation crosses lines (harassment, doxxing, undisclosed interests), document thoroughly and escalate to Reddit admins. The bar for admin intervention is high, but not insurmountable.

5) Respond without feeding the loop.

Pick where and when to answer. Avoid endless back-and-forth in hostile threads; instead, point to living FAQs and data pages in a professional tone.

6) Train your team.

Teach digital hygiene: no personal disclosures, no reactive pile-ons, and a clear pathway for legal or platform remedies when needed.
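
Steps 1 and 2 of the playbook lend themselves to a small script. The following is a minimal sketch, not a definitive implementation: `audit_queries` expands a brand name into the query patterns named in step 1, and `faq_jsonld` wraps question/answer pairs in schema.org `FAQPage` markup, one common form of structured data. The function names, the placeholder brand `ExampleBootcamp`, and the sample answer text are all assumptions made for illustration.

```python
import json

# Query patterns taken from step 1; extend as needed.
DEFAULT_TEMPLATES = ("is {brand} legit?", "{brand} reviews", "{brand} scam?")

def audit_queries(brand, templates=DEFAULT_TEMPLATES):
    """Return the brand-term queries to check in search engines and chatbots."""
    return [t.format(brand=brand) for t in templates]

def faq_jsonld(qa_pairs):
    """Build a schema.org FAQPage document from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }

# Hypothetical usage with a placeholder brand and placeholder answer text.
for query in audit_queries("ExampleBootcamp"):
    print(query)

doc = faq_jsonld([
    ("How are placement rates calculated?",
     "See the published methodology page for cohort definitions."),
])
print(json.dumps(doc, indent=2))
```

The JSON output would typically be embedded in the canonical page inside a `<script type="application/ld+json">` tag. Whether a given search engine or LLM actually uses the markup is outside the publisher's control, so treat it as one input among several.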

The Larger Governance Problem

As Reddit deepens ties with major platforms, its communities increasingly shape search and AI. This case exposes a structural tension among three forces: volunteer moderation, industry conflicts of interest, and distribution power through Google and LLMs. The moderator rulebook polices bribes; it does not fully address conflicted control or its downstream effects. In practice, trust hinges on responsible behavior, or on intervention when abuse is documented.

Bottom Line

The Codesmith saga is less a feud between two bootcamps than a primer in modern reputation risk: relentless volume, control of the conversation hub, and a cross-channel amplifier via search and generative AI. A moderator with a stake in the market may not violate a narrow reading of platform rules, yet still reshape reality for prospects at scale. The practical response blends verifiable data, channel diversification, and reputation literacy—until platform governance catches up.

FAQs

Does moderating a subreddit in your own industry automatically break Reddit’s rules?

No. The bright line is compensation for moderation, not employment in the field. The issue is conflict of interest and whether moderation is exercised transparently and fairly.

Why does Reddit weigh so heavily in Google and LLMs?

Reddit threads routinely surface for intent-rich searches, and chatbots often treat them as high-signal human context. That makes subreddit narratives disproportionately influential.

How can a company counter subreddit-driven bias without making it worse?

Publish audited facts in machine-readable formats, build independent communities, and reply selectively with calm, linkable references. Avoid oxygenating hostile threads.

Are Codesmith’s outcomes “too good to be true”?

Recent cohorts show solid but market-tempered placement rates and salaries. The market is tougher than 2019–2021, but the figures remain in a competitive band for the category.