The boom in large language models, AI APIs and DevOps tools has made one business model increasingly common: pay-per-use. Tokens consumed, API calls, compute minutes, pipelines executed… everything gets counted. But behind that seemingly simple “we just count events” lies a very real problem: how to do it at scale, in real time, without building a fragile Frankenstein of internal services.

That’s the gap OpenMeter wants to fill. It’s an open-source platform focused on metering for usage-based billing, aimed especially at AI, API and DevTool companies. It’s not a full billing suite; it’s an infrastructure layer that tries to do one thing extremely well: measure consumption reliably.

A usage counter for the AI economy

OpenMeter’s value proposition is easy to explain. Your applications send events such as:

- “inference request completed”

- “API query executed”

- “CI/CD job finished”

OpenMeter ingests, aggregates and stores those events, and exposes a REST API to query usage by customer, product, plan or metric. That data can then be wired directly into:

- Payment platforms like Stripe or other billing systems

- Internal dashboards for product, finance or operations

- Rate-limiting and automatic usage caps

- Internal cost allocation across teams or projects

In a world where every AI token and every millisecond of GPU time costs money, counting correctly is almost as important as generating good outputs. That’s where the project aims to stand out compared with the classic usage_events table that starts to crumble as soon as the product grows.
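As a rough illustration of what such an event might look like, here is a minimal sketch in Python. It assumes a CloudEvents-style envelope, which is how OpenMeter's documentation describes ingestion. The helper name `build_usage_event`, the event type, the source name and the `data` fields are all hypothetical, and the actual send over the REST API or an SDK is deliberately left out.

```python
import json
import uuid
from datetime import datetime, timezone


def build_usage_event(event_type, subject, data, source="my-service"):
    """Build a CloudEvents-style usage event as a plain dict (illustrative only)."""
    return {
        "specversion": "1.0",
        "id": str(uuid.uuid4()),   # unique per event, so a retry can be recognised
        "type": event_type,        # what happened (hypothetical type name below)
        "source": source,          # which service emitted the event
        "subject": subject,        # who the usage is attributed to, e.g. a customer
        "time": datetime.now(timezone.utc).isoformat(),
        "data": data,              # the measured quantities
    }


# Hypothetical example: one completed inference request that used 512 tokens.
event = build_usage_event(
    event_type="inference.completed",
    subject="customer-123",
    data={"model": "example-model", "tokens": 512},
)
print(json.dumps(event, indent=2))
```

The point of the envelope is that every event carries its own identity, timestamp and owner, which is what later makes aggregation per customer and per time window possible.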

Architecture built for high-volume workloads

Unlike many legacy solutions, OpenMeter is designed from the ground up for modern AI-driven data flows:

- It uses Apache Kafka as its stream-processing backbone, well suited to high-volume, real-time event pipelines.

- It relies on ClickHouse for analytics, optimised for fast queries over large amounts of time-series and usage data.

On top of that, it offers a developer-friendly interface:

- A well-defined REST API for ingesting and querying usage

- Official SDKs for JavaScript, Python and Go

- The ability to generate additional clients from its OpenAPI spec

For teams already working with microservices, event-driven architectures and data pipelines, OpenMeter fits in as just another internal service, without forcing a rebuild of the entire stack.
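To make the "ingest, then aggregate" idea more concrete, the following sketch groups events into fixed one-minute windows per customer using only the Python standard library. It is a toy, in-memory stand-in for what a streaming pipeline and an analytics database would do at scale, not OpenMeter's actual implementation; the event fields and the function names `window_start` and `aggregate` are assumptions made for the example.

```python
from collections import defaultdict
from datetime import datetime


def window_start(ts, window_seconds=60):
    """Return the epoch second at which the window containing ts begins."""
    epoch = int(ts.timestamp())
    return epoch - (epoch % window_seconds)


def aggregate(events, window_seconds=60):
    """Sum tokens per (subject, window start) pair."""
    totals = defaultdict(int)
    for e in events:
        ts = datetime.fromisoformat(e["time"])
        key = (e["subject"], window_start(ts, window_seconds))
        totals[key] += e["tokens"]
    return dict(totals)


# Hypothetical sample events with explicit UTC offsets.
events = [
    {"subject": "cust-a", "time": "2024-01-01T00:00:10+00:00", "tokens": 100},
    {"subject": "cust-a", "time": "2024-01-01T00:00:50+00:00", "tokens": 50},
    {"subject": "cust-a", "time": "2024-01-01T00:01:05+00:00", "tokens": 25},
    {"subject": "cust-b", "time": "2024-01-01T00:00:30+00:00", "tokens": 10},
]

totals = aggregate(events)
for (subject, start), tokens in sorted(totals.items()):
    print(subject, start, tokens)
```

Pre-aggregating into windows like this is what keeps "usage for customer X last month" a cheap query instead of a scan over every raw event.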

Open source, without vendor lock-in

One recurring concern in the AI ecosystem is having a critical component like metering locked away in a proprietary SaaS that is hard to leave. OpenMeter takes the opposite approach:

- The project is open source, under the Apache 2.0 license.

- It can be self-hosted on a company’s own infrastructure:

  - A quickstart with `docker compose` spins up Kafka, ClickHouse and the OpenMeter API in minutes.

  - There’s also a Helm chart for deploying on Kubernetes.

- For teams that don’t want to manage that layer themselves, there’s a cloud version (OpenMeter Cloud) with a public comparison against the self-hosted option.

That dual model fits how many AI companies operate: start experimenting with a managed service, then move to a self-hosted deployment when event volumes or compliance requirements demand it.

Use cases for AI and API products

OpenMeter can be used in many industries, but its positioning is clear: AI platforms, APIs and developer tools. Some typical scenarios:

- Generative AI platforms

  - Charge per request, per token, per second of model runtime or per embedding query.

  - Implement hybrid pricing models (fixed + variable) with usage limits enforced in near real time.

- Commercial APIs

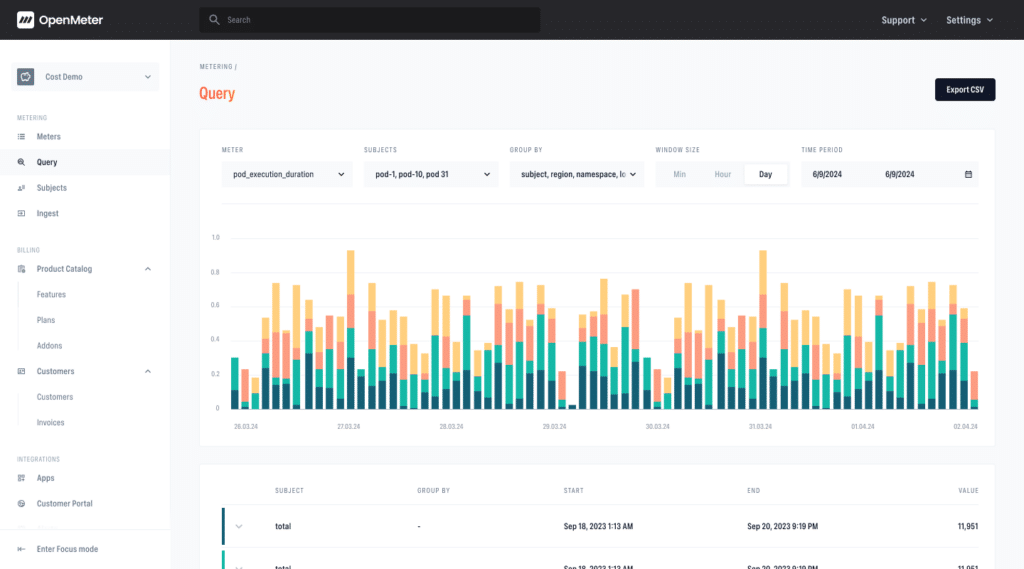

  - Track calls by endpoint, client, region or operation type.

  - Power dashboards and alerts for customers based on actual consumption.

- Infrastructure and observability tools

  - Meter Kubernetes pod execution time, logs processed or pipelines completed.

  - Allocate infrastructure costs internally to engineering teams or business units.

- Developer-focused SaaS

  - Add usage-based pricing when the internal “usage” table starts to become a bottleneck and a source of billing errors.

The project’s repository includes concrete examples: measuring Kubernetes pod runtime, usage-based billing with Stripe, or log-driven metering, which helps teams replicate already-tested patterns instead of starting from scratch.
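The hybrid pricing model mentioned above (a fixed fee plus a variable part) reduces to simple arithmetic once usage is metered. The sketch below is one possible way to compute it, assuming a plan with a base fee, a number of included units and a per-unit overage price. The function name `hybrid_charge` and all prices are made up for illustration, and `Decimal` is used to avoid floating-point rounding in money amounts.

```python
from decimal import Decimal


def hybrid_charge(units_used, base_fee, included_units, overage_price):
    """Fixed base fee plus a per-unit price for usage beyond the included units."""
    overage_units = max(0, units_used - included_units)
    return base_fee + overage_price * overage_units


# Hypothetical plan: 20.00 per month, 1,000,000 tokens included,
# 0.000002 per additional token.
plan = {
    "base_fee": Decimal("20.00"),
    "included_units": 1_000_000,
    "overage_price": Decimal("0.000002"),
}

within_plan = hybrid_charge(400_000, **plan)     # no overage, only the base fee
over_plan = hybrid_charge(1_500_000, **plan)     # 500,000 tokens of overage

print(within_plan, over_plan)
```

The billing formula itself is trivial; the hard part the article describes is producing a trustworthy `units_used` figure for each customer and period.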

Low latency, high traceability

In a usage-based business model, it’s not enough to have the data “eventually”. OpenMeter is designed to provide:

- Near real-time latency for usage data, which is crucial for:

  - Live dashboards

  - Customer alerts when they approach or exceed limits

  - Automatic throttling or shutdown when usage caps are hit

- A single, auditable source of truth for consumption events, which helps with:

  - Billing disputes (“Why did I pay this invoice?”)

  - Internal profitability analysis by account, feature or region

  - Governance and cost control in AI infrastructure

In an environment where GPU and storage costs can quickly spiral, this “usage ledger” becomes another pillar of financial and technical governance.
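The alerting and throttling behaviour described above depends on comparing fresh usage totals against a cap. A minimal sketch of that decision, assuming a single numeric cap per customer and a warning threshold at 80% of it, might look like this. The function name `usage_status`, the status labels and the default threshold are assumptions for the example, not part of OpenMeter.

```python
def usage_status(used, cap, warn_ratio=0.8):
    """Classify current usage against a cap as 'ok', 'warn' or 'block'."""
    if cap <= 0:
        raise ValueError("cap must be positive")
    if used >= cap:
        return "block"   # cap reached: throttle or shut off
    if used >= cap * warn_ratio:
        return "warn"    # approaching the cap: alert the customer
    return "ok"


# Hypothetical customers with a cap of 1,000 units each.
for used in (500, 900, 1000):
    print(used, usage_status(used, cap=1000))
```

A check like this is only as good as the freshness of `used`, which is why near real-time latency matters more here than it does for end-of-month invoicing.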

Community-driven infrastructure

Beyond the technology itself, OpenMeter is leaning heavily on its community:

- A Discord server for support and technical discussion

- A public list of adopters already using the platform in production

- A blog and examples to keep track of the project’s evolution

That community aspect aligns with a growing trend among AI companies: open, composable infrastructure, instead of relying solely on opaque, all-in-one platforms.

A foundational layer for monetising AI

As AI services mature, usage-based pricing is likely to become the norm rather than the exception. And, as happened with logging and monitoring, specialised infrastructure components may well emerge as de facto standards.

OpenMeter wants to be exactly that on the metering side: a dedicated, auditable and extensible layer that allows AI and API companies to offload a complex infrastructure problem without losing control over their data and business model.

For teams about to build their own metering system from scratch, the question is no longer just “Can we build this?”, but rather:

“Does it make sense to build it ourselves when there’s an open project designed specifically for this job?”