In an era where Large Language Model (LLM) applications are becoming central to businesses and AI-driven services, Comet has released Opik, an open-source framework designed to help teams debug, evaluate, and monitor their LLM-based systems efficiently at scale.

From simple RAG chatbots to advanced agentic pipelines, Opik enables developers and enterprises to build LLM applications that are not only faster and more reliable, but also cost-optimized for real-world deployment.

🔥 What is Opik?

Opik is a comprehensive tracing, evaluation, and monitoring platform for LLM applications, offering:

- Development Tools

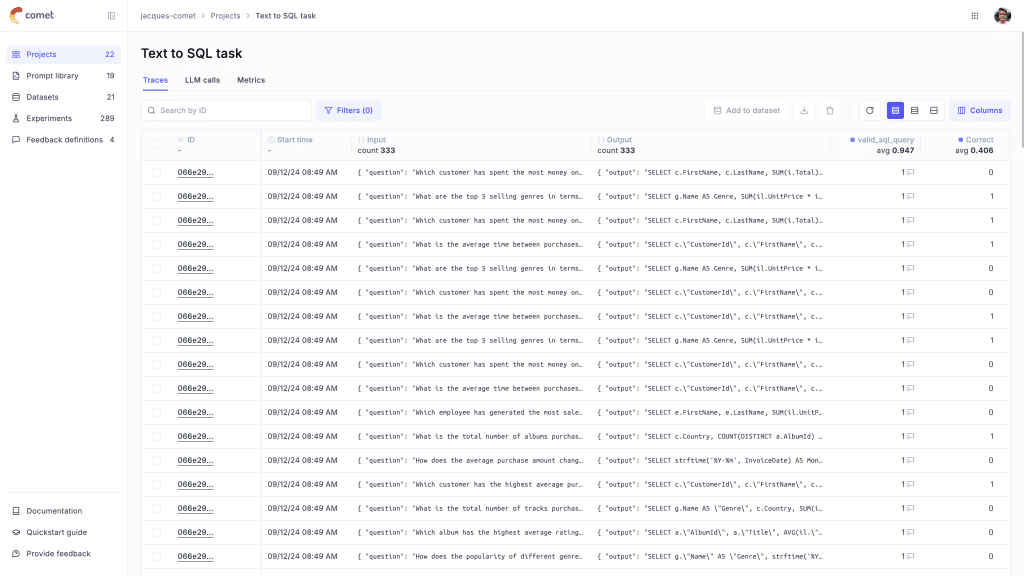

- Tracing: Capture all LLM calls and traces across development and production environments.

- Annotations: Log user feedback and manual evaluations for fine-tuning.

- Playground: Experiment with different prompts and models interactively.

- Evaluation Framework

- Automated Evaluations: Benchmark LLM applications using datasets and experiments.

- LLM-as-a-Judge: Automate the detection of hallucinations, evaluate RAG performance, and assess moderation with AI-based metrics.

- CI/CD Integration: Run automated evaluations directly within your CI/CD pipelines via PyTest.

- Production Monitoring

- Massive Scale: Handle up to 40+ million traces per day, making it ideal for heavy production workloads.

- Dashboards: Visualize token usage, trace counts, and feedback scores.

- Online Evaluation: Score production traces automatically with metrics to catch regressions early.
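To put the "Massive Scale" figure above in perspective, a quick back-of-the-envelope conversion turns 40 million traces per day into a sustained per-second rate. This is plain arithmetic on the number quoted above and assumes traffic is spread evenly over the day (real workloads are burstier); the variable names are illustrative.

```python
# Convert the quoted daily trace volume into an average per-second rate.
TRACES_PER_DAY = 40_000_000
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds

traces_per_second = TRACES_PER_DAY / SECONDS_PER_DAY
print(f"{traces_per_second:.0f} traces/second on average")  # 463 traces/second on average
```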

🛠️ Installation Options

Opik supports multiple deployment modes:

| Method | Instructions |
|---|---|
| Cloud-Hosted (Recommended) | Sign up for a free account on Comet.com |
| Self-Hosted (Docker Compose) | Run `git clone https://github.com/comet-ml/opik.git`, then `cd opik` and `./opik.sh`. Accessible at `localhost:5173` |
| Kubernetes Deployment | Follow the Kubernetes deployment guide in the documentation |

Once installed, configure the Python SDK with:

    pip install opik
    opik configure --use_local

🧩 Integrations

Opik provides out-of-the-box integrations with popular frameworks and providers, including:

- OpenAI

- LangChain

- Haystack

- Anthropic

- Gemini

- Groq

- Ollama

- DeepSeek

- Bedrock

- LiteLLM

- Guardrails

- LlamaIndex

- LangGraph

- Predibase

- Ragas

- CrewAI

- and more…

You can also log traces manually using the simple @track decorator:

    import opik

    @opik.track
    def my_llm_function(user_query: str) -> str:
        # Placeholder body; a real function would call an LLM here.
        return "Hello, world!"

⚖️ Built-In Evaluation Metrics

Opik offers a variety of built-in evaluation tools:

- LLM-as-a-Judge Metrics: Automatically evaluate outputs for hallucinations, relevance, consistency, and more.

- Heuristic Metrics: Fast checks for quality without relying on expensive external LLM calls.
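As a rough illustration of what a heuristic metric does, the sketch below scores an output with plain string checks and no model call. The function names (`exact_match_score`, `contains_score`) and the 0.0 to 1.0 scoring convention are assumptions made for this example; they are not Opik's own metric classes.

```python
def exact_match_score(output: str, reference: str) -> float:
    """Return 1.0 if output equals reference (ignoring case and outer whitespace), else 0.0."""
    return 1.0 if output.strip().lower() == reference.strip().lower() else 0.0


def contains_score(output: str, required: str) -> float:
    """Return 1.0 if the required substring appears in output (case-insensitive), else 0.0."""
    return 1.0 if required.lower() in output.lower() else 0.0


answer = "The capital of France is Paris."
print(exact_match_score(answer, "Paris"))  # 0.0 (the answer is a full sentence, not just "Paris")
print(contains_score(answer, "paris"))     # 1.0 (the substring is present)
```

Checks like these are deterministic and cost nothing per call, which is why they pair well with the slower, model-based metrics.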

Example using the built-in Hallucination metric:

    from opik.evaluation.metrics import Hallucination

    # LLM-as-a-Judge metric: scoring calls a judge model,
    # so model provider credentials must be configured first.
    metric = Hallucination()

    score = metric.score(
        input="What is the capital of France?",
        output="Paris",
        context=["France is a country in Europe."]
    )

    print(score)

🚀 Key Benefits of Opik

- Production-Ready: Supports enterprise-level workloads with monitoring at cloud scale.

- Customizable: Build your own evaluation metrics if needed.

- Extensible: Add new integrations easily for any framework not yet covered.

- CI/CD Friendly: Integrate into any continuous testing pipeline.

- Open-Source Flexibility: Modify and extend without vendor lock-in.
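The "Customizable" and "CI/CD Friendly" points can be combined in a small sketch: a hand-written metric plus a pytest-style test that fails the build when the score drops below a threshold. Everything here is a stand-in written for this example (the `ScoreResult` dataclass, `LengthBudgetMetric`, `fake_llm_app`, and the 0.8 threshold). It does not use Opik's own base classes or its pytest integration, so consult the Opik documentation for the real interfaces.

```python
from dataclasses import dataclass


@dataclass
class ScoreResult:
    """Hypothetical result container: a named score plus a short explanation."""
    name: str
    value: float
    reason: str


class LengthBudgetMetric:
    """Hypothetical custom metric: 1.0 within the word budget, lower as the output grows past it."""

    def __init__(self, max_words: int = 50) -> None:
        self.max_words = max_words

    def score(self, output: str) -> ScoreResult:
        n_words = len(output.split())
        # Ratio of budget to actual length, capped at 1.0; max() guards against empty output.
        value = min(1.0, self.max_words / max(n_words, 1))
        return ScoreResult("length_budget", value, f"{n_words} words, budget {self.max_words}")


def fake_llm_app(question: str) -> str:
    """Stand-in for a real LLM call so the example runs offline."""
    return "Paris is the capital of France."


def test_answer_stays_within_budget() -> None:
    """pytest collects functions named test_*; a failing assert fails the CI job."""
    result = LengthBudgetMetric(max_words=20).score(fake_llm_app("What is the capital of France?"))
    assert result.value >= 0.8, result.reason


test_answer_stays_within_budget()  # also runnable directly, without pytest
```

Keeping the threshold in the test rather than in the metric lets the same metric serve both strict CI gates and looser production monitoring.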

⭐ Final Thoughts

As LLM-based applications move from prototypes to production, Opik fills a critical gap: providing visibility, reliability, and evaluation at scale.

Whether you’re building conversational agents, retrieval-augmented generation (RAG) systems, or complex autonomous workflows, Opik gives you the tooling to trust, optimize, and continuously improve your LLM applications.

For complete installation instructions, integrations, and examples, visit:

🔗 https://github.com/comet-ml/opik