Now deployable with European providers such as Stackscale, which operates data centers in Spain and the Netherlands, PrivateGPT offers full data sovereignty with local, LLM-powered document interaction.

PrivateGPT, the open-source project developed by the team behind Zylon, has emerged as one of the most advanced solutions for implementing generative AI in privacy-critical environments. With over 56,000 stars on GitHub, it allows organizations to interact with their own documents using Large Language Models (LLMs) without sending any data to the cloud.

Capable of running entirely offline, PrivateGPT is designed for industries such as healthcare, legal, government, and manufacturing, where data residency and compliance requirements rule out traditional cloud-based AI solutions.

What is PrivateGPT?

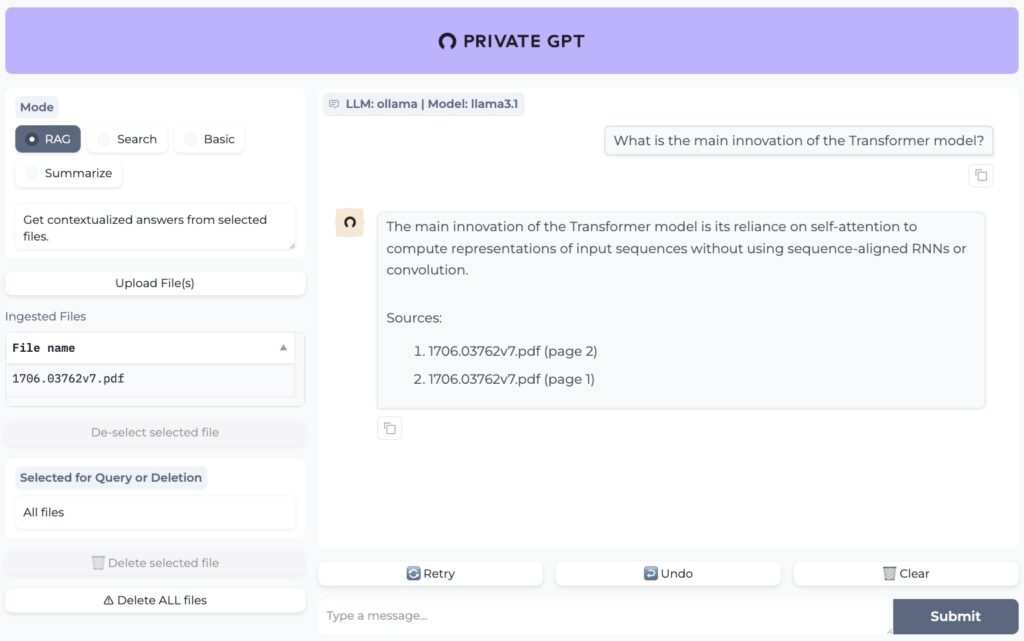

PrivateGPT is a production-ready implementation of a document-based question answering system using a Retrieval-Augmented Generation (RAG) approach. The platform includes:

- Local document ingestion: parsing, chunking, metadata extraction, embeddings generation and storage.

- Contextual queries: retrieving relevant chunks and generating LLM-powered answers.

- Full offline capability: no data ever leaves the local execution environment.
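
The ingestion and query steps listed above can be illustrated with a toy, standard-library-only sketch. This is not PrivateGPT's code: the function names (`chunk_text`, `embed`, `retrieve`, `build_prompt`) are hypothetical, and the bag-of-words "embedding" is a deliberately simple stand-in for a real embedding model, assumed here only to keep the example self-contained.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 40) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding: a term-count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, index: list[tuple[str, Counter]], top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


def build_prompt(query: str, chunks: list[str]) -> str:
    """Assemble the context-augmented prompt that would be sent to a local LLM."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Ingestion: chunk each document and store (chunk, vector) pairs in memory.
documents = [
    "Invoices must be archived for seven years in the finance vault.",
    "Patient records are stored on encrypted disks in the hospital data center.",
]
index = [(chunk, embed(chunk)) for doc in documents for chunk in chunk_text(doc)]

# Query: retrieve the most relevant chunk and build the prompt around it.
question = "Where are patient records stored?"
top_chunks = retrieve(question, index, top_k=1)
prompt = build_prompt(question, top_chunks)
```

Everything in the sketch runs in a single local process, which mirrors the offline property described above. In PrivateGPT itself, these steps are handled by real document parsers, embedding models, and a vector store rather than in-memory word counts.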

It ships with a FastAPI-based server compatible with OpenAI’s API spec, and a Gradio-powered web UI, making it easy to explore, prototype, and integrate within existing workflows.
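
Because the server follows OpenAI's API conventions, a client can in principle talk to it with a plain HTTP request. The sketch below builds such a request with Python's standard library. The base URL and port are assumptions for a local deployment, the `/v1/chat/completions` path follows the OpenAI convention rather than being taken from this article, and the helper names are hypothetical; check the official documentation for the exact endpoints and fields.

```python
import json
import urllib.request

# Assumption: a PrivateGPT server reachable locally; adjust host and port as needed.
BASE_URL = "http://localhost:8001"


def build_chat_request(question: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "messages": [{"role": "user", "content": question}],
        "stream": False,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question: str) -> str:
    """Send the request and return the first answer (requires a running server)."""
    with urllib.request.urlopen(build_chat_request(question), timeout=60) as resp:
        body = json.load(resp)
    # Assumes an OpenAI-style response body: choices -> message -> content.
    return body["choices"][0]["message"]["content"]


# Building the request is safe offline; call ask(...) only against a live server.
request = build_chat_request("Summarize the retention policy.")
```

Keeping request construction separate from sending makes the payload easy to inspect or log before any call is made.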

Privacy-first architecture built for extensibility

The architecture of PrivateGPT is based on LlamaIndex, ensuring flexibility and modularity. Key design principles include:

- Dependency injection, enabling easy component replacement.

- Service/API separation, for clearer code organization.

- Minimal abstractions, to simplify customization and deployment.
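
As a rough illustration of the first two principles, the following sketch uses plain constructor injection in Python. It is not PrivateGPT's code and does not use its actual injection mechanism; all class and function names (`LLM`, `EchoLLM`, `UppercaseLLM`, `ChatService`, `chat_endpoint`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Protocol


class LLM(Protocol):
    """Interface that any language-model component must satisfy."""

    def complete(self, prompt: str) -> str: ...


class EchoLLM:
    """Placeholder component: echoes the prompt back."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseLLM:
    """Alternative placeholder component: uppercases the prompt."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


@dataclass
class ChatService:
    """Service layer: holds the logic and receives its LLM from outside."""

    llm: LLM

    def answer(self, question: str) -> str:
        return self.llm.complete(question)


def chat_endpoint(service: ChatService, question: str) -> dict:
    """API layer: shapes the response and delegates the work to the service."""
    return {"answer": service.answer(question)}


# Swapping the component requires no change to the service or the endpoint.
first = chat_endpoint(ChatService(EchoLLM()), "hi")
second = chat_endpoint(ChatService(UppercaseLLM()), "hi")
```

The service never constructs its own model, so replacing one component with another is a change at the wiring point only, and the API function stays free of model-specific logic.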

It supports integration with leading tools like Qdrant (vector DB), LlamaCpp (local LLMs), and SentenceTransformers (embeddings). It also includes useful tooling such as ingestion scripts, automated model downloads, and folder monitoring.

Enterprise-grade deployments with European infrastructure

PrivateGPT is suited to enterprise use cases where data protection is critical. It can be deployed on-premises, on bare-metal servers, or in fully private clouds.

One key option for European businesses is to deploy PrivateGPT on infrastructure from Stackscale, a European cloud and bare-metal provider with data centers in Spain and the Netherlands. Stackscale offers dedicated servers with GPU support, suited to running generative AI workloads locally while complying with GDPR and European data residency requirements.

This setup provides an ideal alternative for organizations that cannot rely on public cloud platforms like AWS, GCP, or Azure due to regulatory or security concerns.

From open source experiment to enterprise-ready platform

Originally launched in May 2023, PrivateGPT quickly became a leading reference for those looking to use LLMs in fully private, offline environments. It also laid the foundation for Zylon, a commercial AI workspace designed for regulated industries and recently integrated into Telefónica Tech’s AI portfolio.

PrivateGPT continues to evolve with contributions from a growing open source community, and remains a flexible and powerful base for building privacy-respecting AI solutions.

Join the community

PrivateGPT is open for contributions and offers a clear development structure with testing, formatting, and type-checking tools included. All documentation, from setup and deployment to API usage and UI features, is available at: https://docs.privategpt.dev/

PrivateGPT provides a practical and secure path to adopting generative AI with no compromises on data control. Whether deployed on local servers or with trusted European providers like Stackscale, it empowers organizations to unlock AI’s potential while retaining full ownership of their information.