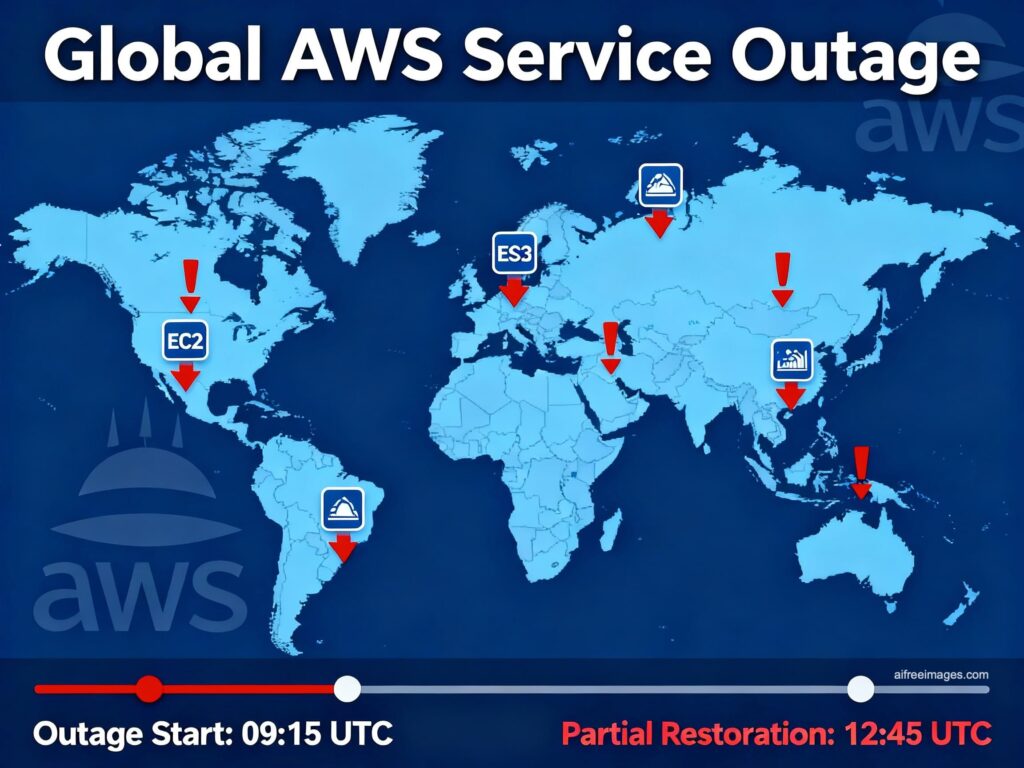

Monday’s AWS outage (Oct 20) once again showed how fragile our digital fabric becomes when too many parts hinge on the same point. In Spain, services as diverse as Bizum, Ticketmaster, Canva, Alexa, and multiple online games slowed down or went dark for hours. The epicenter was US-EAST-1 (N. Virginia): a DNS issue affecting DynamoDB cascaded through EC2, Lambda, load balancers, and dozens of dependent services.

Beyond the immediate incident, one conclusion stands out: we still over-concentrate risk in one region of one hyperscaler, and Europe continues to lack pre-planned alternatives when a giant stumbles. As David Carrero, co-founder of Stackscale (grupo Aire), put it: “Many companies in Spain and Europe bet everything on a US hyperscaler and don’t have a plan B even for critical services. HA is necessary, but if everything depends on a common element, HA fails.”

What happened (and why Spain felt it)

- Trigger: DNS resolution failures for DynamoDB in US-EAST-1.

- Cascade: EC2 instance launches failed, Network Load Balancer health checks broke, Lambda invocations errored; queues filled up and throttling kicked in while services recovered.

- Impact in Europe: Even workloads hosted in EU regions rely on global control planes and US-EAST-1-anchored dependencies (identity, queues, orchestration). Hence, in Spain we saw login failures, 5xx pages, latency spikes, and backlogs, despite “being in Europe.”

“We see this pattern because so few architectures are truly multi-region,” Carrero notes. “Control planes in a single region, data centralized for convenience, and failovers never rehearsed. When a big bump arrives, we all stop.”

Technical takeaways from the outage

- US-EAST-1 can’t be the default home for everything: it is convenient and cheap, but it is also a systemic risk.

- Multi-AZ ≠ resilience: when a cross-cutting component fails (e.g., a DNS tier of a core service), all AZs suffer.

- A plan B must be practiced: a runbook without regular gamedays is just paper.

- Observability and DNS matter: if your monitoring and identity also depend on the impacted region, you go blind precisely when you need visibility.

- Communication: clear, frequent updates reduce uncertainty and support load.

What cloud-first architectures should change now

1) True multi-region

Decouple control planes and data, and practice failovers. “Not everything needs active-active, but the critical pieces do,” says Carrero. “Define realistic RTO/RPO, then design for them—not the other way around.”
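As a rough illustration of designing from the objectives rather than the other way around, the sketch below maps a service's RTO/RPO targets to one of the patterns discussed in this article. The `Objective` type, the `choose_pattern` function, and the numeric thresholds are assumptions made for the example, not industry standards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Objective:
    rto_seconds: int  # maximum tolerated time to restore the service
    rpo_seconds: int  # maximum tolerated window of lost data


def choose_pattern(obj: Objective) -> str:
    """Pick a resilience pattern from the targets.

    The thresholds are illustrative assumptions; each business
    should set its own per service.
    """
    if obj.rpo_seconds == 0 or obj.rto_seconds <= 60:
        return "active-active"
    if obj.rto_seconds <= 15 * 60:
        return "warm-standby"
    return "pilot-light"
```

For instance, a payments service with `Objective(rto_seconds=30, rpo_seconds=0)` would land on active-active, while an internal reporting tool that tolerates hours of downtime would land on pilot-light.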

2) DNS/CDN with failover

Use GTM/health checks based on service behavior, not pings; configure alternate origins in your CDN. Avoid implicit anchors to a single region.
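A minimal sketch of what "health checks based on service behavior" could look like: an origin counts as healthy only if every functional probe (for example a login followed by a read) succeeds, and traffic goes to the first healthy origin. The names `Probe`, `is_healthy`, and `pick_origin` are hypothetical, and the probes are injected as plain callables so the example stays independent of any particular DNS or CDN vendor.

```python
from typing import Callable, Optional, Sequence, Tuple

# A probe exercises a real feature and returns True on success.
Probe = Callable[[], bool]


def is_healthy(probes: Sequence[Probe]) -> bool:
    """An origin is healthy only if every functional probe passes."""
    for probe in probes:
        try:
            if not probe():
                return False
        except Exception:
            # Timeouts and errors count as failures, not as "unknown".
            return False
    return True


def pick_origin(origins: Sequence[Tuple[str, Sequence[Probe]]]) -> Optional[str]:
    """Return the first healthy origin in priority order, or None."""
    for name, probes in origins:
        if is_healthy(probes):
            return name
    return None
```

With this shape, an origin that still answers on TCP/443 but fails its login probe is skipped in favor of the alternate origin.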

3) Backups that restore (on the clock)

Keep immutable/air-gapped backups and run timed restore drills. A backup only exists once it has been restored.
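One way to put a restore "on the clock" is to wrap it in a timed drill and compare the elapsed time with the service's RTO. In the sketch below, `restore` is a placeholder for whatever procedure restores and verifies the backup; `DrillResult` and `run_restore_drill` are names invented for the example.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class DrillResult:
    restored: bool          # did the restore (and its verification) succeed?
    elapsed_seconds: float  # wall-clock duration of the drill
    within_rto: bool        # succeeded AND finished inside the RTO


def run_restore_drill(
    restore: Callable[[], bool],
    rto_seconds: float,
    clock: Callable[[], float] = time.monotonic,
) -> DrillResult:
    """Time a restore procedure and check it against the RTO."""
    start = clock()
    try:
        ok = bool(restore())
    except Exception:
        ok = False  # a restore that raises is a failed drill
    elapsed = clock() - start
    return DrillResult(ok, elapsed, ok and elapsed <= rto_seconds)
```

The `clock` parameter exists only so the drill logic can be tested without waiting; in a real drill the default monotonic clock is enough.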

4) Global dependencies under control

Identify “global” services that anchor in US-EAST-1 (IAM, queues, catalogs, global tables) and prep alternatives or mitigations.
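Identifying those anchors is essentially a graph problem: a service is exposed if it depends on US-EAST-1 directly or through any chain of other services. The sketch below walks a dependency map to find everything affected. The function name and the service names in the usage example are hypothetical; a real map would come from your own inventory.

```python
from typing import Dict, List, Set


def services_anchored_to(deps: Dict[str, List[str]], anchor: str) -> Set[str]:
    """Return every service that depends on `anchor`, directly or transitively.

    `deps` maps each service to the things it depends on.
    """
    # Invert the edges: for each dependency, who relies on it?
    dependents: Dict[str, Set[str]] = {}
    for service, targets in deps.items():
        for target in targets:
            dependents.setdefault(target, set()).add(service)

    affected: Set[str] = set()
    stack = [anchor]
    while stack:
        node = stack.pop()
        for service in dependents.get(node, ()):
            if service not in affected:  # also guards against cycles
                affected.add(service)
                stack.append(service)
    return affected
```

Usage, with made-up service names:

```python
deps = {
    "checkout": ["auth", "orders-db-eu"],
    "auth": ["idp-global"],
    "idp-global": ["us-east-1"],
    "catalog": ["orders-db-eu"],
}
exposed = services_anchored_to(deps, "us-east-1")
# exposed contains "idp-global", "auth" and "checkout", but not "catalog"
```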

5) Selective multicloud (when it truly helps)

For continuity, sovereignty, or regulatory reasons, introduce a second provider for the minimum viable set: identity/registry, backups, DNS, observability.

“It’s not about abandoning hyperscalers,” Carrero stresses, “it’s about de-concentrating risk and gaining resilience.”

Mission-critical patterns that actually work

Active/Active multi-region (same cloud)

- Use for: payments, core identity, transactional heartbeats.

- How: (near-)synchronous replication where budget allows; quorum-based distributed DBs; conflict-free replicated state such as counters (CRDTs); sagas for multi-step transactions.

- DNS/GTM: health checks that exercise features, not just TCP/443.

- Trade-off: cost and engineering, but best RTO/RPO.
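To make the conflict-free counter idea concrete, here is a minimal sketch of a grow-only counter (G-Counter), one of the simplest CRDTs. Each region increments only its own slot, and merging takes the per-region maximum, so replicas converge regardless of the order or number of merges. The class is a teaching example, not a production implementation, and the region names in the usage are illustrative.

```python
from typing import Dict


class GCounter:
    """Grow-only counter CRDT: one slot per region, merge by maximum."""

    def __init__(self, region: str) -> None:
        self.region = region
        self.counts: Dict[str, int] = {}

    def increment(self, n: int = 1) -> None:
        if n < 0:
            raise ValueError("a G-Counter can only grow")
        self.counts[self.region] = self.counts.get(self.region, 0) + n

    def merge(self, other: "GCounter") -> None:
        # Taking the max per slot makes merge commutative,
        # associative and idempotent.
        for region, count in other.counts.items():
            self.counts[region] = max(self.counts.get(region, 0), count)

    @property
    def value(self) -> int:
        return sum(self.counts.values())
```

Usage:

```python
eu, us = GCounter("eu-west-1"), GCounter("us-east-1")
eu.increment(3)
us.increment(2)
eu.merge(us)
us.merge(eu)
# both replicas now report a value of 5
```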

Warm Standby (active/passive hot)

- Use for: most services that are important but not core.

- How: async replication, pre-provisioned standby, promotion in minutes.

- DNS: automatic failover with degrade modes (read-only, limited features).

- Trade-off: you accept RPO>0 (bounded data loss).

Selective multicloud

- Patterns:

  - Pilot-light (minimal always-on set in cloud #2: IdP, DNS, logs/SIEM, status page).

  - Hot-hot only for a small, truly critical subset.

- Move to an alternate provider: continuity layers, immutable backups, break-glass identity.

- Goal: reduce concentration risk without duplicating everything.

Data & replication

- Transactional: distributed DBs with regional quorums; sagas/event sourcing for complex ops.

- Objects: versioning + cross-region replication, legal holds (immutability), air-gaps.

- Catalogs/queues: know where “global” services really anchor and swap for regional alternatives if your RTO/RPO needs it.

Network & DNS

- Two DNS providers and GTM with service-grade health checks.

- Multi-CDN with origin shielding and alt-origins (A/A or A/P).

- Private links (redundant) between cloud and private infra, ideally with an overlay SD-WAN.

Identity & access

- Resilient IdP (cached JWKS with TTL; contextual re-auth rules).

- Break-glass accounts outside the failure domain, with strong MFA (hardware keys).

- App governance to contain consent phishing and OAuth abuse.
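The "cached JWKS with TTL" item can be sketched as a small cache that refreshes signing keys after a TTL but keeps serving the last known keys for a bounded period if the identity provider is unreachable. `JwksCache`, its parameters, and the stale-window policy are assumptions for illustration; `fetch` stands in for whatever call retrieves the key set from your IdP.

```python
import time
from typing import Any, Callable, Dict, Optional


class JwksCache:
    """Cache a JWKS document with a TTL and a bounded stale window."""

    def __init__(
        self,
        fetch: Callable[[], Dict[str, Any]],
        ttl_seconds: float,
        max_stale_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._max_stale = max_stale_seconds
        self._clock = clock
        self._keys: Optional[Dict[str, Any]] = None
        self._fetched_at = 0.0

    def get(self) -> Dict[str, Any]:
        now = self._clock()
        if self._keys is not None and now - self._fetched_at < self._ttl:
            return self._keys  # still fresh
        try:
            self._keys = self._fetch()
            self._fetched_at = now
        except Exception:
            age = now - self._fetched_at
            # Serve stale keys only while inside the allowed window.
            if self._keys is None or age > self._ttl + self._max_stale:
                raise
        return self._keys
```

The stale window is a trade-off: a longer window keeps token validation working through a longer IdP outage, but delays the effect of a key rotation or revocation.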

Observability outside the same failure domain

- Mirror telemetry to another region or provider; keep status pages and operator dashboards off the impacted domain.

Backups that actually restore

- Immutable/disconnected copies; run timed restores as regular drills.

App architecture

- Idempotency + jittered retries; circuit breakers and bulkheads so one fault doesn’t capsize the fleet.

- Decouple with queues/events; limit session state; caches with TTLs that support graceful degradation.

- Degrade modes: read-only, feature flags to disable heavy paths.
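Two of the items above, jittered retries and circuit breakers, can be sketched in a few lines. The backoff uses the "full jitter" variant (a random delay between zero and an exponentially growing, capped bound), and the breaker opens after a number of consecutive failures and allows a trial call once a reset period has passed. All names, defaults, and thresholds are assumptions for the example.

```python
import random
import time
from typing import Callable, List, Optional, TypeVar

T = TypeVar("T")


def backoff_delays(
    attempts: int,
    base: float = 0.1,
    cap: float = 5.0,
    rng: Callable[[], float] = random.random,
) -> List[float]:
    """Full-jitter backoff: delay i is rng() * min(cap, base * 2**i)."""
    return [rng() * min(cap, base * 2 ** i) for i in range(attempts)]


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying it."""


class CircuitBreaker:
    def __init__(
        self,
        failure_threshold: int = 3,
        reset_seconds: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._threshold = failure_threshold
        self._reset = reset_seconds
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset:
                raise CircuitOpenError("circuit open, failing fast")
            # Reset period elapsed: fall through and allow one trial call.
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if self._failures >= self._threshold:
                self._opened_at = self._clock()  # open (or re-open)
            raise
        self._failures = 0
        self._opened_at = None
        return result
```

Retries only make sense for idempotent operations, and failing fast while the circuit is open is what gives a degraded dependency room to recover instead of being hammered by retries.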

Chaos engineering & gamedays

- Drill failures of region, IdP, DNS, queues, DB.

- Measure true RTO, failure dwell time, and MTTR for cutover.
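The metrics in the last item can be derived from three timestamps recorded during each gameday: when the failure was injected, when it was detected, and when service was restored. The sketch below assumes that MTTR for cutover is measured from detection to restoration; other definitions exist, and the `Drill` type and function name are made up for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass(frozen=True)
class Drill:
    failure_at: datetime   # failure injected
    detected_at: datetime  # first alert or human detection
    restored_at: datetime  # service confirmed healthy again

    @property
    def dwell(self) -> timedelta:
        """How long the failure went unnoticed."""
        return self.detected_at - self.failure_at

    @property
    def measured_rto(self) -> timedelta:
        """End-to-end recovery time as users experienced it."""
        return self.restored_at - self.failure_at


def mean_time_to_recover(drills: List[Drill]) -> timedelta:
    """Average detection-to-restoration time across drills."""
    if not drills:
        raise ValueError("no drills recorded")
    total = sum((d.restored_at - d.detected_at for d in drills), timedelta())
    return total / len(drills)
```

Comparing `measured_rto` against the RTO the business signed off on shows whether the runbook actually delivers what was promised.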

Sovereignty and the European complement

You don’t have to give up hyperscalers—but you can balance. “Europe has many winning options we tend to undervalue due to the ‘big cloud pressure’,” says Carrero. “Not only Stackscale can be an alternative or complement; the European and Spanish ecosystem—private cloud, bare-metal, housing, connectivity, backup, managed services—is broad and mature. For 90% of needs that aren’t ‘monster-scale AI’, there’s nothing to envy.”

Practical strategy:

- Place critical data/apps on European infrastructure (private/sovereign) and connect to SaaS/hyperscalers where it adds value.

- Ensure continuity layers (observability, DNS, backups, break-glass identity) are outside the same failure domain.

- Demand firm SLAs, 24/7 support, and exit plans (portability of logs, data, backups).

Resilience checklist

- Signed RTO/RPO per service (by the business).

- Dependency map (who breaks what), including global anchors (US-EAST-1).

- Failover runbooks practiced (quarterly gamedays).

- Immutable backups + recent timed restore success.

- Multi-provider DNS/GTM + multi-CDN with alt-origins.

- IdP with break-glass + key cache; app governance active.

- Observability outside the same cloud/region.

- Feature flags for controlled degradation; circuit breakers in place.

- Continuity partner(s) in Europe (DR, housing, bare-metal, support).

- Executive metrics: % phishing-resistant MFA, TTR, patch latency for exposed assets, restore success rate.

Bottom line

This isn’t about “running away from cloud”; it’s about designing for failure and de-concentrating single points of failure. As Carrero puts it, “HA is not plan B; plan B is a complete alternate route to the same outcome.” Spain and Europe have the technical muscle to complement hyperscalers: distribute risk, prove continuity, and raise the bar for mission-critical operations. The next outage is not a question of if, but when. The difference between a scare and a crisis will be, once again, preparation.