Time to First Byte (TTFB) is the time from a user’s HTTP request to the moment the browser receives the first response byte from your server (or CDN). It spans DNS → connect → TLS → request queueing → application work → first byte. While not a Core Web Vital, TTFB influences LCP, INP (via network backlog/queuing), user-perceived snappiness, crawl budgets, and conversion on slow/variable networks.

Below is a practical, sysadmin-friendly guide: what drives TTFB, how to measure it correctly, and a prioritized, step-by-step plan to reduce it without breaking your stack.

1) What exactly does TTFB include?

A “classic” breaking-down for an HTTPS request:

- DNS resolution (recursive resolver to authoritative).

- TCP/QUIC connect (handshake; for TCP also slow-start).

- TLS handshake (cert exchange, key agreement; resumption/0-RTT can shorten this).

- Request send (client → edge/origin).

- Server queueing (waiting for a worker/thread).

- Application work (routing, DB queries, cache lookups, templates).

- First byte leaves the server and reaches the browser.

TTFB captures the whole chain up through (7). If any one piece drags—high RTT, cold Lambda/Functions, slow DB, overworked PHP-FPM—TTFB grows.

Rules of thumb (good global sites):

- p50 ≤ 200–300 ms in-region, ≤ 500–700 ms intercontinental.

- p95 should not be 3–5× your p50. High tail latency = contention, GC pauses, DB locks, cold starts, or bad peering.

- Focus on percentiles (p50/p90/p95/p99), not just averages.

2) How to measure TTFB (properly)

Use multiple vantage points and layers:

- Real User Monitoring (RUM): TTFB as seen by real browsers across ISPs/regions. Segment by country/ASN/device.

- Synthetic: headless browsers/cURL from multiple regions. Test:

- Direct to origin and via CDN (compare delta).

- With and without cold caches (serverless cold starts, microservice wakeups).

- Server metrics: accept queue length, web workers busy, DB slow query log, cache hit ratios, TLS handshake stats, CPU steal/wait, GC pauses.

- Network path: traceroute/MTR/HTTP/3 reachability; verify DNS is close to users (EDNS Client Subnet/GeoDNS correctness).

Check the waterfall: resolving → connecting → TLS → waiting (TTFB) → content. A long “waiting” often means application (or origin) work; a long connect/TLS points to network/TLS.

3) The TTFB improvement playbook (priority ordered)

A. Put an optimized edge in front (CDN/reverse proxy)

- Terminate TLS at the edge (TLS 1.3, HTTP/2 and HTTP/3/QUIC enabled).

- Use Anycast + good peering to be physically/network-close to users.

- Enable origin shielding (one regional “shield” PoP fetches from origin; others cache from shield) to slash origin fan-out.

- Cache aggressively where legal:

- Set

Cache-Control: public, max-age=..., stale-while-revalidate=... - For static: immutable (

max-age=31536000, immutable) with content hashes in filenames. - For semi-static HTML: short TTL (e.g., 30–120 s) + SWr to hide revalidation cost.

- Set

- For dynamic pages, cache fragments/ESI/edge includes or use edge compute for personalization at the edge while keeping the base HTML hot.

Why it helps: The edge knocks out DNS/connect/TLS RTT and avoids origin trips for hot content; first byte can come from a nearby PoP in tens of ms.

B. Reduce handshake & transport overhead

- TLS 1.3 everywhere; enable session resumption and, if appropriate, 0-RTT for idempotent GETs.

- Prefer HTTP/2 (multiplexing) and HTTP/3/QUIC (no TCP slow-start, faster loss recovery on mobile/wifi).

- Keep keep-alives long enough to reuse connections across requests; avoid connection churn.

- Ensure OCSP stapling is on; certificates sized reasonably; ECDSA preferred for lighter handshakes.

- On origins under your control:

- Use modern TCP congestion control (e.g., BBR) if it performs better on your network.

- Make sure NIC offloads (TSO/GRO) and MTU are sane; avoid PMTUD black holes.

C. Kill redirect chains and geo-mismatches

- Collapse

http → https → www → locale → finalinto one hop (or HSTS preload + canonical). - Make sure DNS/edge geolocation aligns with end-users; wrong GeoDNS can send EU users to US PoPs, adding 80–120 ms.

D. Make the origin fast (when you must hit it)

- Web server

- Nginx: worker_processes auto;

worker_connectionssized;keepalive_requests 1000+; enablehttp2/quic(if available);sendfile on; tcp_nodelay on; tcp_nopush on;. - Apache: mpm_event;

KeepAlive On;MaxRequestWorkerssized to CPU/IO;Protocols h2 h2c http/1.1.

- Nginx: worker_processes auto;

- App runtime

- Pooling: PHP-FPM, Node.js cluster/PM2, JVM thread pools sized via profiling.

- Opcode caches/JIT (OPcache), warmed bundles, avoid dev-mode.

- Avoid synchronous external calls on request path; move to async/queue.

- Database

- Add caching layer (Redis/Memcached) for read-heavy paths; set proper TTLs/keys.

- Indexes on hot queries; use the DB slow log; reduce N+1 queries.

- Connection pool tuned (not too small to queue, not too big to thrash).

- Static offload

- Serve images/CSS/JS from the CDN; don’t let origin waste CPU on bytes.

- Serverless / Functions

- Use provisioned concurrency or warmers for endpoints with strict TTFB targets.

- Trim bundle size and cold-start dependencies; keep connections warm to backends.

E. Smarter HTML caching for dynamic sites

- Cache entire HTML for anonymous users; personalize via:

- Edge keys (cookie-less) and client-side fetch for private bits.

- Signed/cryptographic cookies as cache keys only when needed (beware of cache fragmentation).

- For logged-in users, cache expensive fragments (menus, product tiles, recommendations) at the edge and stitch server-side or via client hydration.

F. Control queueing & overload

- Cap concurrency to the sustainable level:

- Use queueing (e.g., limited worker pool + 503 with

Retry-After) rather than over-committing and inflating TTFB for everyone.

- Use queueing (e.g., limited worker pool + 503 with

- Apply circuit breakers for slow/back-pressure-notified dependencies (payments, search, recommendation API).

- Auto-scale before queues explode; scale based on queue depth and p95 latency, not only CPU.

G. Don’t break caches

- Vary only on what you must:

Vary: Accept-Encodingis fine; avoidVary: *or volatile cookies. - Normalize query strings; strip trackers before cache lookups where allowed.

- Use consistent casing in headers/hosts; cache keys should be stable.

- For APIs, design cacheable GETs with ETags/Last-Modified.

4) A pragmatic 14-day TTFB improvement plan

Day 1–2: Measure & baseline

- RUM dashboard segmented by country/ASN/device; record p50/p95 TTFB.

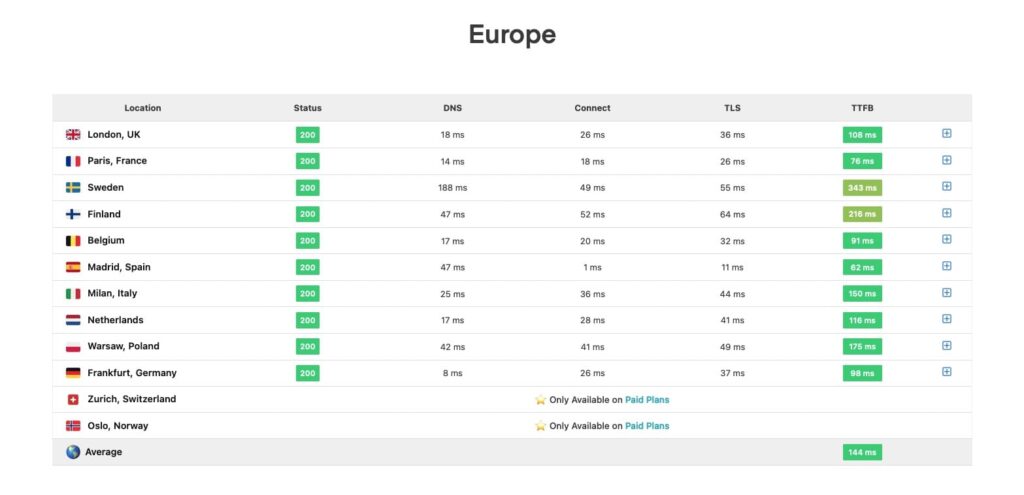

- Synthetic runs from 5–10 regions: CDN vs origin, HTTP/2 vs HTTP/3, warm vs cold caches.

- Capture server metrics (web workers busy, accept queues, DB p95, cache hit ratio).

Day 3–5: Quick network wins

- Ensure TLS 1.3, resumption, OCSP stapling, and HTTP/2 + HTTP/3 at the edge.

- Fix redirect chains; force canonical routes; enable HSTS.

- Confirm users hit nearest PoP; correct GeoDNS/peering issues with your provider.

Day 6–9: Cache more

- Static assets: long-TTL immutable + hashed filenames.

- HTML for anon users: enable short-TTL caching + stale-while-revalidate.

- Turn on origin shielding; inspect origin hit reduction.

Day 10–12: Origin / app

- Profile app endpoints with high TTFB; add Redis read-through where viable.

- Fix top slow queries; add missing indexes; lift connection pool caps if queueing.

- Tune PHP-FPM/Node/JVM pools to avoid both thrash and starvation.

Day 13–14: Guardrails

- Add autoscaling triggers on p95 latency & queue depth.

- Add SLO alerts: TTFB p50/p95 per main geos; synthetic canary checks for login/checkout.

Re-measure; expect p50 drop and smaller p95 gap. Iterate on the worst geos/ASNs.

5) Special cases

- E-commerce & personalization: cache category/listing pages fully; for PDP add fragment cache for reviews/recommendations; push cart/account data via client fetch.

- APIs: implement ETag/If-None-Match,

Cache-Control: public, max-agewhere safe; coalesce duplicate backend requests at the edge (request collapsing). - Serverless: use provisioned concurrency for hot paths; move auth/session validation to edge when possible.

6) Validation: did it work?

- Graphs: TTFB p50/p95 per region before/after; cache hit ratios; origin requests per 1k hits; DB p95.

- Tail reduction: p95/p99 improvement is often worth more than a small p50 drop.

- User outcomes: LCP improvement, conversion/engagement, crawl rate (for SEO-sensitive sites).

7) Common pitfalls that inflate TTFB

- Accidentally disabling CDN cache for HTML via set-cookies or overly broad

Vary. - Per-user cache keys (cookie explosion) killing hit ratio.

- Redirect chains for locale or A/B experiments.

- Serverless cold starts on peak traffic.

- DB connection pools too small (queueing) or too large (thrash).

- Edge → origin fan-out without shielding.

FAQ

Is TTFB the same as LCP?

No. TTFB ends at the first byte; LCP measures when the largest above-the-fold element renders. Lower TTFB generally helps LCP but they’re distinct.

Can HTTP/3 alone fix poor TTFB?

It can shave handshake/transport overhead and improve loss recovery, especially on mobile/wifi, but application and origin latency still dominate dynamic pages.

What’s a “good” TTFB target?

Aim for ≤ 200–300 ms p50 in-region and ≤ 500–700 ms intercontinental; keep p95 within ~2–3× p50. Targets depend on product and audience.

Does caching HTML hurt personalization/analytics?

Not if you split public content (cacheable) and private bits (hydrated client-side or served as edge fragments). Avoid per-user cache keys unless necessary.

Why did my TTFB worsen after adding a WAF/CDN?

Usually mis-routing (wrong PoP/GeoDNS), disabled cache, or extra redirects. Validate POP proximity, cache rules, and TLS/HTTP versions.

Bottom line

TTFB is a composite metric: network + TLS + server + app. The fastest path to better TTFB is a good edge (HTTP/2/3, TLS 1.3, shielding, smart caching) plus a disciplined origin (pooling, DB/indexes, fragment caching). Measure from the user’s perspective, attack tail latency, and iterate region-by-region.