Cloudflare has released VibeSDK, an open-source platform anyone can self-host to generate, preview and deploy full-stack applications from natural-language descriptions. This is not another code assistant that stops at snippets. VibeSDK plans the build, generates a multi-file codebase, runs a live preview in an isolated sandbox, reads console output to self-correct, and then deploys the resulting app across Cloudflare’s global edge.

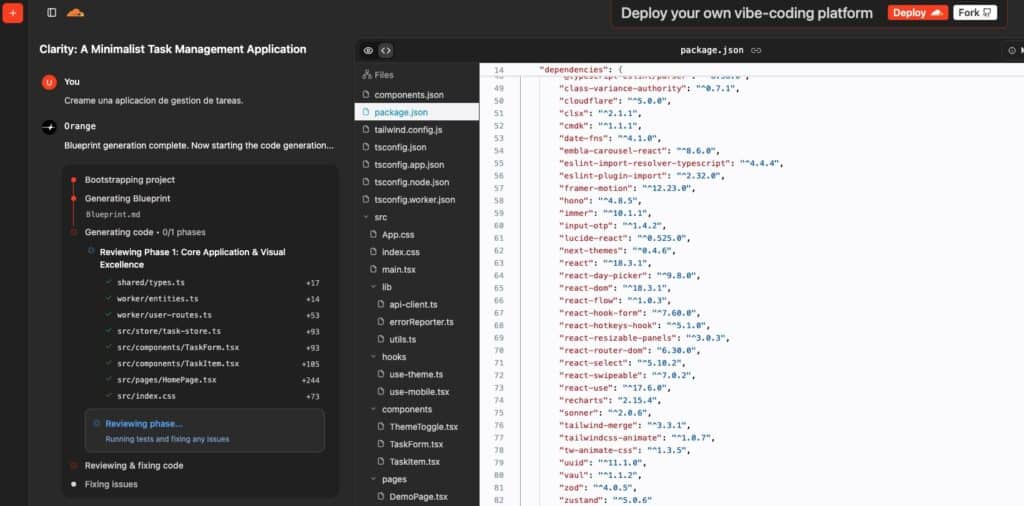

To stress-test the claim, the team built a concrete example: Clarity — A Minimalist Task Management Application. Starting from a tight brief (“zero-noise task manager with keyboard flow, filters, light/dark modes and persistence”), the platform scaffolded the project, compiled it inside a sandbox, published a public preview URL and, when it passed lint/type checks, promoted it to production. Finally, the code was exported to a repository for conventional iteration.

What VibeSDK actually delivers

VibeSDK packages the whole path from idea to running software:

- User-level isolation — every person gets a dedicated container, so one experiment cannot interfere with another.

- Phase-wise generation — the agent drafts a blueprint, lays out the file tree, installs dependencies and produces React + TypeScript + Tailwind progressively (foundation, core logic, styling, integrations, optimizations).

- Live preview — a dev server boots inside the sandbox, and a public URL spins up instantly to validate with product, design or marketing.

- Self-healing — if lint, type checks or the console fail, the agent reads the error, fixes dependencies or code, and retries.

- Deployment — once steady, the app is promoted to Workers for Platforms so each build runs as an isolated edge instance with its own URL.

- No lock-in — the generated code can be exported to GitHub or to the team’s Cloudflare account to continue outside the platform.
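
The self-healing step in the list above can be pictured as a bounded check-and-repair loop. The following is a minimal sketch under stated assumptions, not VibeSDK's actual agent code: the names repairLoop, CheckResult, stubCheck and stubRepair are hypothetical, and the stub repair stands in for the model call that would rewrite the failing files.

```typescript
// Hypothetical shapes for illustration; none of these names come from VibeSDK.
type Files = Map<string, string>;

interface CheckResult {
  ok: boolean;
  errors: string[];
}

interface LoopOutcome {
  files: Files;
  attempts: number;
  passed: boolean;
}

// Run the checks, pass any errors to the repair step, and retry up to a limit.
function repairLoop(
  initial: Files,
  check: (files: Files) => CheckResult,
  repair: (files: Files, errors: string[]) => Files,
  maxAttempts: number = 3,
): LoopOutcome {
  let files = initial;
  let attempts = 0;
  while (attempts < maxAttempts) {
    attempts++;
    const result = check(files);
    if (result.ok) return { files, attempts, passed: true };
    // Only repair if another check will follow.
    if (attempts < maxAttempts) files = repair(files, result.errors);
  }
  return { files, attempts, passed: false };
}

// Stub check: flags any file that still contains a "TODO_FIX" marker.
const stubCheck = (files: Files): CheckResult => {
  const errors: string[] = [];
  files.forEach((source, path) => {
    if (source.includes("TODO_FIX")) errors.push(`${path}: unresolved marker`);
  });
  return { ok: errors.length === 0, errors };
};

// Stub repair: a real agent would send the error text to a model instead.
const stubRepair = (files: Files, _errors: string[]): Files => {
  const next = new Map<string, string>();
  files.forEach((source, path) => next.set(path, source.replace("TODO_FIX", "")));
  return next;
};

const outcome = repairLoop(
  new Map([["src/App.tsx", "export const App = 1; TODO_FIX"]]),
  stubCheck,
  stubRepair,
);
```

Capping the number of attempts is a design choice in this sketch: it keeps a stubborn error from consuming inference budget indefinitely.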

Under the hood, VibeSDK sits on Cloudflare Workers, Durable Objects (stateful AI agents), D1 (SQLite at the edge) with Drizzle ORM, Cloudflare Containers for per-user sandboxes, R2/KV for assets and sessions, and Workers for Platforms with dispatch namespaces for multi-tenant deployment. Crucially, AI Gateway fronts multiple model providers (OpenAI, Anthropic, Google), adds caching, latency metrics, token accounting and cost controls. If several users ask for “a basic todo app,” the gateway can serve a cached response instead of paying for repeated inferences.
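
To make the caching idea concrete, here is a small in-memory sketch of a cache placed in front of an inference call. It is illustrative only: the article does not describe how AI Gateway builds its cache keys, so keying on the model name plus a whitespace- and case-normalized prompt is an assumption, as are the names withCache and normalize. The call is synchronous for brevity, where a real provider call would be asynchronous.

```typescript
// Illustrative only: AI Gateway's real cache keying is not described in the article.
type Infer = (model: string, prompt: string) => string;

// Assumed normalization so trivially different prompts share a cache entry.
function normalize(prompt: string): string {
  return prompt.trim().toLowerCase().replace(/\s+/g, " ");
}

function withCache(infer: Infer) {
  const cache = new Map<string, string>();
  let hits = 0;
  let misses = 0;
  return {
    ask(model: string, prompt: string): string {
      const key = `${model}::${normalize(prompt)}`;
      const cached = cache.get(key);
      if (cached !== undefined) {
        hits++;
        return cached; // served without paying for another inference
      }
      misses++;
      const answer = infer(model, prompt);
      cache.set(key, answer);
      return answer;
    },
    stats: () => ({ hits, misses }),
  };
}

// Usage: the second request differs only in spacing and case, so it hits the cache.
let upstreamCalls = 0;
const gateway = withCache((model, prompt) => {
  upstreamCalls++;
  return `[${model}] plan for: ${prompt}`;
});
const first = gateway.ask("example-model", "A basic todo app");
const second = gateway.ask("example-model", "  a basic   TODO app ");
```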

The Clarity test case: tasks without the noise

The brief

A focused app: create, complete and clear tasks with minimal friction. No heavy navigation. Two themes (light/dark), keyboard-first (Enter, Shift+Enter, Cmd/Ctrl+K), filters (all, active, completed), counter, persistence and lightweight sync.

The initial prompt

“Create a minimalist task manager called Clarity. Clean interface, legible typography, light/dark theme, keyboard shortcuts (add, complete, clear completed, quick-focus), filters and counter. Persist to D1, sync across tabs. Export with React + TypeScript + Tailwind.”

Phase 1 — Blueprint & scaffold

VibeSDK proposed a file structure (/src/components, /src/hooks, /src/routes, /src/lib), generated package.json, Vite, Tailwind and ESLint/TS configs, defined a Durable Object room for cross-tab sync, and created a D1 schema (todos with id, title, done, created_at, updated_at).
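
For readers who want to picture the todos table, the sketch below pairs a row type with plain SQLite DDL. Only the table name and the five column names come from the description above; the column types, the 0/1 encoding of done and the epoch-millisecond timestamps are assumptions, and the generated project would express this through Drizzle rather than a raw SQL string.

```typescript
// Row shape implied by the column list; the column types are assumptions.
interface TodoRow {
  id: string;
  title: string;
  done: number; // SQLite has no boolean type, so 0 or 1
  created_at: number; // assumed Unix epoch milliseconds
  updated_at: number; // assumed Unix epoch milliseconds
}

// Plain SQLite DDL that would be compatible with D1 (hypothetical, not the generated migration).
const CREATE_TODOS_SQL = `
CREATE TABLE IF NOT EXISTS todos (
  id TEXT PRIMARY KEY,
  title TEXT NOT NULL,
  done INTEGER NOT NULL DEFAULT 0,
  created_at INTEGER NOT NULL,
  updated_at INTEGER NOT NULL
);`;

// Build a fresh row; the caller supplies the id and the current time.
function newTodoRow(id: string, title: string, now: number): TodoRow {
  return { id, title: title.trim(), done: 0, created_at: now, updated_at: now };
}

const exampleRow = newTodoRow("t1", "  Write the brief ", 1700000000000);
```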

Phase 2 — Core UI

The agent rendered a clean layout: top bar with theme toggle, centered input, list with checkboxes, filters and counter. Tailwind styles included accessible contrast tokens and visible :focus states; dark mode was wired through data-theme.

Phase 3 — Logic & hotkeys

A small useHotkeys hook bound Enter (add), Ctrl/Cmd+K (quick focus to input or command palette), Ctrl/Cmd+Backspace (clear completed) and arrows for list navigation. State was local for responsiveness, while a Durable Object broadcast kept multiple tabs in sync.
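
The key bindings described above can be sketched as a pure function that maps a key press to an action, which a hook such as useHotkeys could call from its keydown listener. This is an assumption-laden sketch, not the generated hook: KeyPress is a minimal stand-in for the DOM KeyboardEvent, and the action names are hypothetical.

```typescript
// Minimal stand-in for the fields of a DOM KeyboardEvent that the mapping needs.
interface KeyPress {
  key: string;
  ctrlKey: boolean;
  metaKey: boolean;
}

// Hypothetical action names for illustration.
type HotkeyAction = "add" | "focus-input" | "clear-completed" | "move-up" | "move-down";

function resolveHotkey(e: KeyPress): HotkeyAction | null {
  const mod = e.ctrlKey || e.metaKey; // Ctrl on Windows/Linux, Cmd on macOS
  if (mod && e.key.toLowerCase() === "k") return "focus-input";
  if (mod && e.key === "Backspace") return "clear-completed";
  if (mod) return null; // leave other modified keys to the browser
  switch (e.key) {
    case "Enter":
      return "add";
    case "ArrowUp":
      return "move-up";
    case "ArrowDown":
      return "move-down";
    default:
      return null;
  }
}
```

Keeping the mapping free of DOM and React dependencies makes it easy to unit test; the hook itself would only attach the listener and dispatch the returned action.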

Phase 4 — Persistence & API

A Workers router exposed GET/POST/PATCH/DELETE /api/todos. Drizzle handled migrations to D1. A front-end service layer wrapped the fetchers. Type errors in early console runs (e.g., Response.json() DTO mismatches) were auto-fixed by the agent adjusting types and parsing.
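
A rough sketch of that route surface follows, with an in-memory Map standing in for D1 and simplified request and response shapes standing in for the Workers Request and Response objects. All names here (createTodoApi, ApiRequest, isTodoInput) are hypothetical. The runtime guard shows the kind of check that addresses the DTO mismatch mentioned above: a parsed JSON body is untyped, so its shape is verified before use.

```typescript
interface Todo { id: string; title: string; done: boolean; }
interface ApiRequest { method: string; path: string; body?: unknown; }
interface ApiResponse { status: number; body: unknown; }

// Runtime guard: JSON bodies arrive untyped, so check the shape before trusting it.
function isTodoInput(value: unknown): value is { title: string } {
  return typeof value === "object" && value !== null &&
    typeof (value as { title?: unknown }).title === "string";
}

function createTodoApi() {
  const todos = new Map<string, Todo>(); // stand-in for the D1 table
  let nextId = 1;

  return function handle(req: ApiRequest): ApiResponse {
    const match = /^\/api\/todos(?:\/([^/]+))?$/.exec(req.path);
    if (!match) return { status: 404, body: { error: "not found" } };
    const id: string | undefined = match[1];

    if (req.method === "GET" && id === undefined) {
      const list: Todo[] = [];
      todos.forEach((todo) => list.push(todo));
      return { status: 200, body: list };
    }
    if (req.method === "POST" && id === undefined) {
      const input = req.body;
      if (!isTodoInput(input)) return { status: 400, body: { error: "title required" } };
      const todo: Todo = { id: String(nextId++), title: input.title, done: false };
      todos.set(todo.id, todo);
      return { status: 201, body: todo };
    }

    const existing = id === undefined ? undefined : todos.get(id);
    if (!existing) return { status: 404, body: { error: "not found" } };

    if (req.method === "PATCH") {
      const raw = req.body;
      const patch = (typeof raw === "object" && raw !== null ? raw : {}) as
        { title?: unknown; done?: unknown };
      const updated: Todo = {
        id: existing.id,
        title: typeof patch.title === "string" ? patch.title : existing.title,
        done: typeof patch.done === "boolean" ? patch.done : existing.done,
      };
      todos.set(updated.id, updated);
      return { status: 200, body: updated };
    }
    if (req.method === "DELETE") {
      todos.delete(existing.id);
      return { status: 204, body: null };
    }
    return { status: 405, body: { error: "method not allowed" } };
  };
}
```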

Phase 5 — Polish & deployment

Lint and type checks passed; Tailwind warnings were resolved automatically and tsconfig was tuned. Once the preview stayed green, the app was deployed through Workers for Platforms, which returned a public production URL for Clarity.

Functional outcome

- Add, complete, inline edit and delete with keyboard flow.

- Filters and a live counter; “clear completed” via click or shortcut.

- D1 persistence with migrations; cross-tab sync via Durable Object.

- Light/dark theme remembered between sessions.

- Export to GitHub for CI/CD, testing and long-term maintenance.
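
The filter, counter and "clear completed" behaviors listed above reduce to a few pure functions over the task list. This is a sketch of one way to write them, not the code VibeSDK generated; the Task shape and function names are assumptions.

```typescript
type Filter = "all" | "active" | "completed";

interface Task {
  id: string;
  title: string;
  done: boolean;
}

// Return the tasks visible under the selected filter.
function applyFilter(tasks: Task[], filter: Filter): Task[] {
  if (filter === "active") return tasks.filter((t) => !t.done);
  if (filter === "completed") return tasks.filter((t) => t.done);
  return tasks.slice();
}

// The live counter: how many tasks are still open.
function remainingCount(tasks: Task[]): number {
  return tasks.filter((t) => !t.done).length;
}

// "Clear completed": keep only the tasks that are not done.
function clearCompleted(tasks: Task[]): Task[] {
  return tasks.filter((t) => !t.done);
}

const sampleTasks: Task[] = [
  { id: "1", title: "Draft brief", done: false },
  { id: "2", title: "Pick palette", done: true },
  { id: "3", title: "Wire hotkeys", done: false },
];
```

Because each function returns a new array instead of mutating its input, the same helpers can be reused whether state lives in React or is replayed from a sync message.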

Lessons from building Clarity

1) The “last mile” is where value sits

Code generation is only the opening move; the repair loop matters. VibeSDK’s ability to read logs, reconcile types and retry pushes the experience closer to a tireless senior engineer who chases a stack trace until it’s gone.

2) Edge-first by default

With Workers for Platforms, every app becomes an isolated, globally deployed instance with its own URL and low latency. No cluster sizing, no orchestrator boilerplate. For preview, Cloudflare Containers are tiered; standard was ample for Clarity.
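
Since each app is reached through its own URL, a front-door Worker has to work out which app a request is for before handing it to that app's isolated instance. The sketch below shows only that first step, extracting an app label from the hostname. It is an assumption about how such routing could look, not VibeSDK's dispatcher: the function name, the single-label rule and the example domain are all hypothetical.

```typescript
// Returns the app label for hosts like "clarity.apps.example.com", or null otherwise.
// Hypothetical helper; the real dispatch logic is not described in the article.
function appNameFromHost(host: string, baseDomain: string): string | null {
  const hostname = host.toLowerCase().replace(/:\d+$/, ""); // drop any port
  const suffix = "." + baseDomain.toLowerCase();
  if (!hostname.endsWith(suffix)) return null;
  const label = hostname.slice(0, hostname.length - suffix.length);
  // Accept exactly one DNS label: letters, digits and inner hyphens.
  return /^[a-z0-9]([a-z0-9-]*[a-z0-9])?$/.test(label) ? label : null;
}

const resolvedApp = appNameFromHost("Clarity.apps.example.com:443", "apps.example.com");
```

In a Workers for Platforms setup, a label like this would then be used to look up the matching user Worker in the dispatch namespace; a null result would fall through to the platform's own UI or a 404.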

3) Cost awareness baked in

AI Gateway trims redundant inference costs through caching, and emits the latency and token telemetry ops teams need to choose the right model per task. In any multi-user installation, that layer avoids end-of-month budget surprises.

4) Export or it didn’t happen

Handing off to GitHub and continuing with the team’s pipeline (PRs, tests, lint, preview envs) removes fear of platform dependency. The platform accelerates the 0→1; the team retains ownership for 1→n.

How it scales: thousands or millions of apps

Each generated application deploys as an isolated Worker instance with a unique subdomain. Thanks to the edge footprint, the platform can fan out horizontally without wiring a bespoke PaaS. Previews run in Cloudflare Containers with selectable instance types:

- dev: 256 MiB memory, 1/16 vCPU, 2 GB disk (dev/test)

- basic: 1 GiB memory, 1/4 vCPU, 4 GB disk (light apps)

- standard: 4 GiB memory, 1/2 vCPU, 4 GB disk (default for most)

- enhanced: 4 GiB memory, 4 vCPUs, 10 GB disk (Enterprise)

The SANDBOX_INSTANCE_TYPE variable tunes preview performance, compile/build times and concurrent capacity.
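
As a sketch of how a deployment script might interpret that variable, the snippet below encodes the four tiers as a typed table, reading the three figures as memory, vCPU and disk, and resolves a raw value to a known tier. Falling back to standard for a missing or unrecognized value is an assumption based on the "default for most" note, and the names TIERS and resolveInstanceType are hypothetical.

```typescript
type InstanceType = "dev" | "basic" | "standard" | "enhanced";

interface Tier {
  memoryMiB: number;
  vcpu: number;
  diskGB: number;
}

// Figures taken from the tier list above.
const TIERS: Record<InstanceType, Tier> = {
  dev: { memoryMiB: 256, vcpu: 1 / 16, diskGB: 2 },
  basic: { memoryMiB: 1024, vcpu: 1 / 4, diskGB: 4 },
  standard: { memoryMiB: 4096, vcpu: 1 / 2, diskGB: 4 },
  enhanced: { memoryMiB: 4096, vcpu: 4, diskGB: 10 },
};

// Resolve a raw SANDBOX_INSTANCE_TYPE value; unknown or missing values
// fall back to "standard" (an assumption, not documented behavior).
function resolveInstanceType(raw: string | undefined): InstanceType {
  const value = (raw === undefined ? "standard" : raw).trim().toLowerCase();
  if (Object.prototype.hasOwnProperty.call(TIERS, value)) {
    return value as InstanceType;
  }
  return "standard";
}

const chosen = resolveInstanceType(" Enhanced ");
const chosenTier = TIERS[chosen];
```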

What teams need to deploy it

VibeSDK ships with a one-click “Deploy to Cloudflare” flow. You’ll need:

- A Cloudflare account with a paid Workers plan, a Workers for Platforms subscription, and Advanced Certificate Manager if you map a first-level wildcard (e.g., *.apps.yourdomain.com) for previews.

- A Google Gemini key (GOOGLE_AI_STUDIO_API_KEY).

- Secrets such as JWT_SECRET, WEBHOOK_SECRET and SECRETS_ENCRYPTION_KEY, plus a CUSTOM_DOMAIN already managed on Cloudflare.

- A wildcard CNAME (*.subdomain) pointing to your base domain so preview URLs resolve correctly.

The deploy flow provisions the Worker and creates a repository in your account. Pushes to main auto-publish. A bun run deploy script reads secrets from .prod.vars. OAuth (Google/GitHub) can be added after the first deploy if you require login gating.
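
Before running the deploy script, it can help to confirm that every required variable is present. The sketch below parses KEY=value text and reports what is missing. It assumes .prod.vars uses a dotenv-style format, which the article does not confirm, and the helper names are hypothetical; reading the file from disk is left out so the example stays self-contained.

```typescript
// Variable names listed in the requirements above.
const REQUIRED_VARS: string[] = [
  "GOOGLE_AI_STUDIO_API_KEY",
  "JWT_SECRET",
  "WEBHOOK_SECRET",
  "SECRETS_ENCRYPTION_KEY",
  "CUSTOM_DOMAIN",
];

// Parse dotenv-style text (assumed format): KEY=value, "#" comments, optional quotes.
function parseVars(text: string): Map<string, string> {
  const vars = new Map<string, string>();
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.charAt(0) === "#") continue;
    const eq = line.indexOf("=");
    if (eq <= 0) continue; // skip lines without a key
    const key = line.slice(0, eq).trim();
    const value = line.slice(eq + 1).trim().replace(/^["']|["']$/g, "");
    vars.set(key, value);
  }
  return vars;
}

// Report required variables that are absent or empty.
function missingVars(text: string): string[] {
  const vars = parseVars(text);
  return REQUIRED_VARS.filter((name) => {
    const value = vars.get(name);
    return value === undefined || value === "";
  });
}

// Example input with placeholder values only.
const sampleVars = [
  "# example only",
  "GOOGLE_AI_STUDIO_API_KEY=abc123",
  'JWT_SECRET="s3cret"',
  "CUSTOM_DOMAIN=",
].join("\n");

const missing = missingVars(sampleVars);
// missing is ["WEBHOOK_SECRET", "SECRETS_ENCRYPTION_KEY", "CUSTOM_DOMAIN"]
```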

Security posture

By default, VibeSDK encrypts secrets with Cloudflare KMS, runs per-user sandboxes, sanitizes inputs, applies rate limiting to reduce abuse, integrates content safety for generated output, and writes audit logs for generation activities. The troubleshooting guide covers permission hiccups, D1 provisioning delays, AI Gateway auth, missing vars and container tier misconfigurations.
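
The article does not say which rate-limiting algorithm VibeSDK uses, so the following is a generic fixed-window limiter offered only to illustrate the idea of capping generation requests per user. The class name, the limits and the choice of a fixed window over a sliding window or token bucket are all assumptions.

```typescript
// Generic fixed-window rate limiter; not VibeSDK's implementation.
class FixedWindowLimiter {
  private readonly limit: number;
  private readonly windowMs: number;
  private readonly windows = new Map<string, { start: number; count: number }>();

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns true if the request identified by `key` is allowed at time `now` (ms).
  allow(key: string, now: number): boolean {
    const current = this.windows.get(key);
    if (current === undefined || now - current.start >= this.windowMs) {
      // First request, or the previous window has expired: start a new one.
      this.windows.set(key, { start: now, count: 1 });
      return true;
    }
    if (current.count >= this.limit) return false;
    current.count++;
    return true;
  }
}

// Usage: at most 2 requests per user per second (illustrative numbers).
const limiter = new FixedWindowLimiter(2, 1000);
const decisions = [
  limiter.allow("user-a", 0),
  limiter.allow("user-a", 10),
  limiter.allow("user-a", 20), // third request in the same window is refused
  limiter.allow("user-b", 20), // other users have their own window
  limiter.allow("user-a", 1000), // a new window has started
];
```

Passing the clock in as a parameter keeps the limiter deterministic and easy to test; a deployed version would read the current time and keep its counters in shared state.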

Where it fits inside an organization

- Internal teams — marketing spins up landing pages, sales creates ad-hoc dashboards, support assembles micro-tools; preview links make stakeholder approval frictionless.

- SaaS platforms — customers can extend products by describing integrations or workflows instead of learning an API.

- Education — students learn by describing and seeing software run; generated code doubles as teaching material for patterns and pitfalls.

- Agencies — prototypes in hours for client pitches, then export to the client’s repo for handover.

Constraints (and responsibilities)

VibeSDK doesn’t remove engineering judgment. Architecture, security and data handling remain team decisions. If you need corporate SSO, regulated PII, rigorous auditing or industry-grade compliance, extend the platform with your templates, components, validators, OAuth, secret policies and rate controls. The platform gets you to working software fast; turning it into a production program is about integrating it into your SDLC, reviews and monitoring.

Try it yourself

- Live experience: build.cloudflare.dev

- One-click deploy: requires Workers + Workers for Platforms, a domain and a Gemini key

- Configure vars: GOOGLE_AI_STUDIO_API_KEY, JWT_SECRET, WEBHOOK_SECRET, SECRETS_ENCRYPTION_KEY, CUSTOM_DOMAIN, plus the wildcard CNAME for previews

- Optional: add OAuth (Google/GitHub) after first deploy

- Export: send to GitHub and keep iterating with your CI/CD

Why this matters right now

The last few years proved that models can write code. What blocked adoption was the operational gauntlet: wiring dependencies, running the stack, reading failures, fixing them, getting a URL, scaling multi-tenant, and putting guardrails around cost. VibeSDK tackles that last mile with isolation per user, instant previews, automated promotion to the edge, AI Gateway for cost telemetry and caching, and clean export paths.

In Clarity’s case, a prompt became usable software—previewed, deployed and exported—in roughly the time it used to take to argue about scaffolding. For many teams, that’s the difference between experimenting with AI and shipping with AI.

FAQs

Can VibeSDK follow our stack conventions (UI, security, data patterns)?

Yes. It’s open source. Inject your templates, internal component libraries, lint rules, security hooks and validators. The goal is for the agent to generate in your engineering dialect, not a generic one.

How does it keep inference costs in check across models?

Via AI Gateway: multi-model routing (OpenAI, Anthropic, Google), caching of common requests, token accounting, latency monitoring and basic cost tracking. Prompts like “a basic todo app” can be answered from cache to avoid repeat spend.

What happens when apps become complex?

Scale previews by stepping up the sandbox tier (standard → enhanced) and rely on Workers for Platforms for global execution. Because your project is exported, you can split heavy tasks into dedicated services later.

Are we locked into the platform after the initial build?

No. You can export to GitHub or to your Cloudflare account and continue independently. VibeSDK accelerates the start; ownership remains with your team.