The ARM processor architecture, whose name originally stood for Acorn RISC Machine and later for Advanced RISC Machines, has become one of the most influential technologies in modern computing. It powers billions of devices worldwide, from smartphones to servers and IoT devices. Its journey began modestly in the 1980s as a project at Acorn Computers, a British maker of personal computers. This article covers the origins, development, and global impact of ARM processors, tracing their evolution from a niche design to a dominant force in modern technology.

Acorn Computers and the Birth of ARM

Acorn’s Early Success

Acorn Computers was a key player in the British microcomputer industry during the late 1970s and early 1980s. The company gained prominence with the release of the BBC Microcomputer in December 1981. Commissioned by the British Broadcasting Corporation (BBC), this computer became a staple in educational institutions across the UK. It was based on the 8-bit 6502 processor, the same chip that powered the Apple II. The BBC Micro Model B featured 32 KB of RAM and could be fitted with interfaces for floppy and hard disk drives, making it a competitive product in the burgeoning home computing market.

The Need for a More Powerful Processor

By the early 1980s, Acorn recognized the limitations of existing 8-bit processors. The computing industry was shifting toward 16-bit and 32-bit architectures, and Acorn needed a high-performance processor for its next-generation computers. The company explored existing solutions from Intel, Motorola, and National Semiconductor but found them either too expensive or lacking the required efficiency.

The Development of the First ARM Processor

Creating a New Architecture

In 1983, Acorn began developing its own processor, drawing inspiration from the Reduced Instruction Set Computing (RISC) principles developed at UC Berkeley and IBM. The goal was to create a simple yet efficient 32-bit processor that retained the efficiency of the 6502 while providing higher performance.

The project was led by Steve Furber, later a professor of computer engineering at the University of Manchester, and Sophie Wilson (then Roger Wilson), who designed the instruction set. Both had worked on the BBC Micro. They aimed to design a chip that was simple, easy to fabricate, and highly efficient. The key design choices included:

- A fixed instruction length to streamline execution.

- A load/store architecture, meaning arithmetic and logic instructions operate only on registers, while memory is accessed solely through dedicated load and store instructions.

- Minimal transistor count, reducing power consumption and manufacturing costs.
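The first two design choices can be illustrated with a toy interpreter. This is a minimal sketch under stated assumptions: the opcodes, field widths, and the `encode`, `decode`, and `run` helpers are hypothetical and do not reproduce the real ARM instruction encoding. It shows only that every instruction occupies one 32-bit word and that arithmetic touches registers, never memory.

```python
# Toy load/store machine with fixed 32-bit instructions.
# The encoding is hypothetical: op(8 bits) rd(4) rn(4) operand(16).
# It is NOT the real ARM encoding.
LOAD, STORE, ADD, HALT = 0, 1, 2, 3


def encode(op, rd=0, rn=0, operand=0):
    """Pack one instruction into exactly one 32-bit word."""
    return (op << 24) | (rd << 20) | (rn << 16) | (operand & 0xFFFF)


def decode(word):
    """Unpack a 32-bit word; every instruction is split the same way."""
    return (word >> 24) & 0xFF, (word >> 20) & 0xF, (word >> 16) & 0xF, word & 0xFFFF


def run(program, memory):
    """Execute the program until HALT and return the register file."""
    regs = [0] * 16
    pc = 0
    while True:
        op, rd, rn, operand = decode(program[pc])
        pc += 1  # fixed length: the next instruction is always one word later
        if op == LOAD:        # memory -> register
            regs[rd] = memory[operand]
        elif op == STORE:     # register -> memory
            memory[operand] = regs[rd]
        elif op == ADD:       # register + register -> register, no memory access
            regs[rd] = (regs[rn] + regs[operand & 0xF]) & 0xFFFFFFFF
        elif op == HALT:
            return regs
        else:
            raise ValueError(f"unknown opcode {op}")


memory = [7, 35, 0]
program = [
    encode(LOAD, rd=0, operand=0),       # r0 = memory[0]
    encode(LOAD, rd=1, operand=1),       # r1 = memory[1]
    encode(ADD, rd=2, rn=0, operand=1),  # r2 = r0 + r1
    encode(STORE, rd=2, operand=2),      # memory[2] = r2
    encode(HALT),
]
run(program, memory)
print(memory[2])  # prints 42
```

Adding two values held in memory takes four instructions here instead of one, but each instruction is trivial to decode, which is the trade-off a RISC design accepts.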

ARM1: The First ARM Processor

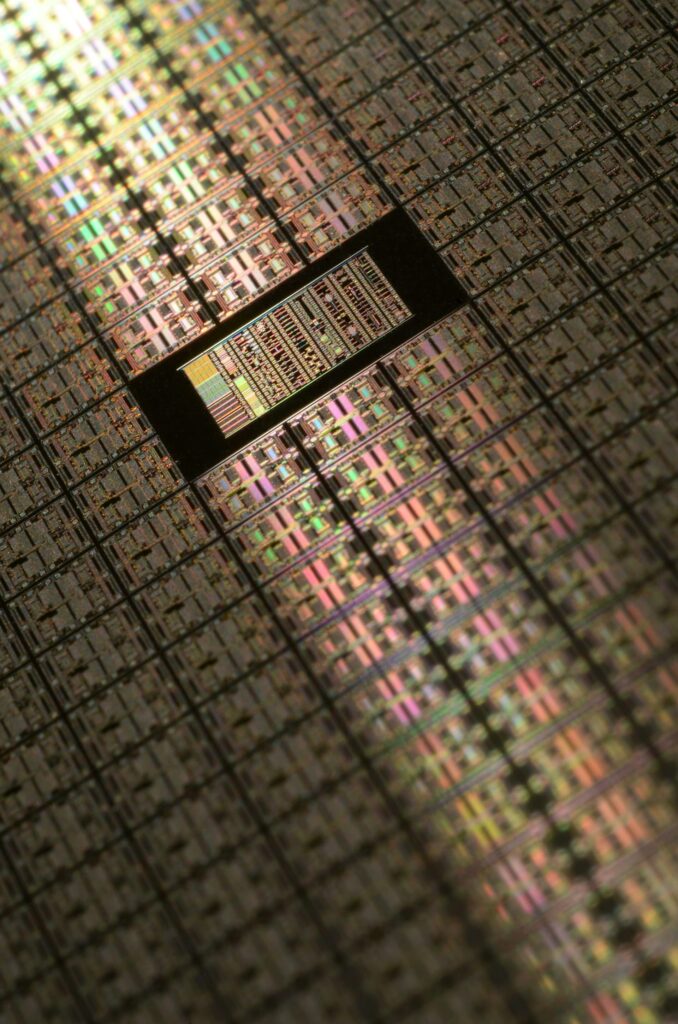

In April 1985, Acorn received working silicon for the first ARM processor, ARM1, fabricated using a 3-micron process at VLSI Technology. The chip exceeded expectations, achieving high efficiency while using fewer than 25,000 transistors. This was a remarkable achievement at the time, as contemporary processors from Intel and Motorola required significantly more transistors.

ARM1 was used mainly as a development tool, including inside Acorn, and never powered a mass-market computer. It served as the foundation for the next iteration of the architecture, ARM2.

ARM2 and Commercial Adoption

The ARM2, released in 1986, introduced significant improvements, including:

- Multiply and accumulate instructions, enabling real-time digital signal processing for audio applications.

- A coprocessor interface, allowing extensions like floating-point accelerators.

- Low transistor count, maintaining the efficiency of the original design.
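To see why a multiply-accumulate instruction matters for signal processing, consider a finite impulse response (FIR) filter, whose inner loop is nothing but repeated multiply-accumulate steps. The sketch below is illustrative only: `mla` and `fir` are hypothetical helper names, and values are treated as unsigned 32-bit integers for simplicity.

```python
def mla(acc, a, b):
    """Multiply-accumulate: acc + a * b, keeping the low 32 bits."""
    return (acc + a * b) & 0xFFFFFFFF


def fir(samples, coeffs):
    """Apply an FIR filter; each output sample is a chain of MLA steps."""
    out = []
    for n in range(len(coeffs) - 1, len(samples)):
        acc = 0
        for k, c in enumerate(coeffs):
            acc = mla(acc, c, samples[n - k])  # one multiply-accumulate per tap
        out.append(acc)
    return out


# A 3-tap filter with unit coefficients sums each window of three samples.
print(fir([1, 2, 3, 4, 5], [1, 1, 1]))  # prints [6, 9, 12]
```

With a single instruction per filter tap rather than a separate multiply and add, the inner loop shrinks, which is what makes real-time audio processing more attainable on a modest clock rate.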

The first commercial implementation of the ARM2 came with the Acorn Archimedes, launched in 1987. This computer featured an 8 MHz ARM2 processor, making it one of the fastest personal computers of its time. Despite its impressive hardware, the Archimedes struggled to gain traction against the IBM PC standard, which dominated the business computing market.

ARM3 and Expanding the Market

Acorn continued to refine its processor design, leading to the release of the ARM3 in 1989. This iteration introduced a 4 KB on-chip cache, significantly improving performance. Acorn leveraged ARM3 in new desktop computers, but the company still faced challenges in competing with IBM-compatible PCs.
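The effect of a small on-chip cache can be sketched with a toy simulation. The model below assumes a direct-mapped cache with 16-byte lines; both are assumptions made for illustration, not a description of how the ARM3 cache was actually organized, and `hit_rate` is a hypothetical helper.

```python
def hit_rate(addresses, cache_bytes=4096, line_bytes=16):
    """Replay byte addresses through a direct-mapped cache; return the hit rate."""
    num_lines = cache_bytes // line_bytes
    tags = [None] * num_lines          # which memory line each cache slot holds
    hits = 0
    for addr in addresses:
        line = addr // line_bytes      # memory line containing this address
        index = line % num_lines       # cache slot that line maps to
        if tags[index] == line:
            hits += 1
        else:
            tags[index] = line         # miss: fetch the line into the cache
    return hits / len(addresses)


# A 1 KB loop fetched one 32-bit word at a time, executed 10 times.
loop = [addr for _ in range(10) for addr in range(0, 1024, 4)]
print(hit_rate(loop))  # prints 0.975
```

Because the loop fits within 4 KB, only the first touch of each line goes to slower external memory; every later access is served on-chip.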

Around this time, interest in ARM’s architecture grew beyond Acorn. The processor’s efficiency caught the attention of other technology companies, setting the stage for a major transition.

Formation of ARM Ltd. and the Mobile Revolution

Apple’s Interest and the Birth of ARM Ltd.

By 1990, Apple was developing the Newton MessagePad, an early personal digital assistant (PDA). Apple needed a low-power, high-performance processor for the device and saw potential in ARM’s architecture. In November 1990, Acorn, Apple, and VLSI Technology formed Advanced RISC Machines Ltd. (ARM Ltd.), an independent company dedicated to the development and licensing of ARM processors.

ARM Ltd. adopted an intellectual property (IP) licensing model: it designed processors but neither manufactured nor sold chips itself. Instead, it licensed the designs to semiconductor companies, which then integrated ARM cores into their own chips. This model allowed ARM to scale rapidly without the financial burden of maintaining fabrication facilities.

ARM’s Rise in the Mobile Market

During the 1990s and early 2000s, ARM processors became the standard for mobile and embedded devices due to their low power consumption. Companies like Nokia, Texas Instruments, and Qualcomm adopted ARM designs for their mobile chips. The ARM7TDMI, introduced in 1994, became one of the most widely used microprocessors in mobile phones, gaming consoles, and industrial applications.

Apple’s iPhone, launched in 2007, further cemented ARM’s dominance in mobile computing. The iPhone’s processors, based on the ARM architecture, delivered high performance while maintaining the power efficiency that battery-powered devices require.

ARM’s Expansion into New Markets

Server and Cloud Computing

In the 2010s, ARM began expanding beyond mobile devices into servers and data centers. Companies like Amazon, Microsoft, and Google explored ARM-based solutions to improve energy efficiency in cloud infrastructure. Today, ARM-based processors run in major cloud data centers, offering competitive performance, often at lower power consumption than comparable x86 chips.

AI and IoT Integration

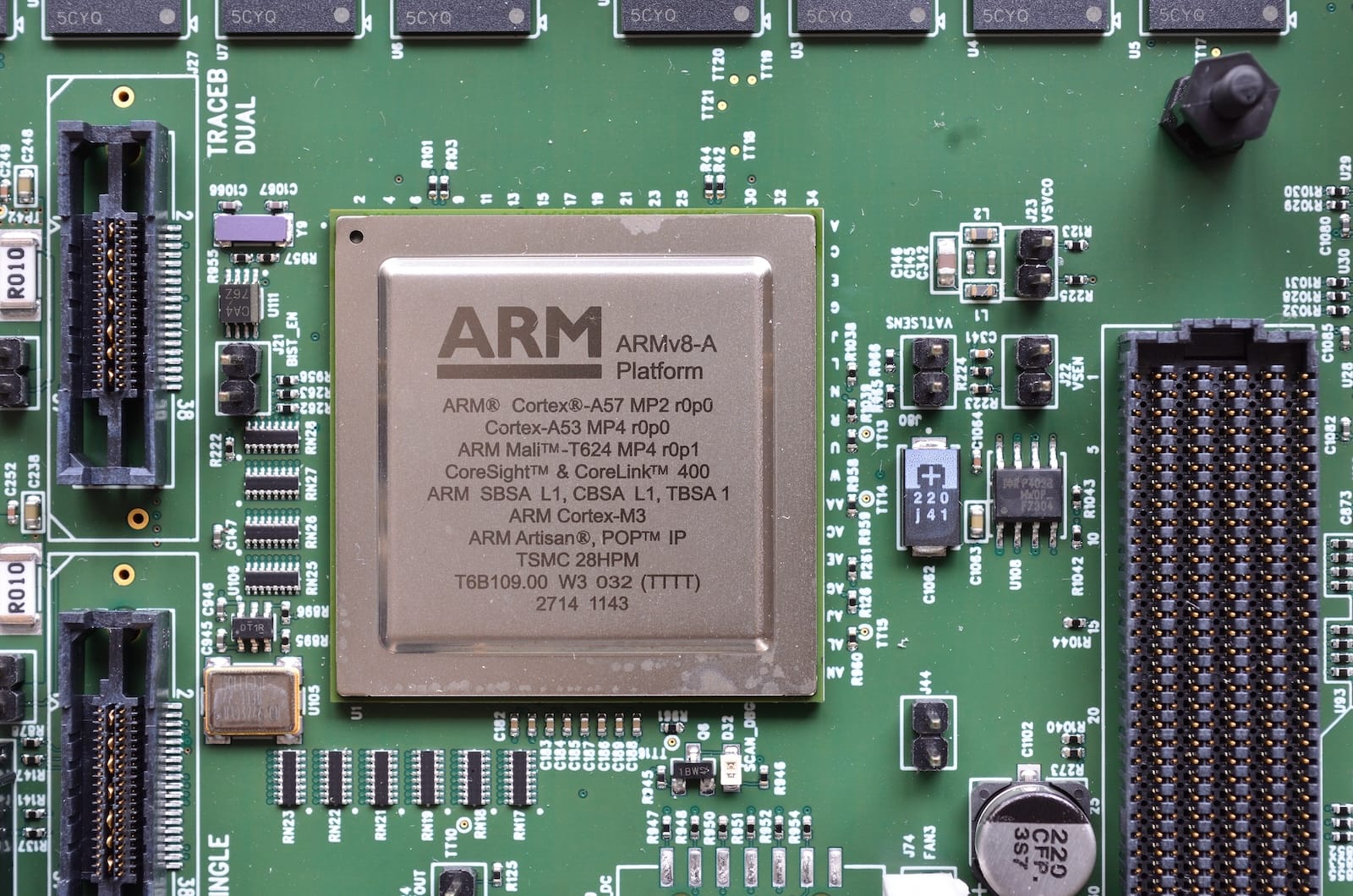

With the rise of artificial intelligence and the Internet of Things (IoT), ARM has continued to innovate. The ARM Cortex series has been optimized for AI workloads, edge computing, and IoT devices. These processors balance performance and energy efficiency, making them ideal for applications ranging from smart home devices to autonomous vehicles.

The Future of ARM

The ARM architecture continues to evolve, pushing the boundaries of computing efficiency. NVIDIA’s attempted acquisition of the company, announced in 2020 and abandoned in 2022 in the face of regulatory opposition, and ARM’s growing influence in AI and cloud computing highlight its increasing strategic importance. With ARM processors now powering everything from smartphones to supercomputers, the architecture is poised to remain a cornerstone of modern technology for years to come.

Conclusion

What started as a project at Acorn Computers in the 1980s has transformed into a global standard in computing. ARM’s commitment to power efficiency, scalability, and innovation has made its architecture ubiquitous across multiple industries. From its early days in the Acorn Archimedes to its role in modern AI and cloud computing, ARM’s journey is a testament to the power of thoughtful, efficient design in shaping the future of technology.