Computing power has grown by a factor of hundreds of billions in half a century

In just five decades, humanity has witnessed an unprecedented technological transformation. We have gone from processors capable of performing tens of thousands of instructions per second to chips that execute thousands of trillions of operations in the same time. It is a dizzying evolution that has radically transformed the world, from the humble beginnings of the Intel 4004 to modern giants like NVIDIA Blackwell, the engine of today’s artificial intelligence era.

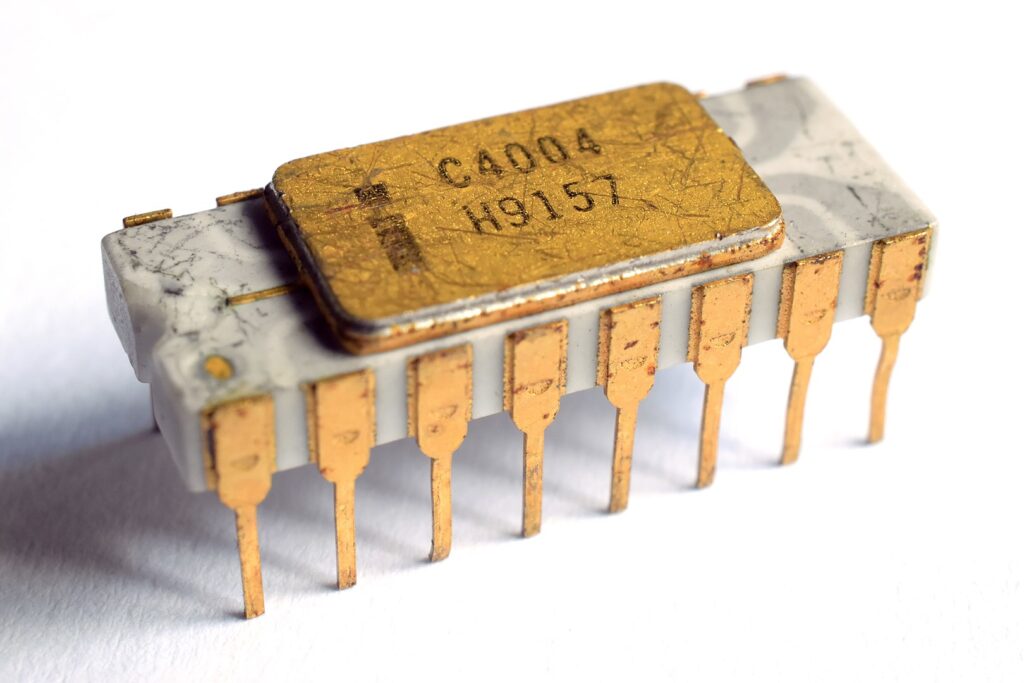

The Birth of an Era: Intel 4004

November 15, 1971, marked a turning point in human history. On that day, Intel, in collaboration with the Japanese company Busicom, launched the world’s first commercial microprocessor: the Intel 4004. This 4-bit chip, with a clock frequency of just 740 kHz and the capacity to execute up to 92,600 instructions per second (IPS), was originally designed for a Japanese desktop calculator.

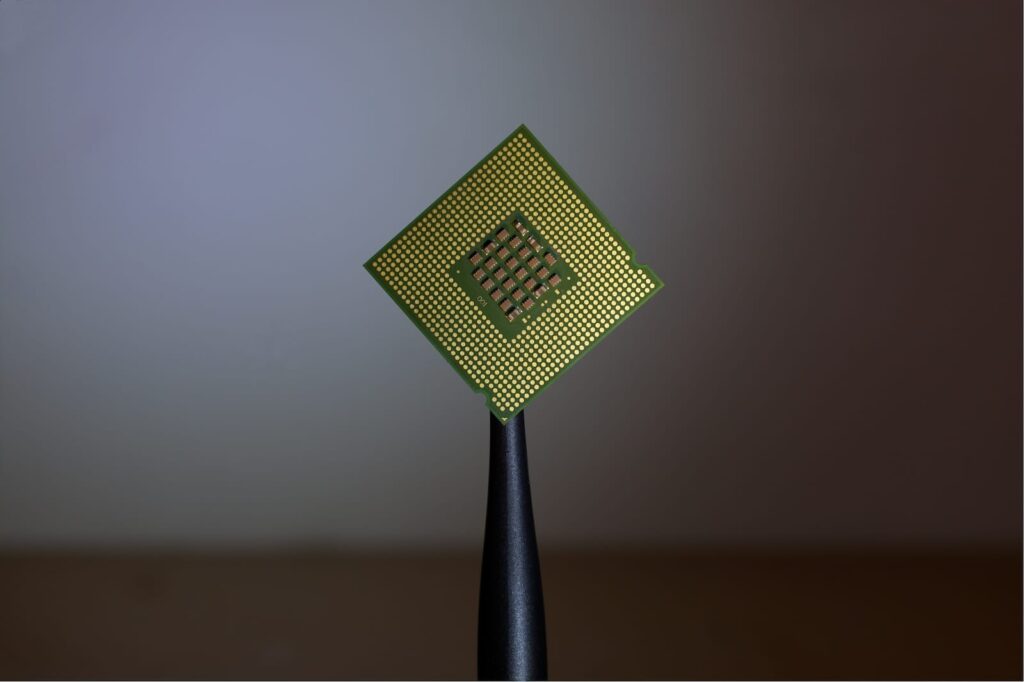

Able to address only 4 KB of program memory and 640 bytes of RAM, it seemed modest even by the standards of its time. Yet the 4004 was a watershed: it opened the door to programmable, versatile, and miniaturized computing. Federico Faggin, Ted Hoff, and Stan Mazor were the minds behind this revolution, with Faggin contributing the silicon gate technology he had pioneered, which made it possible to integrate 2,300 transistors onto a fingernail-sized chip.

The story behind the 4004 is fascinating. Busicom had originally requested 12 custom chips for its calculator, but Intel’s engineers proposed a more elegant solution: just four chips, one of them a programmable processor. When the calculator market collapsed during development, Intel secured the rights to the design from Busicom for $60,000, a decision that would change the course of technological history.

Moore Was Right: The Prophecy That Shaped Half a Century

In 1965, Gordon Moore, who would co-found Intel three years later, observed that the number of components on an integrated circuit was doubling roughly every year; in 1975 he revised the pace to approximately every two years. What began as an empirical observation became the compass that guided the industry for decades. Although many forecasters, including Moore himself, predicted that Moore’s Law would come to an end around 2025, it has served as the engine of an unstoppable race to increase computing power.

This trend, combined with innovations in architecture, parallel systems, multi-core processors, and graphics processing, has produced an exponential leap: computational capacity has multiplied by hundreds of billions since the 4004. Between 1975 and 2009 alone, the computational capacity of computers grew exponentially, doubling roughly every 1.5 years.
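To see how a fixed doubling period compounds, the short Python sketch below projects the 4004’s 2,300 transistors forward from 1971 to 2024 assuming a doubling every two years, and computes the gain implied by a 1.5-year doubling from 1975 to 2009. The helper name `growth_factor` is our own, and the model is the idealized textbook form of Moore’s Law rather than a fit to real data.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplicative growth after `years` if a quantity doubles every
    `doubling_period_years` (idealized exponential model, an assumption)."""
    return 2 ** (years / doubling_period_years)


# Intel 4004 (1971): 2,300 transistors, doubling every two years until 2024.
projected_2024 = 2_300 * growth_factor(2024 - 1971, 2.0)

# Computational capacity doubling every 1.5 years from 1975 to 2009.
capacity_gain_1975_2009 = growth_factor(2009 - 1975, 1.5)

print(f"Projected transistors in 2024: {projected_2024:.3g}")
print(f"Capacity gain 1975-2009: {capacity_gain_1975_2009:.3g}x")
```

The two-year projection lands at roughly 218 billion transistors, strikingly close to the 208 billion NVIDIA quotes for Blackwell (a two-die package), while the 1.5-year doubling compounds to a gain of roughly 6.7 million over 34 years.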

From Humble Silicon to Intelligent Silicon: The Leap to Blackwell

NVIDIA, the leading supplier of artificial intelligence hardware, has taken this advancement to a new dimension with its Blackwell architecture, named after David Harold Blackwell, a mathematician specializing in game theory and statistics, and the first Black scholar inducted into the National Academy of Sciences.

NVIDIA describes Blackwell as the largest GPU ever built: it packs 208 billion transistors, more than 2.5 times as many as NVIDIA’s Hopper GPUs. It is manufactured on a TSMC 4NP process custom-built for NVIDIA and, according to NVIDIA, delivers up to 20 petaFLOPS of AI performance per GPU at 4-bit floating-point (FP4) precision.

The Blackwell architecture represents a paradigm shift. While the 4004 was a sequential processor designed for calculators, Blackwell is designed for massively parallel computing. Each GB100 die contains 104 billion transistors, a 30% increase over the 80 billion transistors of the previous-generation Hopper GH100 die. To avoid being constrained by die size, NVIDIA’s B100 accelerator combines two GB100 dies (hence the 208 billion transistors in total) in a single package, connected by a 10 TB/s link that NVIDIA calls the NV-High Bandwidth Interface (NV-HBI).

The Magnitude of the Leap: Numbers That Defy Comprehension

To understand the magnitude of this transformation, consider these figures:

- Intel 4004 (1971): 92,600 instructions per second

- NVIDIA Blackwell (2024): Up to 20 petaFLOPS at FP4 precision (20,000 trillion operations per second)

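This represents an increase of approximately 216 billion times (20,000 trillion ÷ 92,600 ≈ 2.16 × 10^11) in processing capacity in just over 50 years. To put this in perspective, it is as if a car traveling at 1 km/h in 1971 could now travel at 216 billion km/h, about 200 times the speed of light. The comparison is only indicative, since a 4004 instruction operated on 4-bit integers, whereas Blackwell’s headline figure counts low-precision floating-point operations.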
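The arithmetic behind this comparison is easy to check. The Python sketch below divides the two figures quoted above and rescales the 1 km/h car by the same factor; the only value not taken from the article is the speed of light in vacuum (299,792.458 km/s).

```python
# Figures quoted in the article.
INTEL_4004_IPS = 92_600   # instructions per second, 1971
BLACKWELL_OPS = 20e15     # 20 petaFLOPS (NVIDIA's FP4 figure), 2024

# Speed of light in vacuum, converted from km/s to km/h.
SPEED_OF_LIGHT_KMH = 299_792.458 * 3_600

ratio = BLACKWELL_OPS / INTEL_4004_IPS
car_speed_kmh = 1.0 * ratio  # a 1 km/h car scaled by the same factor

print(f"Increase: {ratio:.3g}x")
print(f"Car speed: {car_speed_kmh:.3g} km/h "
      f"(~{car_speed_kmh / SPEED_OF_LIGHT_KMH:.0f} times the speed of light)")
```

The ratio comes out at about 2.16 × 10^11, so the scaled car would move at roughly 200 times the speed of light.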

Beyond Hardware: A Civilizational Transformation

This evolution has not been merely technical. Computing went from being a niche discipline for scientists and military personnel to becoming the invisible pillar of modern life. From smartphones to language models, autonomous cars to biotechnology, every aspect of our existence is touched by this silent revolution.

As Jensen Huang, NVIDIA’s CEO, recalled during the Blackwell presentation: “For three decades we’ve pursued accelerated computing, with the goal of enabling transformative breakthroughs like deep learning and AI. Generative AI is the defining technology of our time.”

The numbers speak for themselves. According to NVIDIA, the GB200 NVL72, a rack-scale system, accelerates data processing with up to 18x faster database query performance than traditional CPUs. Blackwell also includes a dedicated decompression engine and can access large amounts of memory attached to the NVIDIA Grace CPU over a high-speed link providing 900 gigabytes per second (GB/s) of bidirectional bandwidth.

Present Challenges: When Physics Imposes Limits

However, this exponential race now faces unprecedented challenges. The classical driver that has underpinned Moore’s Law for the past 50 years, the steady miniaturization of transistors, is faltering and has been expected to flatten out around 2025. Microprocessor architects report that since around 2010, semiconductor progress across the industry has slowed below the pace predicted by Moore’s Law.

The obstacles are fundamental:

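Physical limits: The speed of light is finite and constant, which places a natural ceiling on how fast signals can propagate across a chip and therefore on how many computations it can perform per second. Transistors are also approaching the atomic scale. The most advanced chips in commercial production use processes marketed as “3 nanometer”, although that figure is a generational label rather than the literal size of a transistor; even so, some transistor features are now only a few nanometers across, comparable to the roughly 2.5 nm width of a DNA double helix.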
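One way to make the speed-of-light limit concrete is to ask how far any signal could travel during a single clock cycle. The Python sketch below computes that upper bound; 740 kHz is the 4004’s clock from the article, while 5 GHz is an illustrative modern CPU clock chosen by us as an assumption, not a figure for any specific chip.

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # metres per second, in vacuum


def distance_per_cycle_m(clock_hz: float) -> float:
    """Upper bound on how far any signal can travel during one clock cycle."""
    return SPEED_OF_LIGHT_M_S / clock_hz


# 740 kHz: Intel 4004. 5 GHz: hypothetical modern clock (illustrative assumption).
for label, clock_hz in [("Intel 4004, 740 kHz", 740e3),
                        ("Illustrative 5 GHz CPU", 5e9)]:
    print(f"{label}: {distance_per_cycle_m(clock_hz):.3g} m per cycle")
```

At 740 kHz light covers about 405 m per cycle; at 5 GHz, only about 6 cm. Signals in on-chip wiring travel well below the speed of light, so the real budget is tighter still.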

Exponential costs: Designing a new chip costs around $170 million at the 10 nm node, almost $300 million at 7 nm, and over $500 million at 5 nm.
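Why call these costs exponential? A quick check, sketched in Python below, divides each quoted figure by the previous node’s. It uses the rounded values $170M, $300M, and $500M (our simplification of “around”, “almost”, and “over”) and treats the three nodes as consecutive steps.

```python
# Rounded per-node cost figures quoted in the article (US dollars).
node_costs = {"10 nm": 170e6, "7 nm": 300e6, "5 nm": 500e6}

nodes = list(node_costs)  # dicts preserve insertion order (Python 3.7+)
factors = [node_costs[curr] / node_costs[prev]
           for prev, curr in zip(nodes, nodes[1:])]

for (prev, curr), factor in zip(zip(nodes, nodes[1:]), factors):
    print(f"{prev} -> {curr}: x{factor:.2f}")
```

Each step multiplies the cost by roughly 1.7 (about 1.76 and 1.67), and a roughly constant multiplier per generation is exactly what exponential growth looks like.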

What Lies Ahead: Beyond Silicon

In historical terms, 50 years is nothing. Yet the technological distance between 1971 and 2025 seems greater than the one between the Bronze Age and the Industrial Revolution. Technologies such as wireless communication, cloud computing, quantum technologies, and the Internet of Things (IoT) are expected to converge to drive innovation in computing, improving efficiency, connectivity, and processing power.

Alternatives are emerging:

- Quantum computing: Quantum computers are based on qubits (quantum bits) and exploit quantum effects such as superposition and entanglement, offering potential speed-ups on certain classes of problems without relying on ever-smaller transistors.

- Specialized architectures: GPUs have been used for AI training for over a decade. In recent years, Google introduced TPUs (tensor processing units) to accelerate AI, and there are now over 50 companies designing AI chips.

- Software optimization: Researchers have reported speed-ups of up to five orders of magnitude on certain applications through code optimization alone.

Final Reflection: A Human Story

As Elizabeth Jones, Intel Corporation historian, acknowledges: “It’s a story of shrinking things. And as you shrink them, you increase the potential of the places that they can go and the things that they can pass.”

The history of computing is, at its core, a human story: of vision, ingenuity, and unbridled ambition. If in 1971 an entire room was needed for what today fits on a fingernail-sized chip, how far will we go in the next 50 years?

The answer may not lie only in silicon, but in the intelligence we are learning to build. As Intel’s historian observes: “We have so much more than a calculator in our pocket—thanks to the Intel 4004.” And that “much more” is just beginning to reveal itself.

The revolution continues, and we are both its architects and its privileged witnesses.