An open-source framework that lets large language models search the web, extract information, and write research reports

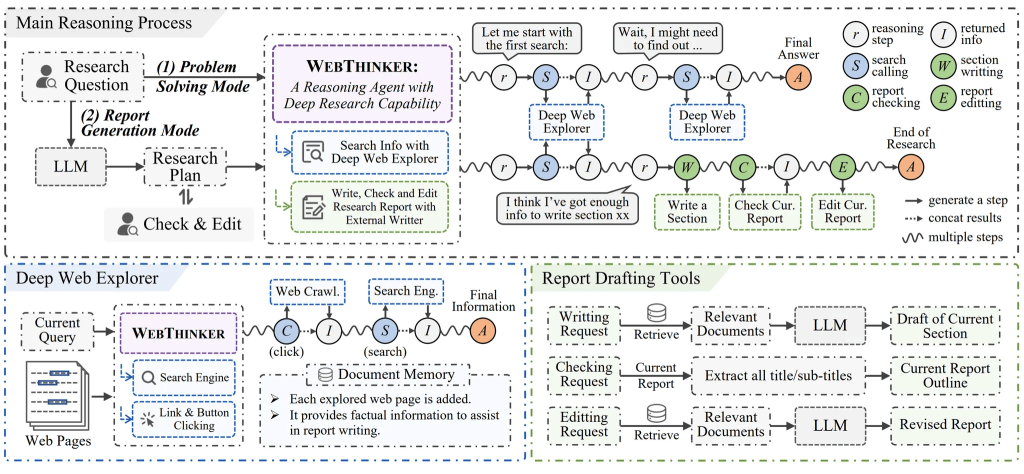

A new open-source project, WebThinker, developed by the RUC-NLPIR team at Renmin University of China, is drawing attention in the AI infrastructure and sysadmin community. Designed as a deep research framework, WebThinker extends traditional LLM-based workflows by letting models autonomously search the web, navigate content, extract information, and generate research reports, all within a single, end-to-end reasoning process.

This innovative toolkit is not just another RAG-based agent. It represents a shift toward agentic reasoning, where the model itself orchestrates actions such as searching, following links, drafting, and editing content as part of its internal task execution loop.
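To make the idea of an internal task execution loop concrete, here is a minimal sketch of a model-driven action loop. It is an illustration only, not WebThinker's actual code: the `AgentState` class, the `(tool_name, argument)` action format, the tool names, and the scripted policy that stands in for the LLM are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical action format: (tool_name, argument). Not WebThinker's real protocol.
Action = Tuple[str, str]


@dataclass
class AgentState:
    """Everything the loop accumulates while working on one task."""
    notes: List[str] = field(default_factory=list)     # text returned by tools
    draft: List[str] = field(default_factory=list)     # report sections written so far
    trace: List[Action] = field(default_factory=list)  # actions taken, in order


def run_agent(policy: Callable[[AgentState], Action],
              tools: Dict[str, Callable[[str], str]],
              max_steps: int = 10) -> AgentState:
    """Ask the policy (the "model") for an action, execute it, and repeat."""
    state = AgentState()
    for _ in range(max_steps):
        name, arg = policy(state)
        state.trace.append((name, arg))
        if name == "finish":
            break
        if name == "draft":
            state.draft.append(arg)
            continue
        if name not in tools:
            state.notes.append(f"unknown tool: {name}")
            continue
        state.notes.append(tools[name](arg))
    return state


# Stand-in tools that return canned strings instead of touching the network.
def fake_search(query: str) -> str:
    return f"results for '{query}': https://example.com/a"


def fake_open_link(url: str) -> str:
    return f"content of {url}"


def scripted_policy(state: AgentState) -> Action:
    """A fixed script standing in for the LLM's decisions."""
    script = [
        ("search", "vLLM tensor parallelism"),
        ("open_link", "https://example.com/a"),
        ("draft", "Section 1: summary of what was found."),
        ("finish", ""),
    ]
    return script[len(state.trace)]


if __name__ == "__main__":
    final = run_agent(scripted_policy,
                      {"search": fake_search, "open_link": fake_open_link})
    print(final.trace)
    print(final.draft)
```

The point of the sketch is the control flow: the caller supplies tools, but the policy decides which one to invoke next and when to stop. In a real deployment the scripted policy would be replaced by a call to the served model, and `max_steps` acts as a safety cap on runaway loops.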

Why It Matters for Sysadmins

For system administrators and IT teams managing infrastructure for research, intelligence gathering, or internal knowledge automation, WebThinker presents a compelling use case. The framework may reduce operational time and cost in domains that demand structured information synthesis, from evaluating compliance reports to drafting security audits or internal technical documentation.

Its modular Python-based architecture, compatibility with modern serving stacks like vLLM, and support for tools such as Crawl4AI make it sysadmin-friendly and easy to integrate into existing ML inference pipelines.

Key Features of WebThinker

- Autonomous Deep Web Exploration: The system allows LLMs to navigate pages on their own by clicking through links, interacting with buttons, and issuing follow-up queries to dive deeper into content.

- Think-Search-Draft Workflow: WebThinker enables real-time knowledge acquisition and uses the gathered information to generate coherent, context-aware sections of technical or scientific reports.

- Tool Integration: LLMs are equipped with dedicated tools for:

  - Drafting report sections

  - Reviewing and verifying content

  - Rewriting or expanding segments

- Predictable Task Execution: With task caching, search path tracking, and evaluation support, the system is designed to behave predictably under heavy, structured workloads.
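As a rough illustration of how task caching and search path tracking can work together, the sketch below wraps an arbitrary search function with an on-disk cache and an ordered log of queries. The `CachedSearch` class, its file layout, and the `backend` function are hypothetical and are not taken from the WebThinker codebase.

```python
import hashlib
import json
import tempfile
from pathlib import Path
from typing import Callable, List


class CachedSearch:
    """Wraps a search function with an on-disk cache and an ordered query log."""

    def __init__(self, search_fn: Callable[[str], List[str]], cache_dir: str) -> None:
        self.search_fn = search_fn
        self.cache_dir = Path(cache_dir)
        self.cache_dir.mkdir(parents=True, exist_ok=True)
        self.path: List[dict] = []  # one entry per query, in call order

    def _cache_file(self, query: str) -> Path:
        # Hash the normalized query so any string maps to a safe filename.
        key = hashlib.sha256(query.strip().lower().encode("utf-8")).hexdigest()
        return self.cache_dir / f"{key}.json"

    def search(self, query: str) -> List[str]:
        cache_file = self._cache_file(query)
        if cache_file.exists():
            # Cache hit: reuse stored results and skip the backend call.
            results = json.loads(cache_file.read_text(encoding="utf-8"))
            hit = True
        else:
            results = self.search_fn(query)
            cache_file.write_text(json.dumps(results), encoding="utf-8")
            hit = False
        self.path.append({"query": query, "cache_hit": hit, "n_results": len(results)})
        return results


if __name__ == "__main__":
    def backend(query: str) -> List[str]:
        # Stand-in for a real search API call.
        return [f"https://example.com/search?q={query.replace(' ', '+')}"]

    with tempfile.TemporaryDirectory() as tmp:
        cached = CachedSearch(backend, tmp)
        cached.search("compliance report template")
        cached.search("Compliance Report Template")  # same query after normalization
        for step in cached.path:
            print(step)
```

Repeated queries are served from disk, which keeps benchmark reruns cheap and reproducible, while the `path` log gives an operator an audit trail of what the agent looked up and in which order.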

Benchmarks and Results

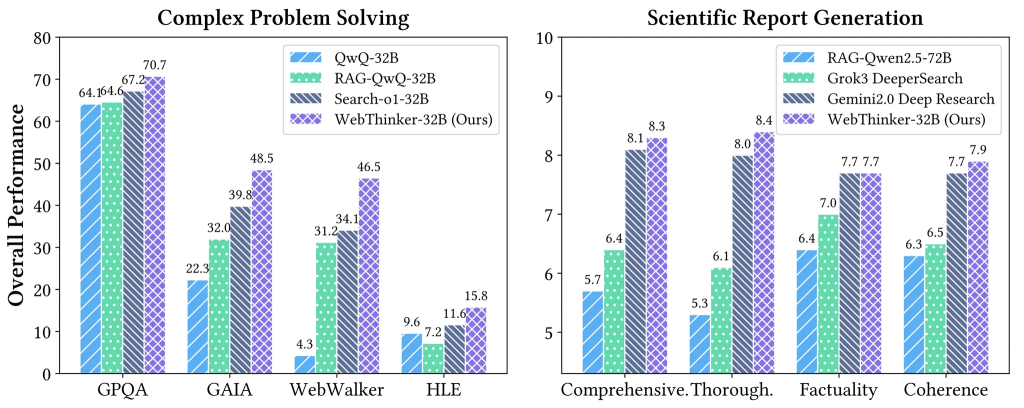

In the evaluations reported by its authors, WebThinker outperforms many of its peers (including Grok-3 and Gemini 2.0 Deep Research) on reasoning-intensive datasets like GPQA, GAIA, WebWalkerQA, and the highly challenging Humanity’s Last Exam (HLE).

Its flagship configuration, built on the QwQ-32B reasoning model, scores well on both precision and content coherence. For sysadmin teams involved in AI-assisted report validation or automated reasoning systems, this can translate into better task reliability and faster turnaround.

Deployment: What You Need

WebThinker supports:

- Python 3.9+ and Conda-based environments

- Large models such as QwQ-32B and Qwen2.5-32B-Instruct

- Model serving via vLLM (with optional auxiliary model support)

- Web search via the Bing Search API and enhanced page parsing through Crawl4AI

You can run the system in multiple modes (e.g., single-question solving, benchmark evaluation, or full report generation). It also includes tools for automatic evaluation using LLMs like GPT-4o or DeepSeek-R1.
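Before wiring the full framework together, it can help to confirm that the vLLM-served model responds at all. The sketch below builds a request for the OpenAI-compatible chat endpoint that a vLLM server exposes, using only the Python standard library. The base URL, port, model name, and sampling values are assumptions for illustration; the model name must match whatever the server was actually started with. This is a connectivity check, not part of WebThinker itself.

```python
import json
import urllib.request
from typing import Dict


def build_chat_request(base_url: str, model: str, question: str,
                       max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request for a vLLM server."""
    payload: Dict[str, object] = {
        "model": model,  # assumed name; must match the served model
        "messages": [{"role": "user", "content": question}],
        "max_tokens": max_tokens,
        "temperature": 0.7,  # illustrative value, not a recommended setting
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url: str, model: str, question: str, timeout: float = 120.0) -> str:
    """Send the request and return the text of the first completion choice."""
    request = build_chat_request(base_url, model, question)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Assumes a vLLM server is already listening locally on port 8000.
    print(ask("http://localhost:8000", "Qwen/QwQ-32B", "Reply with the word 'ready'."))
```

Keeping request construction separate from sending makes the payload easy to inspect or log without a running server, which is useful when debugging a deployment behind a proxy or load balancer.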

Sysadmin Use Cases

- Automating internal documentation and compliance reports

- Enhancing internal knowledge bases with up-to-date external data

- Performing security research with multi-page web exploration

- AI-assisted training material generation

- Lightweight knowledge synthesis in edge AI environments

Project page: https://github.com/RUC-NLPIR/WebThinker

arXiv paper: https://arxiv.org/abs/2504.21776

License: MIT

For sysadmins managing high-demand AI workloads or looking to build internal research agents, WebThinker represents a next-gen open-source tool with real-world applicability and enterprise-grade potential.