Motia aims to solve a long-standing backend pain point: fragmentation across API frameworks, queue managers, schedulers, streaming engines, workflow orchestrators, AI agent runtimes, and observability/state layers. Its proposition is to unify all of these around one core primitive: the Step. Much as React simplified front-end development with components, Motia tries to redefine the backend with Steps that are auto-discovered, wired together automatically, and ship with observability and state from day one.

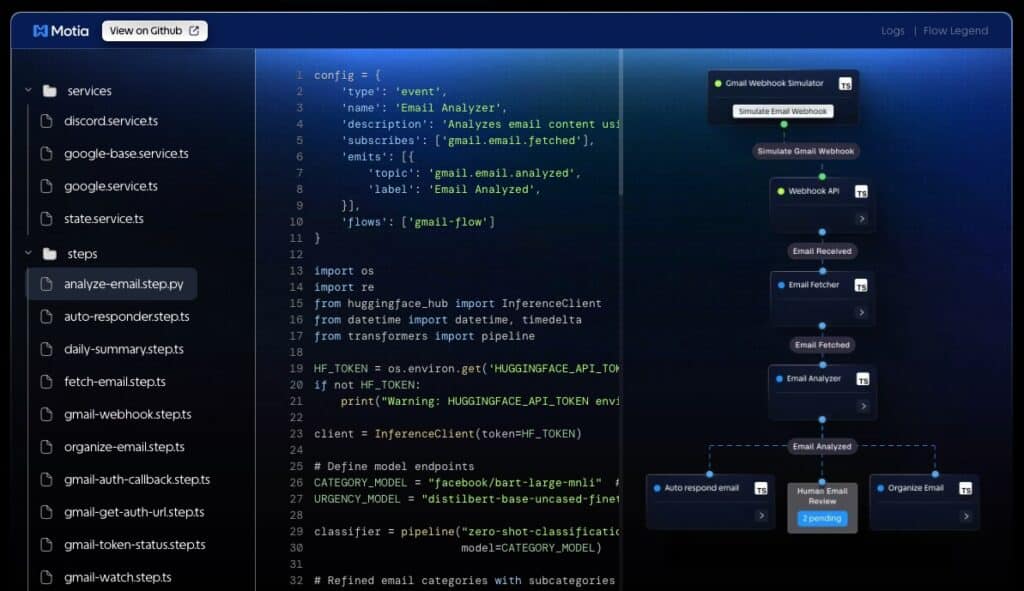

The project, available on GitHub, presents itself as a polyglot runtime with stable support for JavaScript, TypeScript, and Python (Ruby in beta, Go "coming soon"). It also ships a visual Workbench for debugging, tracing, and understanding how events and state flow after an endpoint fires or a scheduled task runs. A cloud offering (Motia Cloud) is in beta, and the CLI promises a bootstrap in "under 60 seconds."

One core primitive: the Step

In Motia, a Step is just a file with a config object and a handler. The runtime auto-discovers these files and builds the connections for you:

Built-in Step types:

- api: HTTP endpoints (REST).

- event: topic subscriptions (background processing).

- cron: recurring scheduled jobs.

- noop: manual triggers or external integrations.

A minimal example tells the story: an API Step publishes a message.sent event and an event Step consumes it. With two files you get an endpoint, a queue, and a worker, with no extra frameworks required. That's the pitch: less glue, more focus on business logic.
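To make the two-file idea concrete without depending on Motia's actual API, here is a toy, in-memory model of it. Everything in it (the `Step` and `StepConfig` types, the `ToyRuntime` class, the `/messages` path, the field names) is hypothetical and only mirrors the shape described above: a config object plus a handler, with the runtime doing the wiring. In Motia, Steps are separate files discovered on disk and events go through a real queue; here `register` stands in for discovery and a synchronous loop stands in for the queue.

```typescript
// Toy model of "config + handler" Steps. NOT Motia's API: all names are hypothetical.
type Emit = (topic: string, data: unknown) => void;
type Handler = (input: any, ctx: { emit: Emit }) => unknown;

type StepConfig =
  | { type: "api"; name: string; path: string; emits: string[] }
  | { type: "event"; name: string; subscribes: string[]; emits: string[] };

interface Step {
  config: StepConfig;
  handler: Handler;
}

class ToyRuntime {
  private routes = new Map<string, Step>();
  private topics = new Map<string, Step[]>();

  // Stand-in for auto-discovery: index each Step by what its config declares.
  register(...steps: Step[]): void {
    for (const step of steps) {
      const cfg = step.config;
      if (cfg.type === "api") {
        this.routes.set(cfg.path, step);
      } else {
        for (const topic of cfg.subscribes) {
          const subscribers = this.topics.get(topic) ?? [];
          subscribers.push(step);
          this.topics.set(topic, subscribers);
        }
      }
    }
  }

  // Stand-in for the queue: deliver the event synchronously to every subscriber.
  // (`emits` is declared in the configs but not enforced by this toy.)
  private emit: Emit = (topic, data) => {
    for (const step of this.topics.get(topic) ?? []) {
      step.handler(data, { emit: this.emit });
    }
  };

  // Simulate an HTTP request reaching an api Step.
  call(path: string, body: unknown): unknown {
    const step = this.routes.get(path);
    if (!step) throw new Error(`no api Step registered for ${path}`);
    return step.handler(body, { emit: this.emit });
  }
}

// "File 1": an api Step that publishes message.sent.
const sendMessage: Step = {
  config: { type: "api", name: "SendMessage", path: "/messages", emits: ["message.sent"] },
  handler: (body: { text: string }, ctx: { emit: Emit }) => {
    ctx.emit("message.sent", { text: body.text });
    return { status: 202 };
  },
};

// "File 2": an event Step that consumes message.sent.
const delivered: string[] = [];
const deliverMessage: Step = {
  config: { type: "event", name: "DeliverMessage", subscribes: ["message.sent"], emits: [] },
  handler: (data: { text: string }) => {
    delivered.push(data.text);
  },
};

const runtime = new ToyRuntime();
runtime.register(sendMessage, deliverMessage);
const response = runtime.call("/messages", { text: "hello" });
// response is { status: 202 }; delivered now holds "hello".
console.log(response, delivered);
```

Adding a second consumer of message.sent means registering one more Step; neither existing Step has to change, which is the decoupling the event model is meant to buy.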

Polyglot, with observability baked in

Motia encourages mixing languages in the same flow (e.g., TypeScript for APIs and Python for heavy compute/ML) and visualizing dependencies between Steps: who emits, who subscribes, how long each hop takes, and how state evolves. The Workbench includes traces, logs, metrics, and a visual debugger that shows what happened after every invocation.
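A dependency view like this can, in principle, be derived entirely from what Steps declare. The sketch below assumes each Step lists the topics it `emits` and `subscribes` to and computes the producer-to-consumer edges a visual tool could draw. The `StepDecl` shape and the Step names are illustrative, not Motia's types; the point is that a Python Step slots in between two TypeScript Steps because edges are keyed on topic names, not on language.

```typescript
// Hypothetical declaration shape (illustrative only, not Motia's types).
interface StepDecl {
  name: string;
  language: "typescript" | "python";
  emits: string[];
  subscribes: string[];
}

interface Edge {
  from: string;
  to: string;
  topic: string;
}

// Derive "who emits -> who subscribes" edges purely from the declarations.
function buildFlowGraph(steps: StepDecl[]): Edge[] {
  const edges: Edge[] = [];
  for (const producer of steps) {
    for (const topic of producer.emits) {
      for (const consumer of steps) {
        if (consumer.subscribes.includes(topic)) {
          edges.push({ from: producer.name, to: consumer.name, topic });
        }
      }
    }
  }
  return edges;
}

// A TS API, a Python compute Step, and a TS notifier, linked only by topic names.
const steps: StepDecl[] = [
  { name: "UploadApi", language: "typescript", emits: ["image.uploaded"], subscribes: [] },
  { name: "ClassifyImage", language: "python", emits: ["image.classified"], subscribes: ["image.uploaded"] },
  { name: "NotifyUser", language: "typescript", emits: [], subscribes: ["image.classified"] },
];

for (const e of buildFlowGraph(steps)) {
  console.log(`${e.from} --${e.topic}--> ${e.to}`);
}
// UploadApi --image.uploaded--> ClassifyImage
// ClassifyImage --image.classified--> NotifyUser
```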

Notable out-of-the-box bits:

- REST validation from the start.

- Event-driven architecture without gnarly config.

- Zero-config local dev and a unified CLI: npx motia@latest create, then npx motia dev.

- AI-assisted development guides compatible with Cursor and other tools (the AGENTS.md format).
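REST validation from the start means a malformed request is rejected before any business logic runs. The sketch below illustrates that ordering with a tiny hand-rolled checker; `BodySchema`, `validateBody`, and `handleRequest` are hypothetical names, and the code does not reproduce how Motia itself declares or enforces request schemas.

```typescript
// Hypothetical, minimal schema format: field name -> expected primitive type.
type FieldType = "string" | "number" | "boolean";
type BodySchema = Record<string, FieldType>;

// Return a list of problems; an empty list means the body matches the schema.
function validateBody(schema: BodySchema, body: unknown): string[] {
  if (typeof body !== "object" || body === null || Array.isArray(body)) {
    return ["body must be a JSON object"];
  }
  const record = body as Record<string, unknown>;
  const errors: string[] = [];
  for (const [field, expected] of Object.entries(schema)) {
    if (!(field in record)) {
      errors.push(`missing field "${field}"`);
    } else if (typeof record[field] !== expected) {
      errors.push(`field "${field}" must be a ${expected}`);
    }
  }
  return errors;
}

// Validate first; only call the handler with a well-formed body.
function handleRequest(
  schema: BodySchema,
  body: unknown,
  handler: (body: Record<string, unknown>) => { status: number; body: unknown },
): { status: number; body: unknown } {
  const errors = validateBody(schema, body);
  if (errors.length > 0) return { status: 400, body: { errors } };
  return handler(body as Record<string, unknown>);
}

const createUserSchema: BodySchema = { email: "string", age: "number" };
const createUser = (b: Record<string, unknown>) => ({ status: 201, body: { email: b.email } });

// Valid body reaches the handler (201); missing "age" is rejected with 400.
console.log(handleRequest(createUserSchema, { email: "a@example.com", age: 30 }, createUser));
console.log(handleRequest(createUserSchema, { email: "a@example.com" }, createUser));
```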

From APIs to AI agents (and back)

Another key piece is agentic AI. Motia isn’t trying to be an ML framework; it integrates Node/Python AI libraries and connects agents with APIs, queues, and streams using the same Step model and the same observability plane. The repo ships patterns and ready-to-run examples:

- ChessArena.ai (production-grade example): auth, multi-agent evaluation (OpenAI, Claude, Gemini, Grok), Stockfish in Python, real-time streaming, leaderboards, and deployment on Motia Cloud.

- More blueprints: Research Agent, Streaming Chatbot, Gmail Automation, GitHub PR Manager, and Finance Agent, among 20+ examples in total.

The idea is to write AI workflows like you write APIs: with deterministic Steps, tests/evals, and integrated monitoring.
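One common way to get deterministic, testable behavior around a model call (a general pattern, not something the source attributes to Motia) is to put the model behind a small interface and constrain its output to a fixed set of values. In the sketch, `ModelClient`, `triageTicket`, and the queue names are hypothetical, and the client is synchronous for brevity even though real LLM clients are asynchronous. Stub clients make the Step testable without network access, which is the same seam an eval harness would use.

```typescript
// Hypothetical adapter shape: any LLM SDK could be wrapped to fit it.
interface ModelClient {
  complete(prompt: string): string;
}

type Queue = "billing" | "tech" | "other";

// Hypothetical agent Step logic: all nondeterminism lives behind `model`.
function triageTicket(ticket: { subject: string }, model: ModelClient): { queue: Queue } {
  const raw = model
    .complete(`Answer with one word (billing, tech, or other): ${ticket.subject}`)
    .trim()
    .toLowerCase();
  // Map free-form model output onto a closed set so downstream Steps always see a known value.
  const queue: Queue = raw === "billing" || raw === "tech" ? raw : "other";
  return { queue };
}

// Stub clients stand in for a real model in tests and evals.
const saysBilling: ModelClient = { complete: () => "  Billing\n" };
const rambles: ModelClient = { complete: () => "Probably a tech issue?" };

console.log(triageTicket({ subject: "Charged twice" }, saysBilling).queue); // billing
console.log(triageTicket({ subject: "App crashes" }, rambles).queue); // other
```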

Languages and roadmap

- Stable: JS, TS, Python

- In progress: Ruby (beta) and Go (coming soon)

Public roadmap highlights:

- Python types, RBAC for streams, Workbench plugins, queue strategies, Reactive Steps, point-in-time triggers, core rewrite in Go or Rust, faster deploys, and built-in database support.

Getting started (quick start)

- Create a project

npx motia@latest create

A small wizard asks for a template, project name, and language.

- Launch the Workbench

npx motia dev

The Workbench is then served at http://localhost:3000, where you can inspect Steps, events, state, and traces.

How it compares to no-code, agent-only runtimes, or fully custom stacks

The repo includes a conceptual comparison. In short:

- No-code: easy start, but limited and often locked-in.

- Agent runtimes: powerful for AI, but often single-language, with limited visualization and probabilistic execution that’s hard to audit.

- Custom glue (microservices + queues + cron + observability): maximum control, but high cost and scattered tooling.

- Motia: code-first, polyglot, with a built-in Workbench, deterministic Step execution, and first-class agentic support.

A concrete example: cron + external services

Among the examples there's a cron Step that walks Trello lists, flags overdue items, and posts to Slack, all with centralized traces. The takeaway: scheduled jobs, external APIs, and events are modeled the same way as an endpoint, and observed from the same console.
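The core of such a job can be a pure function over the fetched cards, which keeps it testable apart from the Trello and Slack calls. A sketch under assumptions: the `Card` shape is loosely modeled on Trello cards (a name, an optional due date, a completion flag), `findOverdue` and `formatSlackSummary` are hypothetical helpers, and fetching cards and posting the text to Slack are left out. The current time is passed in rather than read inside, so a test can pin it.

```typescript
// Hypothetical card shape, loosely modeled on Trello cards (an assumption).
interface Card {
  name: string;
  list: string;
  due: string | null; // ISO 8601 timestamp, or null when no due date is set
  dueComplete: boolean;
}

// Overdue = has a due date in the past and is not marked complete.
function findOverdue(cards: Card[], now: Date): Card[] {
  return cards.filter(
    (c) => c.due !== null && !c.dueComplete && new Date(c.due).getTime() < now.getTime(),
  );
}

// Plain-text summary a cron Step could post to a Slack channel.
function formatSlackSummary(overdue: Card[]): string {
  if (overdue.length === 0) return "No overdue cards.";
  const lines = overdue.map((c) => `- ${c.name} (${c.list}, due ${c.due})`);
  return [`${overdue.length} overdue card(s):`, ...lines].join("\n");
}

const now = new Date("2025-01-15T12:00:00Z");
const cards: Card[] = [
  { name: "Ship v2", list: "Doing", due: "2025-01-10T00:00:00Z", dueComplete: false },
  { name: "Write docs", list: "Todo", due: "2025-01-20T00:00:00Z", dueComplete: false },
  { name: "Fix login", list: "Done", due: "2025-01-05T00:00:00Z", dueComplete: true },
  { name: "Backlog idea", list: "Todo", due: null, dueComplete: false },
];

console.log(formatSlackSummary(findOverdue(cards, now)));
// 1 overdue card(s):
// - Ship v2 (Doing, due 2025-01-10T00:00:00Z)
```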

Who should care

- Startups wanting to go from prototype to production without rewriting queues/orchestration mid-flight.

- Polyglot teams (TS for edge/APIs, Python for AI/compute) wanting one runtime and shared traces.

- Workflow-heavy platforms (approvals, syncs, integrations) stuck in backend-glue spaghetti.

- Agent builders who need instrumentation, state, and deterministic control around AI.

Frequently Asked Questions

Does Motia replace Express/FastAPI/Temporal/Sidekiq/Celery?

It’s not a drop-in for each tool; it’s a unified runtime. You can expose endpoints (like Express/FastAPI), process background jobs (queues/events), schedule cron, and compose workflows with the same primitive. The promise is less ad-hoc integration and coherent observability/state.

How does it fit with AI agents and RAG?

Agents are modeled as Steps. You chain them with other Steps (API → agent → Python worker → result stream), trace each hop, and test them with the same tools. Motia doesn't replace your AI libraries; it orchestrates them in an auditable flow.
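Tracing each hop boils down to recording, per request, which Step ran and for how long, all tied together by one trace id. A minimal in-process sketch of that idea, assuming hypothetical `Span` and `Tracer` types (not Motia's or the Workbench's data model). In a real polyglot flow the "PythonWorker" hop would run in a separate process, so the trace id would have to travel with the event, for example as a payload field. A fake clock keeps the durations deterministic.

```typescript
// Hypothetical span record; not the Workbench's actual data model.
interface Span {
  traceId: string;
  step: string;
  startMs: number;
  endMs: number;
}

class Tracer {
  readonly spans: Span[] = [];
  private readonly now: () => number;

  // The clock is injectable so tests can make durations deterministic.
  constructor(now: () => number = () => Date.now()) {
    this.now = now;
  }

  // Run one hop and record its start/end under the shared trace id, even if it throws.
  hop<T>(traceId: string, step: string, fn: () => T): T {
    const startMs = this.now();
    try {
      return fn();
    } finally {
      this.spans.push({ traceId, step, startMs, endMs: this.now() });
    }
  }

  // Per-hop durations for one request, in execution order.
  timeline(traceId: string): string[] {
    return this.spans
      .filter((s) => s.traceId === traceId)
      .map((s) => `${s.step}: ${s.endMs - s.startMs} ms`);
  }
}

// Fake clock: each hop advances it by a fixed amount.
let clock = 0;
const tracer = new Tracer(() => clock);
const traceId = "req-1";

const question = tracer.hop(traceId, "ApiStep", () => {
  clock += 5;
  return "What is RAG?";
});
const draft = tracer.hop(traceId, "AgentStep", () => {
  clock += 120;
  return `Draft answer to: ${question}`;
});
tracer.hop(traceId, "PythonWorker", () => {
  clock += 40;
  return draft.toUpperCase();
});

// ["ApiStep: 5 ms", "AgentStep: 120 ms", "PythonWorker: 40 ms"]
console.log(tracer.timeline(traceId));
```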

Can I mix TS and Python in one flow?

Yes. That’s a headline feature: multi-language workflows. One Step can be TypeScript, the next Python (e.g., for compute or models). The Workbench keeps the timeline and logs across the whole path.

What about databases and resiliency?

The roadmap mentions built-in DB support and improvements to queue strategies and deploy times. Today, you plug in your services (DB/queues) and benefit from Steps, observability, and unified workflows; mid-term, the goal is to further reduce the infra glue.